Objective 2.2: Determine Physical Infrastructure Requirements for a VMware NSX Implementation

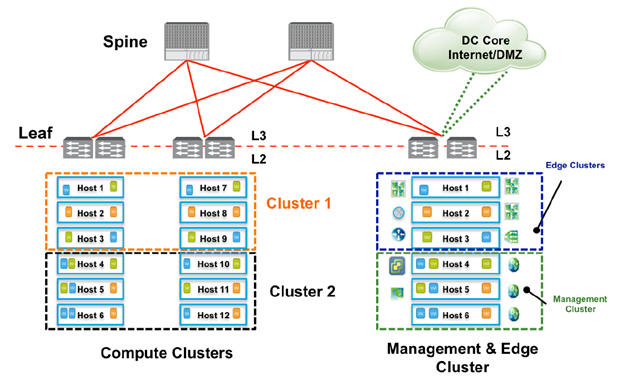

Discern management and edge cluster requirements

- Management Cluster

- Hosts vCenter Server, NSX Manager, NSX Controllers, Cloud Management Systems (CMS), and other shared components.

- Compute and memory requirements are known in advance: they are the sum of the minimum supported configurations of the hosted components.

- LACP can be enabled to improve the resiliency of the management components.

- Does not require VXLAN provisioning (except in small designs where the Edge and Management clusters are collapsed, in which case VXLAN is still required).

- Compute Cluster

- Designed with the following considerations in mind:

- Host density per rack and automation dependencies

- Workload availability and mobility

- Connectivity –> single VTEP vs. multiple VTEPs

- Topology implications and IP addressing for VTEP and VMkernel interfaces

- Lifecycle and workload-driver considerations

- Growth and changes

- Multi-rack, zoning

- The same four VLANs are required in each rack (Management, vMotion, Storage, VXLAN)

- Workload-centric allocation; compliance and SLA requirements can be met via:

- Cluster separation

- Separate VXLANs and transport zones

- Per-tenant DLR and Edge routing domains

- DRS and resource reservations

- Edge cluster

- Provides on-ramp and off-ramp connectivity to the physical world

- Allows communication with physical devices connected via the NSX L2 bridge

- Hosts the DLR Control VM for distributed logical routing

- May host centralized logical or physical services

- NSX Controllers can be hosted in the Edge cluster when a dedicated vCenter Server is used to manage the compute and edge resources

- Edge cluster resources have an anti-affinity requirement, either to protect the active-standby configuration or to maintain bandwidth availability during a failure

- Needs and characteristics:

- Edge VMs are CPU-centric, with consistent memory requirements

- Additional VLANs are required for north-south routing and bridging

- The recommended teaming option for Edge hosts is “route based on SRC-ID” (route based on originating virtual port). LACP is highly discouraged because of vendor-specific requirements for routing peering over LACP.
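
The anti-affinity requirement for Edge cluster resources can be checked against a placement inventory. Below is a minimal Python sketch. It assumes a hypothetical `placement` map from Edge VM name to ESXi host name, which you would export from vCenter with whatever tooling you use. All VM and host names are made up. The sketch flags any Edge group (an active/standby pair, or a set of Edges sharing bandwidth) whose members share a host.

```python
def edge_anti_affinity_violations(
    placement: dict[str, str],
    edge_groups: list[tuple[str, ...]],
) -> list[tuple[str, ...]]:
    """Return every Edge group whose members do not all run on distinct hosts.

    placement   -- hypothetical map of Edge VM name -> ESXi host name
    edge_groups -- Edge VMs that must not share a host, e.g. an active/standby pair
    """
    violations = []
    for group in edge_groups:
        hosts = [placement[vm] for vm in group]
        # Two members on one host means a single host failure takes out both.
        if len(set(hosts)) < len(hosts):
            violations.append(group)
    return violations


# Hypothetical inventory: edge-02's active and standby VMs landed on the same host.
placement = {
    "edge-01-0": "esx-edge-a",
    "edge-01-1": "esx-edge-b",
    "edge-02-0": "esx-edge-a",
    "edge-02-1": "esx-edge-a",
}
groups = [("edge-01-0", "edge-01-1"), ("edge-02-0", "edge-02-1")]
print(edge_anti_affinity_violations(placement, groups))
# [('edge-02-0', 'edge-02-1')]
```

The sketch only detects violations. In vSphere, one way to enforce the separation is a DRS VM-VM anti-affinity ("Separate Virtual Machines") rule.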

Differentiate minimum/optimal physical infrastructure requirements for a VMware NSX implementation

- System requirements for NSX: You can install one NSX Manager per vCenter Server, one instance of Guest Introspection and Data Security per ESXi™ host, and multiple NSX Edge instances per datacenter

- Hardware (or virtual hardware)

- NSX Manager –> 16 GB RAM (24 GB for certain deployment sizes), 4 vCPU (8 for certain deployment sizes), 60 GB vmdk space

- NSX Controller –> 4 GB RAM, 4 vCPU, 20 GB vmdk space

- NSX Edge Compact –> 512 MB RAM, 1 vCPU, 500 MB vmdk space

- NSX Edge Large –> 1 GB RAM, 2 vCPU, 500 MB vmdk space + 512 MB

- NSX Edge Quad Large –> 1 GB RAM, 4 vCPU, 500 MB vmdk space + 512 MB

- NSX Edge X-Large –> 8 GB RAM, 6 vCPU, 500 MB vmdk space + 2 GB

- Guest Introspection –> 1 GB RAM, 2 vCPU, 4 GB vmdk space

- NSX Data Security –> 512 MB RAM, 1 vCPU, 6 GB vmdk space per ESXi host

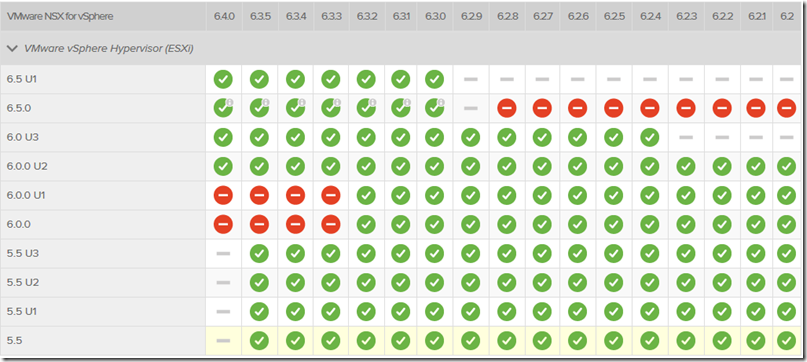
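
The management cluster's compute and memory needs are the sum of the minimum supported configurations, so they can be tallied directly from the hardware list above. Below is a minimal Python sketch. It assumes one NSX Manager and the recommended three-node NSX Controller cluster. vCenter Server and any CMS are deliberately left out because this section gives no figures for them, so their requirements must be added on top. `VmSize` and `total` are hypothetical helper names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    ram_gb: float
    vcpu: int
    disk_gb: float


# Minimum figures from the hardware list above (NSX 6.2 system requirements).
NSX_MANAGER = VmSize(ram_gb=16, vcpu=4, disk_gb=60)
NSX_CONTROLLER = VmSize(ram_gb=4, vcpu=4, disk_gb=20)


def total(components: list[tuple[VmSize, int]]) -> VmSize:
    """Sum (size, instance count) pairs into one aggregate requirement."""
    return VmSize(
        ram_gb=sum(size.ram_gb * count for size, count in components),
        vcpu=sum(size.vcpu * count for size, count in components),
        disk_gb=sum(size.disk_gb * count for size, count in components),
    )


# Assumption: one NSX Manager plus a three-node controller cluster.
# vCenter Server and any CMS are not included; add their own requirements.
nsx_footprint = total([(NSX_MANAGER, 1), (NSX_CONTROLLER, 3)])
print(nsx_footprint)  # VmSize(ram_gb=28, vcpu=16, disk_gb=120)
```

To model the larger deployment sizes, substitute the 24 GB / 8 vCPU NSX Manager figures.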

- Software

- Check the VMware Product Interoperability Matrix: http://partnerweb.vmware.com/comp_guide/sim/interop_matrix.php

- ESXi

- vCenter

- For an NSX Manager to participate in a cross-vCenter NSX deployment, the following conditions are required:

- NSX Manager >= 6.2

- NSX Controller >= 6.2

- vCenter Server >= 6.0

- ESXi >= 6.0 (hosts must be prepared with NSX VIBs >= 6.2)

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf
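
The cross-vCenter prerequisites listed above amount to a minimum-version comparison per component. Below is a minimal Python sketch. It assumes versions are plain dotted numbers such as "6.2.4"; build numbers and update suffixes like "U2" would need extra parsing. The inventory keys are hypothetical labels, not names from any VMware API.

```python
# Minimum versions for cross-vCenter NSX, taken from the list above.
CROSS_VC_MINIMUMS = {
    "nsx_manager": "6.2",
    "nsx_controller": "6.2",
    "vcenter": "6.0",
    "esxi": "6.0",
    "esxi_nsx_vibs": "6.2",
}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn "6.2.4" into (6, 2, 4) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def cross_vc_gaps(inventory: dict[str, str]) -> list[str]:
    """List every component that is missing or below its minimum version."""
    gaps = []
    for component, minimum in CROSS_VC_MINIMUMS.items():
        found = inventory.get(component)
        if found is None or parse_version(found) < parse_version(minimum):
            gaps.append(f"{component}: found {found}, need >= {minimum}")
    return gaps


# Hypothetical site inventory with ESXi still on 5.5.
site = {
    "nsx_manager": "6.2.4",
    "nsx_controller": "6.2.4",
    "vcenter": "6.0.0",
    "esxi": "5.5",
    "esxi_nsx_vibs": "6.2.4",
}
print(cross_vc_gaps(site))
# ['esxi: found 5.5, need >= 6.0']
```

Tuple comparison handles differing lengths sensibly here: (6, 2, 4) is not less than (6, 2), so a patch release satisfies its minor-version minimum.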

Component sizing (e.g., Compact to X-Large Edge) and configuration flexibility allow NSX to be adopted across a wide range of environments. Common factors affecting sizing and configuration are as follows:

- The number of hosts in the deployment:

- Small: 3-10 hosts

- Medium: 10-100 hosts

- Large: more than 100 hosts

- Workload behavior, and whether NSX components are mixed with regular workloads

- Multiple vCenter Servers are not a requirement, though they offer great flexibility and cross-vCenter mobility with the NSX 6.2 and ESXi 6.x releases

- NSX component placement restrictions depend on vCenter design, collapsed clustering options, and other SLAs:

- Controllers must reside in the vCenter Server that the NSX Manager registers with

- Controllers should reside in the Edge cluster when a dedicated vCenter Server is used to manage the compute and edge resources

- Edge component placement and properties, as described in Edge Design & Deployment Considerations, and the Edge vCPU requirements must also be considered

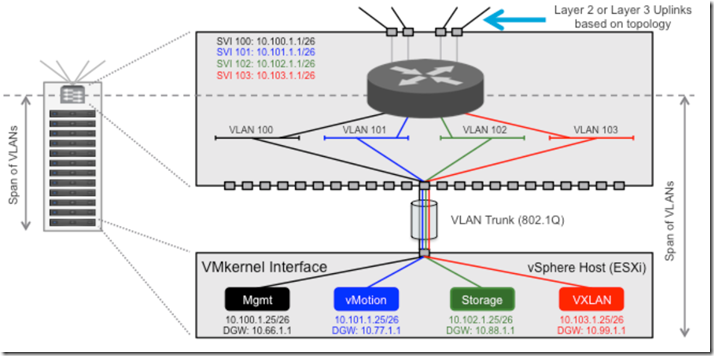
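
The host-count bands above overlap at their edges: 10 appears in both small and medium, and 100 in both medium and large. Any classifier therefore has to pick a side. Below is a minimal Python sketch. Its boundary choice is an assumption: 10 hosts count as small and 100 as medium. The band names come from the list.

```python
def deployment_size(host_count: int) -> str:
    """Map a host count to the small/medium/large bands listed above.

    Assumption: boundary values go to the smaller band (10 -> small, 100 -> medium).
    """
    if host_count < 3:
        raise ValueError("the sizing bands start at 3 hosts")
    if host_count <= 10:
        return "small"
    if host_count <= 100:
        return "medium"
    return "large"


for hosts in (3, 10, 11, 100, 101):
    print(hosts, deployment_size(hosts))
```
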

Determine how traffic types are handled in a physical infrastructure

- ESXi Host Traffic Types: Common traffic types of interest include overlay, management, vSphere vMotion, and storage traffic

- Overlay traffic is a new traffic type that carries all virtual machine communication, encapsulated in UDP (VXLAN) frames

- The other types of traffic are usually leveraged across the overall server infrastructure

- Different traffic types can be segregated via VLANs, enabling clear separation from an IP addressing standpoint

- In the vSphere architecture, specific internal interfaces are defined on each ESXi host to source those different types of traffic

- VXLAN Traffic: Virtual machines connected to one of the VXLAN-based logical L2 networks use this traffic type to communicate

- Management Traffic: Sourced and terminated by the management VMkernel interface on the host

- vMotion Traffic: The vSphere vMotion VMkernel interface on each host is used to move virtual machine state. On a 10GbE NIC, eight simultaneous vSphere vMotion migrations can be performed.

- Storage Traffic: A VMkernel interface provides access to shared or non-directly attached storage –> NAS and iSCSI
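
To make the overlay encapsulation concrete: VXLAN (RFC 7348) prepends an 8-byte header carrying a 24-bit segment ID (VNI) to the original Ethernet frame, then wraps the result in UDP and IP. Below is a minimal Python sketch that builds that header and computes the transport MTU the encapsulation needs. It assumes an IPv4 underlay without IP options; VNI 5001 is an arbitrary example. The resulting 50 bytes of overhead (54 with an inner 802.1Q tag) is why NSX guidance calls for a transport-network MTU of at least 1600 bytes.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348; all other flag bits are reserved

# Header sizes in bytes, assuming an IPv4 underlay without IP options.
INNER_ETHERNET = 14  # inner frame's Ethernet header (no 802.1Q tag)
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4 = 20


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI (network byte order)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def required_transport_mtu(inner_ip_mtu: int = 1500, inner_vlan_tag: bool = False) -> int:
    """IP MTU the VTEP-to-VTEP network needs to carry a full-size inner packet."""
    inner_frame = inner_ip_mtu + INNER_ETHERNET + (4 if inner_vlan_tag else 0)
    return inner_frame + VXLAN_HEADER + UDP_HEADER + OUTER_IPV4


print(vxlan_header(5001).hex())  # 0800000000138900
print(required_transport_mtu())  # 1550
```
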

Determine use cases for available virtual architectures

NSX Primary Use Cases:

- Security –> Micro-segmentation, end-to-end DMZ, and end-user security

- Automation –> IT automation, developer cloud, and multi-tenant infrastructure

- Application continuity –> Disaster recovery, hybrid cloud, and metro pooling

Describe ESXi host vmnic requirements

Differentiate virtual to physical switch connection methods

Compare and contrast VMkernel networking scenarios