Six months after my first Silicon Valley experience at Cloud Field Day 2, I’ve gathered some useful information about trends, news and hints in the cloud strategies of recent years. While many companies have already adopted public cloud environments for their IT services or as a way to start new businesses, a new trend is shifting the focus to a different perspective: being more dynamic in moving data across cloud systems (on-premises included).

Could data and services be moved back?

When it comes to cloud adoption, there is sometimes a hidden break point that changes the whole picture: the moment the cost-benefit ratio suddenly tips toward cost. Almost all cloud providers offer every kind of tool and support to move workloads into the cloud easily, without losing time or data. But what about the ability to move back to on-premises infrastructure? And what does that operation cost?
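
To put a rough number on the time and cost of moving data out, here is a back-of-the-envelope sketch. It assumes decimal units (1 TB = 10^12 bytes, 1 Gbps = 10^9 bit/s) and a flat, hypothetical price per GB; real egress price lists are usually tiered and vary by provider and region, so the rate below is only a placeholder.

```python
# Back-of-the-envelope estimate for moving data out of a cloud provider.
# ASSUMPTION: flat, hypothetical egress price; real price lists are tiered.

SECONDS_PER_DAY = 86_400


def transfer_days(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days needed to copy data_tb terabytes (10**12 bytes each) over a
    link_gbps link (10**9 bit/s). efficiency < 1.0 models protocol
    overhead or a link shared with production traffic."""
    bits = data_tb * 10**12 * 8
    seconds = bits / (link_gbps * 10**9 * efficiency)
    return seconds / SECONDS_PER_DAY


def egress_cost(data_tb: float, price_per_gb: float) -> float:
    """Flat-rate egress cost: terabytes converted to gigabytes times the rate."""
    return data_tb * 1_000 * price_per_gb


if __name__ == "__main__":
    print(f"{transfer_days(50, 1):.1f} days")        # fully used 1 Gbps link
    print(f"{transfer_days(50, 1, 0.5):.1f} days")   # only half the link available
    print(f"{egress_cost(50, 0.05):,.2f} (placeholder rate)")
```

For example, 50 TB over a fully used 1 Gbps link takes about 4.6 days, and twice that if only half the bandwidth is available; the made-up rate of 0.05 per GB puts the egress bill at about 2,500.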

Data held by a cloud provider is still a debated topic. While data governance and localization are now addressed, data availability is still a grey area, because the way I can use my data is bound to the availability of the cloud service.

(Source: https://cloudtweaks.com)

Another issue is how to consume data coming from another cloud provider. It sounds like the old story of “standards” in operating systems and applications, now re-proposed in the cloud context.

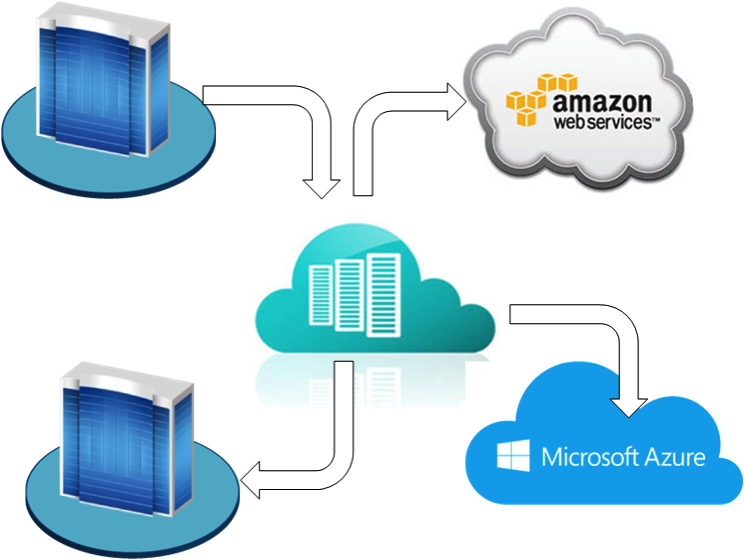

From the infrastructure perspective, not all cloud technologies provide fully supported two-way migration, which is what a real hybrid solution needs to bring VMs (or instances) back home or into another cloud provider. Integration and good architecture design can mitigate this risk, using data and cloud automation as the main card to move services on-premises or into another cloud provider.

The evolution of Platform as a Service into a more powerful and integrated environment to host and run your code is solving the problem of managing systems and infrastructure for a lot of developers. At the same time, the hard boundary it creates with the underlying infrastructure is what IT people complain about when working with devops. In fact, staying in the cloud with Platform and Software as a Service means that you own your data and your code, but you can’t take your service elsewhere because you don’t have the same environment.

Sysadmins and developers are still in charge of two fundamental choices: the environment (cloud provider with platform and services) and the architecture. Each must compensate for the shortcomings of the other, and both are involved in building a hybrid solution that meets costs and SLAs over the service lifetime. Data consumption models, in turn, must be able to present information regardless of where the data lives. What infrastructures, platforms and services could enable this flexibility?

A new role for storage systems

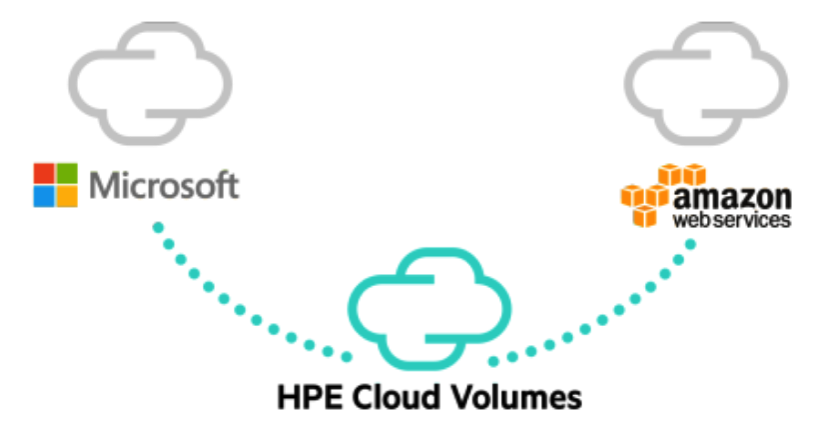

Storage vendors like HPE and NetApp are moving from the old sales pitch based on blocks, capacity and performance to complete solutions that are more focused on data: moving from on-premises infrastructure to public cloud while keeping the same behaviour means handling data in a more flexible way, ensuring availability and SLAs.

Analyzing data consumption from the infrastructure perspective, some changes must be applied to achieve this elastic model:

- The application must be resilient at the code level, and the platform must move in the same direction (in Italy this is still lacking, for cultural reasons).

- Data must be available and replicated across different cloud providers and platforms, regardless of the underlying infrastructure and geographic location.
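
The replication requirement can be sketched as "copy, then verify every copy". In this minimal Python sketch each provider is a hypothetical in-memory dict standing in for a bucket, container or share; the point is only the pattern of comparing SHA-256 digests of every replica against the source, so a missing or diverging copy is detected regardless of where it lives.

```python
import hashlib

# ASSUMPTION: each "provider" is a plain dict of key -> bytes, standing in
# for an S3 bucket, a blob container, an on-premises share, and so on.
Store = dict


def digest(data: bytes) -> str:
    """Content fingerprint used to compare replicas."""
    return hashlib.sha256(data).hexdigest()


def replicate(key: str, source: Store, targets: dict) -> None:
    """Copy one object from the source to every target store."""
    for store in targets.values():
        store[key] = source[key]


def stale_replicas(key: str, source: Store, targets: dict) -> list:
    """Names of targets whose copy is missing or differs from the source."""
    expected = digest(source[key])
    return sorted(
        name
        for name, store in targets.items()
        if key not in store or digest(store[key]) != expected
    )


if __name__ == "__main__":
    source = {"orders.csv": b"id,total\n1,10\n"}
    targets = {"aws": {}, "azure": {}, "onprem": {}}
    replicate("orders.csv", source, targets)
    print(stale_replicas("orders.csv", source, targets))  # no stale copies
```

A periodic job running `stale_replicas` and re-calling `replicate` for the names it returns is one simple way to keep copies aligned; real storage platforms implement this far more efficiently, but the check is the same idea.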

Scality’s experience shows how to make a huge amount of data available using a bunch of commodity x86 hardware and re-using a consumption model based on the S3 protocol: a reliable way to store data across data centers and in AWS. The result: by writing code against the standardized S3 API, you can move your application environment between cloud providers without rewriting it.
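
A minimal sketch of what "without rewriting the code" can look like: the application function only talks to the S3 API, and the only thing that changes between targets is the endpoint handed to the client. It assumes the boto3 SDK, whose `boto3.client` accepts `service_name`, `region_name` and `endpoint_url`; the on-premises URL, bucket and key names are hypothetical placeholders, and the target store must implement the S3 calls the application uses.

```python
# ASSUMPTION: hypothetical endpoint map. Only the endpoint differs between
# targets; the on-premises URL stands in for any S3-compatible store.
S3_ENDPOINTS = {
    "aws": None,  # None lets the SDK resolve the regional AWS endpoint itself
    "onprem": "https://s3.storage.example.internal",
}


def s3_client_kwargs(target: str, region: str = "us-east-1") -> dict:
    """Keyword arguments for boto3.client(**kwargs) for the chosen target."""
    kwargs = {"service_name": "s3", "region_name": region}
    endpoint = S3_ENDPOINTS[target]
    if endpoint is not None:
        kwargs["endpoint_url"] = endpoint
    return kwargs


def upload_report(s3, bucket: str, key: str, body: bytes) -> None:
    """Application code: identical whichever endpoint the client points to."""
    s3.put_object(Bucket=bucket, Key=key, Body=body)


if __name__ == "__main__":
    print(s3_client_kwargs("aws"))
    print(s3_client_kwargs("onprem"))
```

With boto3 installed and credentials configured, `upload_report(boto3.client(**s3_client_kwargs("onprem")), "reports", "q1.csv", data)` and the same call with `"aws"` differ only in configuration, which is the portability the S3 model offers.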

Back to the infrastructure perspective, HPE is doing something more “cloud integrated” with Cloud Volumes. Its reach from Azure to AWS, by way of on-premises storage, is the core feature of a hybrid storage approach that can handle and move data across cloud providers with a simple replication task. I’m curious to see what will happen with this technology in 2018.

Using backup solutions to lift and shift

An interesting way to reduce cloud lock-in is to take advantage of backup software to move data across cloud environments: this could become a new trend to reduce the risk of data unavailability, using cloud APIs to bring data into another “safe place”. And, perhaps unexpectedly, data in backup storage can also be used to restore services in another place (or, better, with another cloud provider).

Rubrik demonstrated the ability to back up and transfer data from a cloud instance to a local instance using the same solution: great for cross-cloud disaster recovery.

While the data is safe, services are still a challenge that automation can address. Here the winning card is a meticulous separation between OS, application, services and data: if the source environment is well organized, it is possible to trigger a series of automated steps to re-deploy a new production system with freshly restored data. In the same way, it is possible to test, restore or simply move environments across cloud providers.
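
The separation between OS, application, services and data can be sketched as a small data structure plus a plan generator. This is a hypothetical illustration, not any vendor's workflow: the `Environment` fields, image and snapshot names are made up, and each step is just a string that a real automation tool would turn into API calls. What it shows is that only the data comes from the backup; everything else is re-created on the target cloud.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """Hypothetical service description with each layer kept separate."""
    os_image: str              # base OS image/template available on the target
    app_package: str           # application artifact, independent of the OS
    services: tuple            # services to start, in dependency order
    data_snapshot: str         # backup/snapshot ID to restore from


def redeploy_plan(env: Environment, target_cloud: str) -> list:
    """Ordered steps to rebuild the service on target_cloud from a backup."""
    steps = [
        f"provision instance on {target_cloud} from image {env.os_image}",
        f"install application {env.app_package}",
        f"restore data from snapshot {env.data_snapshot}",
    ]
    steps += [f"start service {name}" for name in env.services]
    steps.append("run smoke tests")
    return steps


if __name__ == "__main__":
    shop = Environment("ubuntu-16.04", "shop-1.4.2.tar.gz", ("db", "api", "web"), "snap-0042")
    for step in redeploy_plan(shop, "azure"):
        print(step)
```

Because the plan is generated from the description rather than copied from the source machine, the same `Environment` can be replayed against a test environment, a restore target or a different provider.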

Devops in the era of cloud consumption

There are different points of view about the emerging role of devops: for some it is a mythical figure that doesn’t exist, while at the same time more and more companies are using this title to hire developer teams to build the latest generation of apps.

Personally, I believe in this role to the extent that new companies are approaching products and services with models and methods completely different from the past.

In fact, new applications require changes in roles and in the way code is produced and maintained: the development practices known as Continuous Integration and Continuous Deployment (Continuous Software Development) need resilient infrastructures and platforms as a baseline, plus a set of devops tools (like Kubernetes).
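
As an illustration of the "resilient platform as a baseline" idea, here is a sketch that builds a minimal Kubernetes `apps/v1` Deployment as a Python dict. Running several replicas with a readiness probe lets Kubernetes roll out a new version from a CI/CD pipeline while only sending traffic to pods that report ready. The app name, image and `/healthz` path are hypothetical; the application must actually expose such an endpoint.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3, port: int = 8080) -> dict:
    """Minimal Kubernetes apps/v1 Deployment with several replicas and a
    readiness probe. ASSUMPTION: the app serves a health check on /healthz."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            "readinessProbe": {
                                "httpGet": {"path": "/healthz", "port": port},
                                "periodSeconds": 10,
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("shop-api", "registry.example.com/shop-api:1.4.2"), indent=2))
```

The JSON output can be piped to `kubectl apply -f -`; a pipeline would typically bump only the image tag on each release and let the Deployment's default rolling update replace pods gradually.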

To accommodate this change, Software as a Service (or, better, management Software as a Service) and platforms play a fundamental role in integrating code, people and infrastructure.

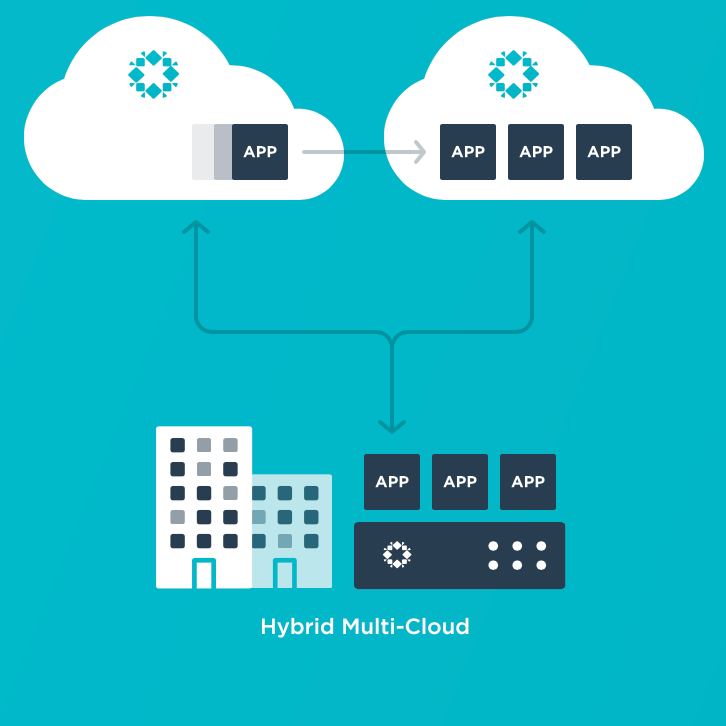

Nirmata is an interesting Software as a Service offering that closes the gap between developers and sysadmins (now cloud architects) in many scenarios: working from the same platform, both roles find the right space to collaborate easily and deploy both old and new applications.

Platform9 is a Software as a Service management platform that looks ahead with serverless deployment while keeping an eye on the past with hybrid infrastructure like OpenStack.

Accelerite proposes a solution to close the gap between enterprises and cloud providers. Based on Rovius Cloud, it deploys a cloud environment that makes local infrastructure ready for cloud expansion.

Cloud services more integrated and under control

Delivering services across private and public clouds requires the ability to integrate every “piece” with less effort, while also increasing visibility over the whole infrastructure regardless of who owns it.

Two interesting solutions were presented during Cloud Field Day: Gigamon and ServiceNow.

ServiceNow showed how to integrate everything regardless of where it lives, and how to implement those integrations in a simpler way. It is a great platform for enterprises to “connect” old and new IT assets while keeping an eye on the organization’s needs.

Alongside local infrastructure, the cloud networking visibility provided by Gigamon demonstrates that you can shed light on all traffic without limits, gaining the ability to make instant decisions and react promptly to changes.

Wrapping up

Moving into the cloud is still a process that deserves particular attention when comparing costs and benefits. While legacy infrastructure is rapidly moving into the cloud across many assets (infrastructure, platforms and software), the TCO savings can, in some cases, turn into higher overall costs over the long term.
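
Why savings can turn into higher costs over time is easiest to see with cumulative spend. The sketch below compares a pay-as-you-go cloud bill with an up-front on-premises investment plus lower running costs and finds the first month in which cumulative cloud spend overtakes on-premises spend. All figures are hypothetical, and the model deliberately ignores staff, depreciation, discounting and price changes.

```python
from typing import Optional


def break_even_month(
    cloud_monthly: int,
    onprem_upfront: int,
    onprem_monthly: int,
    horizon: int = 120,
) -> Optional[int]:
    """First month in which cumulative cloud spend exceeds cumulative
    on-premises spend, or None if it never does within `horizon` months.
    ASSUMPTION: flat monthly costs, no discounting or hardware refresh."""
    for month in range(1, horizon + 1):
        cloud_total = cloud_monthly * month
        onprem_total = onprem_upfront + onprem_monthly * month
        if cloud_total > onprem_total:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical: 10,000/month in the cloud vs 200,000 up front + 4,000/month on-premises.
    print(break_even_month(10_000, 200_000, 4_000))
```

With these made-up numbers the cloud is cheaper for almost three years, then becomes the more expensive option from month 34 (340,000 vs 336,000 cumulative); if the cloud's monthly cost is not higher than the on-premises running cost, the function returns `None`.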

At the same time, customers who chose the cloud in the past are looking for ways to lift and shift their data, gaining more governance and increasing resiliency and protection.

The new challenges are in the platform: the hybrid approach of technologies like containers and serverless is the new fuel for developing physical and virtual infrastructures with deeper integration between cloud providers.

Disclaimer: I was invited to Cloud Field Day 2 by Gestalt IT, who paid for travel, hotel, meals and transportation. I did not receive any compensation for this event, and no one obligated me to write any content for this blog or other publications. The contents of these blog posts represent my personal opinions about the technologies presented during this event.