Containers and Kubernetes have become the de facto solutions for developing and deploying cloud-native applications. While developers write code against an abstraction of the underlying systems, the infrastructure and its deployment and delivery models remain a challenge for operations and infrastructure teams.

Many cloud-native applications are born in the public cloud, but when it is time to bring them back to on-premises infrastructure, many projects are likely to fail because of design mistakes. Likewise, lift-and-shift migrations can be very risky and cause painful losses if application lifecycle pipelines are built without adopting a cloud abstraction layer.

This post starts a series focused on best practices to keep in mind, and apply, in order to consume computing resources efficiently with Kubernetes.

Rethink your infrastructure

Hybrid cloud means consuming computing resources from both public and private clouds. In fact, the “cloud” concept describes a way of consuming resources: a group of consumers requests infrastructure resources and expects them to be delivered according to three magic words: on demand, automatically, and securely.

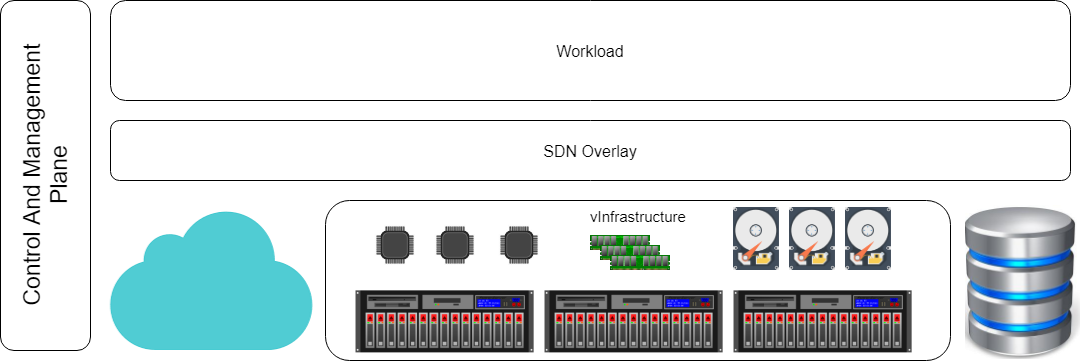

Kubernetes is one of the most important infrastructure consumers for delivering cloud-native applications. From the infrastructure perspective, a Kubernetes cluster is a set of servers that together expose a complete platform to deploy and orchestrate applications and containers. When a cloud-native application requires more resources, it is important to have a provisioning system that automatically deploys additional servers. Likewise, to avoid wasting unused resources, the cluster can be shrunk by decommissioning the nodes it no longer needs.
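To make the scale-out/scale-in loop concrete, here is a minimal sketch of the decision such a provisioning system might take. It assumes identical nodes, sizes the cluster on CPU requests only, and uses a hypothetical 70% utilization target and a floor of three nodes; `ClusterState`, `desired_nodes`, and `scaling_action` are illustrative names, not part of any real tool. Real components such as the Kubernetes Cluster Autoscaler work differently, for example by reacting to pods that cannot be scheduled.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusterState:
    nodes: int            # worker nodes currently in the cluster
    cpu_per_node: float   # allocatable vCPUs per node (assumes identical nodes)
    cpu_requested: float  # total vCPUs requested by running and pending workloads


def desired_nodes(state: ClusterState, target_utilization: float = 0.7,
                  min_nodes: int = 3) -> int:
    """Node count that keeps CPU utilization at or below the (hypothetical) target."""
    usable_per_node = state.cpu_per_node * target_utilization
    # Never shrink below a minimum size kept for availability (assumed value).
    return max(min_nodes, math.ceil(state.cpu_requested / usable_per_node))


def scaling_action(state: ClusterState, target_utilization: float = 0.7,
                   min_nodes: int = 3) -> str:
    """Decide whether the provisioning system should add or remove nodes."""
    target = desired_nodes(state, target_utilization, min_nodes)
    if target > state.nodes:
        return f"scale out: provision {target - state.nodes} node(s)"
    if target < state.nodes:
        return f"scale in: decommission {state.nodes - target} node(s)"
    return "no change"


# Hypothetical cluster: 4 nodes with 8 vCPUs each, 40 vCPUs requested.
print(scaling_action(ClusterState(nodes=4, cpu_per_node=8, cpu_requested=40)))
# → scale out: provision 4 node(s)
```

In practice a node selected for scale-in is drained first, so its workloads move elsewhere before the server is returned to the pool.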

This elasticity must extend to the networking and physical layers too. Adding nodes means adding:

- Network segments to allow inter-container communication

- Physical CPU, Memory, Storage, and Networking

- A larger environment footprint, which increases both the operational effort and the attack surface
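The first point, network segments, can be illustrated with per-node address planning. In Kubernetes, for example, the controller manager can assign each node its own pod CIDR carved from a cluster-wide range. The sketch below mimics that allocation with Python's standard `ipaddress` module; the `10.244.0.0/16` range, the `/24` per-node prefix, and the function names are assumptions chosen for illustration. It also shows why segments must be planned up front: the cluster range caps how many nodes you can ever add.

```python
import ipaddress


def allocate_node_segments(cluster_cidr: str, node_prefix: int,
                           node_names: list[str]) -> dict[str, ipaddress.IPv4Network]:
    """Give every node its own pod subnet carved from the cluster-wide range."""
    free_subnets = ipaddress.IPv4Network(cluster_cidr).subnets(new_prefix=node_prefix)
    allocation = {}
    for name in node_names:
        subnet = next(free_subnets, None)
        if subnet is None:
            # The cluster range is exhausted: no more nodes can join.
            raise RuntimeError(f"{cluster_cidr} exhausted: no segment left for {name}")
        allocation[name] = subnet
    return allocation


def max_nodes(cluster_cidr: str, node_prefix: int) -> int:
    """How many nodes the cluster range can serve before it runs out of segments."""
    return 2 ** (node_prefix - ipaddress.IPv4Network(cluster_cidr).prefixlen)


segments = allocate_node_segments("10.244.0.0/16", 24, ["node-1", "node-2", "node-3"])
for node, subnet in segments.items():
    print(node, subnet)  # node-1 10.244.0.0/24, node-2 10.244.1.0/24, ...
print("capacity:", max_nodes("10.244.0.0/16", 24), "nodes")  # → capacity: 256 nodes
```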

For these reasons, when it is time to rethink on-premises infrastructure, it is important to adopt some key components that really open the door to the cloud model:

- Hyper-converged solutions such as VMware vSAN, Nutanix, and many more

- Scale-out and backup storage such as Tintri, Cohesity, Rubrik, and more

- Software-defined networking and security layers, such as VMware NSX (best in class) and many others, like Nokia Nuage and Cisco

- Automation and orchestration tools such as VMware vRealize Automation (or vCloud Director if you’re a cloud provider), OpenStack, or custom solutions

- Operations tools such as vRealize Operations and other third-party tools

Time to scale out

What happens when the business makes further large demands on the infrastructure, but you have already reached its limits? If you have deployed all your projects using a silo model, your infrastructure will likely collapse soon, or the business will move all its workloads out of your environment and straight into the public cloud. This is a common mistake, and one that can be overcome without spending large sums of money, by breaking down cultural and organizational barriers instead.

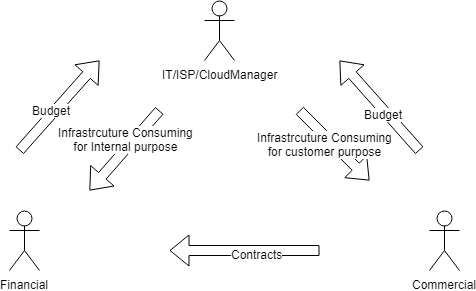

In fact, if you think cloud, the whole organization must become cloud, because every department is both a consumer and a provider: the business consumes resources and provides money, infrastructure departments consume money and provide resources, and finance departments consume money and provide money.

Skills are changing too, and during the transition period it is important to provide learning tools and on-the-job training.

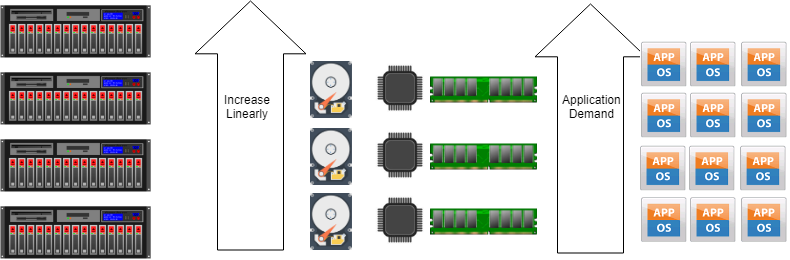

Back to the infrastructure: hyper-converged systems are the key to expanding all resources linearly. Every computational block brings CPU, memory, and storage, so scaling out means adding another block to the cluster. In traditional infrastructure, a single storage array acts as a brake on the scale-out process: capacity, performance, and maintenance can all hit the limits of the storage area network, and a large new infrastructure investment is not a winning choice today.
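Because every block adds CPU, memory, and storage together, sizing a hyper-converged cluster reduces to simple arithmetic: the scarcest resource decides how many blocks you need. The sketch below assumes identical blocks and one spare block to tolerate a host failure; the block specification and the workload demand are hypothetical numbers, not vendor sizing guidance.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_cores: int
    memory_gb: int
    storage_tb: float


def blocks_required(demand: Resources, block: Resources, spare_blocks: int = 1) -> int:
    """Smallest number of identical blocks covering every resource, plus spares."""
    per_resource = (
        math.ceil(demand.cpu_cores / block.cpu_cores),
        math.ceil(demand.memory_gb / block.memory_gb),
        math.ceil(demand.storage_tb / block.storage_tb),
    )
    # The scarcest resource decides the block count; the others scale along with it.
    return max(per_resource) + spare_blocks


block = Resources(cpu_cores=48, memory_gb=768, storage_tb=20.0)     # one hypothetical block
demand = Resources(cpu_cores=300, memory_gb=4000, storage_tb=90.0)  # hypothetical workload
print(blocks_required(demand, block))  # → 8 (7 blocks for CPU, plus 1 spare)
```

The trade-off of linear scaling shows up here: CPU drives the count to seven blocks, so memory and storage are over-provisioned as a side effect.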

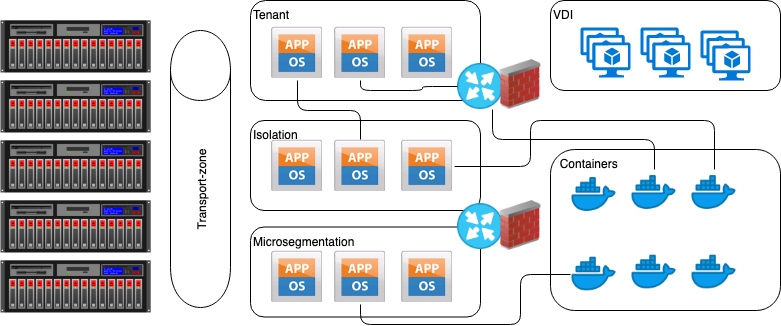

What about the network? If you try to keep all running systems under control by managing VLANs, physical cabling, and big firewalls, you will probably be overwhelmed, either by reacting to on-demand requests or by the huge number of scripts you must write to reach a sufficient degree of automation. On the security side, VMs and containers significantly increase the attack surface, and with it the effort needed to establish trust and ensure an adequate security level for modern applications.

Once again, the answer lies in software. We have already seen physical systems turn into virtual infrastructure; now, with software-defined networking, it is possible to automate and improve governance and security for every packet traveling across physical and virtual infrastructure.

Today the best in class remains VMware NSX, which with the NSX-T release aims to become a stand-alone SDN product, able to operate across physical and virtual infrastructures other than classic VMware vSphere.
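The per-packet governance that software-defined networking enables is usually expressed as micro-segmentation: a default-deny policy where only explicitly allowed flows between logical groups pass, regardless of VLANs or physical firewalls. The sketch below models that idea only; it is not the NSX rule engine or API, and the `Rule` type, group names, and ports are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    src_group: str  # logical security group of the sender (e.g. a tag or label)
    dst_group: str  # logical security group of the receiver
    port: int       # destination TCP port that is allowed


def is_allowed(src_group: str, dst_group: str, port: int, rules: list[Rule]) -> bool:
    """Default deny: a flow passes only if an explicit rule matches it."""
    return any(
        rule.src_group == src_group and rule.dst_group == dst_group and rule.port == port
        for rule in rules
    )


# Hypothetical three-tier application: web -> app -> db, nothing else.
RULES = [Rule("web", "app", 8080), Rule("app", "db", 5432)]

print(is_allowed("web", "app", 8080, RULES))  # → True
print(is_allowed("web", "db", 5432, RULES))   # → False: web may not reach the database
```

Because rules refer to groups rather than IP addresses or VLANs, a new VM or container that joins the `app` group inherits the policy without any change to the physical network.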

Similarly, a scale-out infrastructure will not perform well on traditional network topologies. The current trend is to simplify the transport layer with an L2/L3 leaf-spine design, which scales linearly without compromising bandwidth and removes the complexity introduced by spanning tree protocols. Here the only commandment is to provide a resilient transport and deploy multiple networks on top of it using an overlay mechanism.
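The linear scalability of leaf-spine comes from its regular wiring: in a two-tier fabric every leaf has one uplink to every spine, so any two servers on different leaves are reachable over a leaf-spine-leaf path and traffic can be spread across all spines. The sketch below computes the fabric's size limit and per-leaf oversubscription under that assumption; the switch port counts and speeds are hypothetical values for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafSpine:
    spines: int           # spine switches; each leaf has one uplink to every spine
    spine_ports: int      # ports per spine, each connected to one leaf
    leaf_down_ports: int  # server-facing ports per leaf
    leaf_down_gbps: int   # speed of each server-facing port
    leaf_up_gbps: int     # speed of each leaf-to-spine uplink

    def max_leaves(self) -> int:
        # Every leaf consumes one port on every spine, so spine size caps the leaf count.
        return self.spine_ports

    def max_server_ports(self) -> int:
        return self.max_leaves() * self.leaf_down_ports

    def oversubscription(self) -> float:
        # Ratio of server-facing bandwidth to uplink bandwidth on a single leaf.
        return (self.leaf_down_ports * self.leaf_down_gbps) / (self.spines * self.leaf_up_gbps)


fabric = LeafSpine(spines=4, spine_ports=32, leaf_down_ports=48,
                   leaf_down_gbps=25, leaf_up_gbps=100)
print(fabric.max_leaves(), fabric.max_server_ports(), fabric.oversubscription())
# → 32 1536 3.0
```

Adding leaves grows the number of server ports until the spine ports run out, while adding a spine lowers oversubscription (five spines give 2.4:1 here), provided each leaf has a free uplink for it.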

In the next post, we’ll dive into the cloud layer and finally the OS layer for containers and Kubernetes worker clusters. Stay tuned!