In my previous posts I talked about containers as an architecture born to improve Platform as a Service, where a developer can control every piece and make decisions about the application without coming into conflict with sysadmin rules. In a few words, containers are the architecture for Cloud Native development, where DevOps gains the freedom to develop, deploy and operate the application. But from the cloud perspective, Container as a Service itself requires a revolution in the way it is delivered, without missing important pieces such as security, multi-tenancy, monitoring…

On the journey to Cloud Native Applications, VMware is working to deploy a safe infrastructure where Dev and Ops people can find a more comfortable place to work. Until a few days ago, vSphere Integrated Containers was VMware’s only way to integrate a vSphere platform with the container architecture. But it seems that emerging technologies, especially in Industry 4.0, are changing the requirements: cloud services must be developed more rapidly and without caring about the underlying resources. This is the scenario that the big cloud providers have been covering over the last year with Serverless architecture.

In the meantime, VMware and Pivotal are working on delivering an on-premises platform for Function as a Service: a simpler way to deliver microservices, delegating availability, scalability, performance and security to the underlying infrastructure. Pivotal Container Service (PKS), announced during VMworld 2017 (Las Vegas), marks another milestone in the world of Cloud Native Applications. Let’s look at it in depth.

More on Serverless and Function as a Service

Before going deep inside PKS, it is important to get more familiar with the concepts of Serverless and Function as a Service.

From Wikipedia: Serverless computing is a cloud computing execution model in which the cloud provider dynamically manages the allocation of machine resources. Pricing is based on the actual amount of resources consumed by an application, rather than on pre-purchased units of capacity.

In simple words: you can deploy all the elements of your application up front and pay only when they are used.
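To give a feeling for the pay-per-use idea, here is a minimal arithmetic sketch in Python. The billing formula (invocations × duration × memory × unit price) and the price used in the usage note are my own assumptions for illustration; they are not the price list of any real provider.

```python
def pay_per_use_cost(invocations: int,
                     seconds_per_call: float,
                     memory_gb: float,
                     price_per_gb_second: float) -> float:
    """Cost of a function billed only for the resources it actually consumes.

    All parameters are hypothetical: real providers use their own units,
    rounding rules and free tiers.
    """
    consumed_gb_seconds = invocations * seconds_per_call * memory_gb
    return consumed_gb_seconds * price_per_gb_second
```

With an assumed price of 0.00002 per GB-second, 1000 calls of half a second each on 1 GB cost about 0.01, while a month without any call costs nothing. That is the difference from pre-purchased capacity.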

But in a wider view, the Serverless architecture is composed of:

- Runtimes: simple, stateless code execution that doesn’t care which server it runs on or how it scales in/out to provide a result in as little time as possible (the quality of this service can be measured in terms of response latency)

- Databases: an RDBMS that doesn’t care about the underlying system the database runs on or the amount of resources required to store and query the data.
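As an illustration of the Runtimes item, a stateless function could look like the following Python sketch. The `handle` name and the shape of the event and of the response are hypothetical choices of mine, not the contract of a specific FaaS platform.

```python
import json


def handle(event: dict) -> dict:
    """Stateless function: the result depends only on the incoming event.

    Nothing is kept in memory or on disk between two calls, so the platform
    is free to run each call on any server and in any number of copies.
    """
    readings = event.get("readings", [])
    if not readings:
        return {"status": 400, "body": json.dumps({"error": "no readings"})}
    average = sum(readings) / len(readings)
    return {"status": 200, "body": json.dumps({"average": average})}
```

Calling `handle({"readings": [1, 2, 3]})` twice gives the same answer both times, which is exactly what lets the platform scale this code in and out without asking the developer.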

Focusing on Runtimes only, the ability to provide new functions or implement existing ones is what Function as a Service is about. Again from Wikipedia:

Function as a service (FaaS) is a category of cloud computing services that provides a platform allowing customers to develop, run, and manage application functionalities without the complexity of building and maintaining the infrastructure typically associated with developing and launching an app.

Again in simple words: the stateless part (aka microservices) of the Serverless world.

But some infrastructure people could object to a few points that create misunderstandings about the real meaning of Serverless: under the hood, an elastic infrastructure can be realized only if a physical infrastructure is integrated with software automation that takes care of ephemeral deployment and scaling on request. What is not under “human” governance is the automatic deployment of an instance every time a “function” is called. Looking at the past, these seem to be the same issues that arose when containers were delivered in the cloud on top of IaaS. The term DevOps was coined for this reason: whoever develops on a container platform as a service is also involved in operations tasks such as resource provisioning, CI/CD, clustering, etc.
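To make the point about automation concrete, here is a minimal Python sketch of the kind of scaling decision such software takes without any human in the loop. The function name, the per-instance capacity and the instance cap are my own assumptions, not values taken from PKS or from any real platform.

```python
import math


def desired_instances(pending_requests: int,
                      requests_per_instance: int = 10,
                      max_instances: int = 5) -> int:
    """Decide how many ephemeral instances should be running right now.

    Hypothetical policy: scale to zero when nothing is pending, otherwise
    start enough instances to serve the queue, capped by what the physical
    infrastructure can offer.
    """
    if pending_requests <= 0:
        return 0  # nobody is calling the function: nothing runs
    needed = math.ceil(pending_requests / requests_per_instance)
    return min(max_instances, needed)
```

The cap is where the physical infrastructure shows up again: on-premises the ceiling is real hardware, so someone still has to size and operate it.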

The trend is that more and more software companies are literally outsourcing their sysadmin staff.

And the question is: are all DevOps people really able to replace the sysadmin during operations?

I can answer this question from two points of view:

- If a developer can work simply with all the cloud elements and the company feels safe and works well this way, it isn’t necessary to have a sysadmin behind deployment and operations, but choosing the right cloud provider, with strong security and tools, becomes mission critical.

- If a company is delivering services across private and public cloud, having an on-premises platform and a sysadmin who takes care of deployment, security and everything else concerning multiple infrastructures becomes the priority.

What happens in the world of Function as a Service? FaaS in a hybrid deployment is what Pivotal Container Service is able to do, bringing the security of an internal infrastructure together with the ability to scale functions across cloud providers.

PKS: Pivotal Container Service by VMware

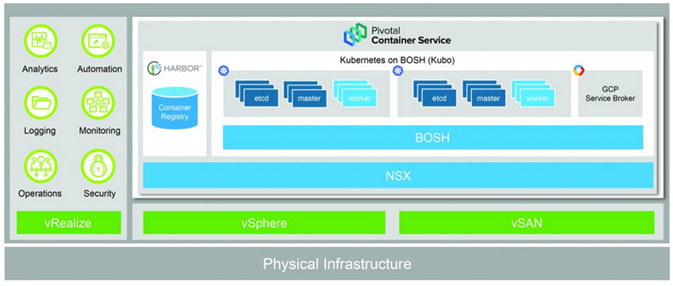

Starting from an open-source project called Kubo (https://github.com/cloudfoundry-incubator/kubo-release), a BOSH (http://bosh.io/) release for Kubernetes (https://kubernetes.io/), together with Harbor (a container registry), the idea behind PKS is to bring these PaaS elements on top of vSphere (and vSAN) and NSX (the cloud foundation) and build a serverless infrastructure that delivers code as functions and manages them during the application lifetime. In this case, support for building a multi-tenant environment is what is needed to realize an on-premises Function as a Service.

Looking at the element called GCP Service Broker (Google Cloud Platform), it is easy to understand how every function built on-premises could be scaled out to the public cloud (in this case Google).

Another key element is security: the integration with NSX is the added value of a vSphere environment. Unlike bare-metal or non-vSphere platforms, micro-segmentation applied to every container provides security by design, and dynamically, as the “attack surface” changes over time and with usage.

Obviously, in VMware’s house, the integration with the vRealize suite is the complementary piece that brings this platform into the enterprise context.

Initial availability is scheduled for Q4 through these channels:

- via channel (Pivotal and VMware)

- via Cloud Provider Program

- bundled with Dell EMC VxRail and VxRack

Don’t forget to try its open-source starting project, Kubo, here: https://pivotal.io/partners/kubo

More on PKS here: https://blogs.vmware.com/cloudnative/2017/08/29/vmware-pivotal-container-service/

During VMworld

There are plenty of use cases for FaaS and Serverless, but there is one that IMHO deserves more attention in Industry 4.0: the Internet of Things. In fact, in IoT there is a “chain” where devices generate data that is collected by a broker and then processed by “functions”, which become the source for further processing (like BI and analytics).
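The chain can be sketched in a few lines of Python. This is only a toy model under my own assumptions: an in-memory `Queue` plays the broker, the device names and values are invented, and `average_per_device` stands in for whatever “function” the platform would trigger.

```python
from collections import defaultdict
from queue import Queue
from statistics import mean


def publish(broker: Queue, device_id: str, value: float) -> None:
    """An IoT device pushes one reading to the broker."""
    broker.put({"device": device_id, "value": value})


def average_per_device(broker: Queue) -> dict:
    """The 'function': drain the broker and aggregate readings per device.

    Its output is what the next stage (BI, analytics) would consume.
    """
    samples = defaultdict(list)
    while not broker.empty():
        message = broker.get()
        samples[message["device"]].append(message["value"])
    return {device: mean(values) for device, values in samples.items()}


def run_demo() -> dict:
    """Wire the chain together: devices -> broker -> function."""
    broker = Queue()
    for device_id, value in [("sensor-a", 20.0), ("sensor-a", 22.0), ("sensor-b", 19.0)]:
        publish(broker, device_id, value)
    return average_per_device(broker)
```

In a real deployment the broker would be a messaging service and the function would be started by the platform when messages arrive, but the shape of the chain stays the same.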

In an on-premises scenario it is important to highlight the underlying infrastructure: delivering FaaS with nearly the same behavior as the public cloud (except for the huge amount of available resources) is a big expense in money and resources because, beyond scale-out hardware, it requires a robust infrastructure with sufficient security at scale. Without the right security, IoT could be a failure.

Just check the keynote video here:

https://www.youtube.com/watch?v=to7ADWK86AU

(You’ll find this topic at around 1h 26m)

Don’t forget to check the official news from the VMware Office of the CTO blog: https://octo.vmware.com/serverless-faas-cloud-services-vsphere-yes/

Other FaaS and Serverless projects

Thanks to Julian Wood, who briefly introduced me to the concepts of Serverless during Cloud Field Day 2, I started my journey into this world, focusing my attention on these projects:

Today Serverless is used by new companies 100% focused on application development. IT companies that need integration and application scale-out are still worried about adopting it: because of security, if the service runs completely outside the company’s EDP, and because of technical issues, if FaaS runs completely on-premises. For this reason VMware seems to take a middle position, as happened with the Software-Defined Datacenter: placing and moving “functions” across private and public cloud, abstracting the platform without reinventing the wheel, and reusing the templates and development models already used in the cloud. So I suggest that every CTO give this platform a try when their developers are ready for functions or when moving to IoT integration.

![image_thumb2](https://blog.linoproject.net/wp-content/uploads/2017/10/image_thumb21_thumb.png)