I’m writing this post to highlight some advanced vSphere networking topics from my VCP6 study. The sources for this post are:

- vSphere 6 Official Docs (https://pubs.vmware.com/vsphere-60/index.jsp#com.vmware.vsphere.networking.doc/GUID-35B40B0B-0C13-43B2-BC85-18C9C91BE2D4.html)

- KB 2051826 https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2051826

- KB 2034277 https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2034277

- Link aggregation and LACP Basics https://www.thomas-krenn.com/en/wiki/Link_Aggregation_and_LACP_basics

Networking policies

You apply networking policies differently on vSphere Standard Switches and vSphere Distributed Switches:

- vSphere Standard Network Switch (vSS)

- Entire Switch

- Standard Port Group (pg)

- vSphere Distributed Network Switch (vDS)

- Distributed Port Group (dvpg)

- Distributed Ports (dp)

- Uplink PortGroup (upg)

- Single Uplink port (up)

The following policies are available:

- Teaming and Failover (vSS and vDS): use NIC teaming to connect a virtual switch to multiple physical NICs on a host to increase the network bandwidth of the switch and to provide redundancy

- Load Balancing Policy: determines how network traffic is distributed between the network adapters in a NIC team

- Network Failure Detection Policy:

- Link status only: detects failures, such as removed cables and physical switch power failures. However, link status does not detect the following configuration errors:

- Physical switch port that is blocked by spanning tree or is misconfigured to the wrong VLAN

- Pulled cable that connects a physical switch to another networking device, for example, an upstream switch

- Beacon probing: sends out and listens for Ethernet broadcast frames (beacon probes) on all physical NICs in a team to detect link failure. It is most useful for detecting failures in the physical switch closest to the ESXi host when the failure does not cause a link-down event for the host. NOTE: Use beacon probing with three or more NICs in a team, because only then can ESXi identify which single adapter has failed

- Failback Policy

- Notify Switches Policy: determines how the ESXi host communicates failover events to the physical switch
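The beacon probing note above (three or more NICs) can be illustrated with a small sketch. This is a simplified model, not how ESXi implements beacon probing: it assumes a beacon is delivered only when both the sending and the receiving NIC have working paths, and the function names `simulate` and `suspects` are made up for this example.

```python
from itertools import permutations

def simulate(nics, failed):
    """Return the (sender, receiver) pairs whose beacon arrives.

    Assumption: a beacon is delivered only between two NICs that both work.
    """
    return {(s, r) for s, r in permutations(nics, 2) if failed not in (s, r)}

def suspects(nics, received):
    """NICs that took part in no successful beacon exchange."""
    healthy = {nic for pair in received for nic in pair}
    return sorted(set(nics) - healthy)

two = ["vmnic0", "vmnic1"]
three = ["vmnic0", "vmnic1", "vmnic2"]

# Two NICs: no beacon arrives at all, so both NICs look equally suspicious.
print(suspects(two, simulate(two, "vmnic1")))      # ['vmnic0', 'vmnic1']
# Three NICs: the two healthy NICs still see each other, isolating vmnic1.
print(suspects(three, simulate(three, "vmnic1")))  # ['vmnic1']
```

With two NICs the host cannot tell which side of the lost beacon is broken; the third NIC acts as a tie-breaker.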

- Load Balancing Algorithms Available for Virtual Switches:

- Route Based on Originating Virtual Port: the virtual switch selects uplinks based on the virtual machine port IDs on the vSphere Standard Switch or vSphere Distributed Switch

- Advantages: even distribution of the traffic, low resource consumption, no changes required on the physical switch

- Disadvantages: the virtual switch is not aware of the traffic load on the uplinks, and the bandwidth available to a virtual machine is limited to the speed of the vmnic associated with its port ID.

- Route Based on Source MAC Hash: The virtual switch selects an uplink for a virtual machine based on the virtual machine MAC address

- Advantages: more even distribution than Route Based on Originating Virtual Port, a virtual machine always uses the same uplink because its MAC address is static, no changes required on the physical switch

- Disadvantages: the bandwidth available to a virtual machine is limited to the speed of the vmnic associated with its MAC address, higher resource consumption than Route Based on Originating Virtual Port, the virtual switch is not aware of the load of the uplinks

- Route Based on IP Hash: the virtual switch selects uplinks for virtual machines based on the source and destination IP address of each packet. To ensure that IP hash load balancing works correctly, you must have an EtherChannel configured on the physical switch.

- Limitations and Configuration Requirements:

- ESXi hosts support IP hash teaming on a single physical switch or stacked switches

- ESXi hosts support only 802.3ad link aggregation in Static mode

- must use Link Status Only as network failure detection with IP hash load balancing

- must set all uplinks from the team in the Active failover list

- The number of ports in the Etherchannel must be same as the number of uplinks in the team

- Advantages: A more even distribution of the load compared to Route Based on Originating Virtual Port and Route Based on Source MAC Hash, higher throughput for virtual machines that communicate with multiple IP addresses

- Disadvantages: Highest resource consumption compared to the other load balancing algorithms, virtual switch is not aware of the actual load of the uplinks, Requires changes on the physical network, complex to troubleshoot

- Route Based on Physical NIC Load: builds on Route Based on Originating Virtual Port, but the virtual switch also checks the actual load of the uplinks and takes steps to reduce it on overloaded uplinks. This policy is available only on a vDS.

- Advantages: low resource consumption because the distributed switch calculates uplinks for virtual machines only once and checking the load of the uplinks has minimal impact, the distributed switch is aware of the load of the uplinks and takes care to reduce it if needed, no changes required on the physical switch

- Disadvantages: The bandwidth that is available to virtual machines is limited to the uplinks that are connected to the distributed switch

- Use Explicit Failover Order
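To make the difference between the first three algorithms concrete, here is a rough sketch of how an uplink could be chosen in each case. The modulo and XOR arithmetic is a simplification chosen for illustration; it does not claim to reproduce the exact hash that ESXi computes, and the function names are hypothetical.

```python
import ipaddress

def by_port_id(port_id, uplinks):
    """Originating virtual port: one fixed uplink per virtual port."""
    return uplinks[port_id % len(uplinks)]

def by_source_mac(mac, uplinks):
    """Source MAC hash (simplified here to the last octet of the MAC)."""
    last_octet = int(mac.split(":")[-1], 16)
    return uplinks[last_octet % len(uplinks)]

def by_ip_hash(src_ip, dst_ip, uplinks):
    """IP hash (simplified): XOR of source and destination, per packet."""
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return uplinks[(src ^ dst) % len(uplinks)]

uplinks = ["vmnic0", "vmnic1"]

print(by_port_id(7, uplinks))                         # vmnic1
print(by_source_mac("00:50:56:aa:bb:05", uplinks))    # vmnic1
# Same VM, two destinations: IP hash can spread the flows over both uplinks.
print(by_ip_hash("10.0.0.10", "10.0.0.20", uplinks))  # vmnic0
print(by_ip_hash("10.0.0.10", "10.0.0.21", uplinks))  # vmnic1
```

The port ID and MAC variants pin a virtual machine to one uplink, which is why its bandwidth is capped at the speed of that vmnic. Only the IP hash variant depends on the destination, which is also why it needs a matching EtherChannel on the physical switch.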

- Security (vSS and vDS and also dvpg, pg, dp)

- Promiscuous Mode: if set to Reject, a virtual machine adapter receives only the frames that are addressed to it

- MAC Address Changes: if set to Reject and the guest OS changes the effective MAC address of the virtual machine to a value that is different from the MAC address of the VM network adapter, the switch drops all inbound frames to the adapter

- Forged Transmits: if set to Reject, the switch drops any outbound frame from a virtual machine adapter with a source MAC address that is different from the one in the .vmx configuration file
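The two MAC-related security settings can be summarized as a pair of checks, one for each traffic direction. This is only a sketch of the rules as described above; the class and function names are invented for the example and are not part of any VMware API.

```python
from dataclasses import dataclass

@dataclass
class SecurityPolicy:
    accept_mac_changes: bool       # False means Reject
    accept_forged_transmits: bool  # False means Reject

def same_mac(a, b):
    return a.lower() == b.lower()

def inbound_allowed(policy, vmx_mac, effective_mac):
    """MAC address changes: inbound frames are dropped when the guest has
    changed its effective MAC and the policy is Reject."""
    return policy.accept_mac_changes or same_mac(vmx_mac, effective_mac)

def outbound_allowed(policy, vmx_mac, frame_source_mac):
    """Forged transmits: outbound frames are dropped when their source MAC
    differs from the MAC in the .vmx file and the policy is Reject."""
    return policy.accept_forged_transmits or same_mac(vmx_mac, frame_source_mac)

strict = SecurityPolicy(accept_mac_changes=False, accept_forged_transmits=False)
vmx = "00:50:56:aa:bb:01"

print(inbound_allowed(strict, vmx, "00:50:56:AA:BB:01"))   # True
print(inbound_allowed(strict, vmx, "02:00:00:00:00:99"))   # False
print(outbound_allowed(strict, vmx, "02:00:00:00:00:99"))  # False
```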

- Traffic Shaping (outbound only on vSS; inbound and outbound on vDS)

- Status (enabled/disabled)

- Average Bandwidth: number of bits per second to allow across a port, averaged over time

- Peak Bandwidth: The maximum number of bits per second to allow across a port when it is sending a burst of traffic

- Burst Size: The maximum number of bytes to allow in a burst. If this parameter is set, a port might gain a burst bonus when it does not use all its allocated bandwidth
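The interplay of average bandwidth, peak bandwidth and burst size is easier to see with numbers. The sketch below uses a generic token-bucket style model with one-second ticks; this is an assumption made for illustration and not a description of the actual ESXi shaper. The function name `shape` and the example values are hypothetical.

```python
def shape(offered_bits, average_bps, peak_bps, burst_bytes):
    """Return the bits sent in each one-second tick.

    Assumed model: unused average bandwidth accumulates as a burst bonus
    (capped at burst_bytes); a port may exceed the average, up to the peak,
    only while it still has bonus left.
    """
    capacity = burst_bytes * 8
    bonus = capacity  # assume the port was idle and starts with a full bonus
    sent = []
    for offered in offered_bits:
        allowed = min(peak_bps, average_bps + bonus)
        tx = min(offered, allowed)
        # Sending below the average refills the bonus, sending above drains it.
        bonus = min(capacity, max(0, bonus + average_bps - tx))
        sent.append(tx)
    return sent

# average 100 bit/s, peak 200 bit/s, burst size 25 bytes (200 bits)
print(shape([300, 300, 300, 0, 300], 100, 200, 25))
# [200, 200, 100, 0, 200]
```

In this model the port bursts at the peak rate for two seconds, falls back to the average once the bonus is spent, and regains part of the bonus after an idle second.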

- VLAN (vSS and vDS) with these options:

- None (don’t use VLAN tagging)

- VLAN 1-4094 for virtual switch tagging (VST)

- VLAN Trunking for virtual guest tagging (VGT)

- Private VLAN (only dvpg or dp)
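On a standard port group the tagging mode follows from the VLAN ID field: 0 means no tagging, 1 to 4094 means VST, and 4095 enables VGT. A minimal sketch of that mapping (the function name is made up; on a vDS, VGT is configured with a VLAN trunk range instead, which this example does not cover):

```python
def tagging_mode(vlan_id):
    """Map a standard port group VLAN ID to its tagging mode."""
    if vlan_id == 0:
        return "None"  # frames leave the virtual switch untagged
    if 1 <= vlan_id <= 4094:
        return "VST"   # the virtual switch tags and untags frames
    if vlan_id == 4095:
        return "VGT"   # tags are passed through to the guest OS
    raise ValueError(f"invalid VLAN ID: {vlan_id}")

for vlan in (0, 100, 4095):
    print(vlan, tagging_mode(vlan))
```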

- Monitoring (vDS only): enable or disable NetFlow monitoring on a dvpg or dp

- Traffic Filtering and Marking (vDS only): in a vSphere distributed switch 5.5 and later, by using the traffic filtering and marking policy, you can protect the virtual network from unwanted traffic and security attacks or apply a QoS tag to a certain type of traffic. You could enable this feature on:

- dvpg or uplink port group

- dp or uplink ports

- Resource Allocation (vDS only): The Resource Allocation policy allows you to associate a distributed port or port group with a user-created network resource pool.

- on a dvpg these are the requirements:

- Enable NIOC

- Create and configure network resource pool

- on a dp these are the requirements:

- Enable NIOC

- NIOC version 2

- Create and configure network resource pool

- enable port-level overrides for resource allocation policy

- Port Blocking (vDS only): on a dvpg, dp and uplink ports.

To apply different policies to individual distributed ports, you configure per-port overriding of the policies that are set at the port group level. You can also enable the reset of any per-port configuration when a distributed port disconnects from a virtual machine. The available options under dvpg, Settings, Advanced are:

- Configure reset at disconnect

- Override port policies

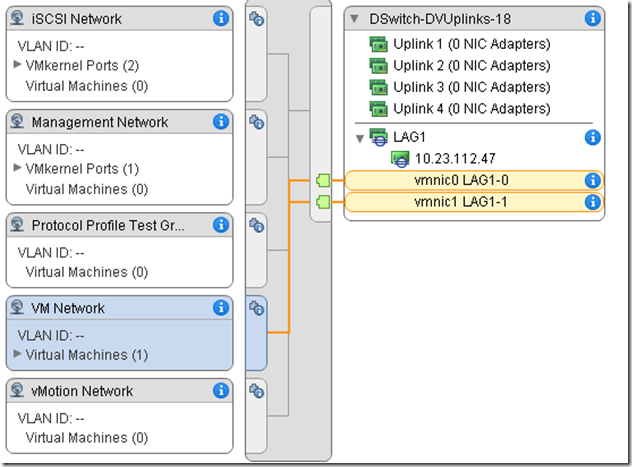

Enhanced LACP Support

vSphere 6 offers enhanced LACP support on the vSphere Distributed Switch: you connect each host to the physical switch through link aggregation groups (LAGs) of uplinks, and an LACP hashing algorithm provides load balancing and failover.

- This feature requires ESXi 5.5 or later, vCenter Server 5.5 or later, and a distributed switch of version 5.5 or later

- A distributed switch is a prerequisite (available only with the Enterprise Plus license)

- When you create a LAG on a distributed switch, a LAG object is also created on the proxy switch of every host that is connected to the distributed switch

- You can create up to 64 LAGs on a distributed switch. A host can support up to 32 LAGs

On the physical switch, for each host on which you want to use LACP, you must create a separate LACP port channel that meets these requirements:

- The number of ports in the LACP port channel must be equal to the number of physical NICs that you want to group on the host.

- The hashing algorithm of the LACP port channel on the physical switch must be the same as the hashing algorithm that is configured to the LAG on the distributed switch. Available Options:

- Source and destination IP address and VLAN

- Source and destination IP address, TCP/UDP port and VLAN

- Source and destination MAC address

- Source and destination TCP/UDP Port

- Source Port ID

- VLAN

- All physical NICs that you want to connect to the LACP port channel must be configured with the same speed and duplex
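The three physical switch requirements lend themselves to a quick pre-flight check. The sketch below works on plain values that you would collect by hand from the switch and the host; it does not talk to vCenter or to any switch, and all names in it are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PhysicalNic:
    name: str
    speed_mbps: int
    full_duplex: bool

def port_channel_problems(channel_ports, channel_hash, lag_hash, nics):
    """Return a list of mismatches between an LACP port channel and a LAG."""
    problems = []
    if channel_ports != len(nics):
        problems.append("port count differs from the number of physical NICs")
    if channel_hash != lag_hash:
        problems.append("hashing algorithm differs between switch and LAG")
    if len({(n.speed_mbps, n.full_duplex) for n in nics}) > 1:
        problems.append("physical NICs differ in speed or duplex")
    return problems

nics = [PhysicalNic("vmnic2", 10000, True), PhysicalNic("vmnic3", 1000, True)]
print(port_channel_problems(2, "src-dst-ip", "src-dst-ip", nics))
# ['physical NICs differ in speed or duplex']
```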

Convert to the Enhanced LACP Support on a vSphere Distributed Switch

After upgrading a vSphere Distributed Switch from version 5.1 to version 5.5 or 6.0, you can convert to the enhanced LACP support to create multiple LAGs on the distributed switch. Prerequisites:

- Verify that the vSphere Distributed Switch is version 5.5 or 6.0.

- Verify that none of the distributed port groups permit overriding their uplink teaming policy on individual ports.

- If you convert from an existing LACP configuration, verify that only one uplink port group exists on the distributed switch

- Verify that hosts that participate in the distributed switch are connected and responding

- Verify that you have these privileges:

- dvPort group.Modify on the distributed port groups on the switch

- Host.Configuration.Modify privilege on the hosts on the distributed switch

Configuration for Distributed Port Groups

To handle the network traffic of distributed port groups by using a LAG, you assign physical NICs to the LAG ports and set the LAG as active in the teaming and failover order of distributed port groups:

- Active –> Single LAG (use either one active LAG or multiple standalone uplinks to handle the traffic of distributed port groups. You cannot configure multiple active LAGs or mix active LAGs and standalone uplinks)

- Standby –> Empty (Having an active LAG and standby uplinks and the reverse is not supported. Having a LAG and another standby LAG is not supported)

- Unused –> All standalone uplinks and other LAGs if any
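The rules for the teaming and failover order can be written down as a small validator. This is a sketch of the constraints listed above only; the function name and the way uplinks and LAGs are represented (plain strings) are assumptions for the example.

```python
def failover_order_problems(active, standby, lags):
    """Check a failover order against the LAG rules.

    active, standby: lists of uplink or LAG names
    lags: the set of names that are LAGs (everything else is standalone)
    """
    active_lags = [u for u in active if u in lags]
    problems = []
    if len(active_lags) > 1:
        problems.append("more than one active LAG")
    if active_lags and len(active_lags) < len(active):
        problems.append("active LAG mixed with standalone uplinks")
    if active_lags and standby:
        problems.append("standby list must be empty when a LAG is active")
    if any(u in lags for u in standby):
        problems.append("a LAG in standby is not a supported steady state")
    return problems

lags = {"lag1"}
print(failover_order_problems(["lag1"], [], lags))              # []
print(failover_order_problems(["lag1", "Uplink 1"], [], lags))
# ['active LAG mixed with standalone uplinks']
```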

Configure LAG to Handle Traffic for dvs

Newly created LAGs do not have physical NICs assigned to their ports and are unused in the teaming and failover order of distributed port groups. To handle the network traffic of distributed port groups by using a LAG, you must migrate the traffic from standalone uplinks to the LAG. Prerequisites:

- a separate LACP port channel exists on the physical switch

- vSphere Distributed Switch version >= 5.5 (or 6.0)

- enhanced LACP is supported on the distributed switch

Steps:

- Create a LAG in dvs (DVS –> Manage –> Settings –> LACP)

- Set LAG as Standby in Teaming Failover Order in all distributed Port Groups

- Assign Physical NIC to LAG. Prerequisites:

- Verify that either all LAG ports or the corresponding LACP-enabled ports on the physical switch are in active LACP negotiating mode.

- Verify that the physical NICs that you want to assign to the LAG ports have the same speed and are configured at full duplex.

- Set LAG as Active in Failover Order in the Distributed Port Group
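The migration steps can be followed as a sequence of teaming and failover states. The sketch below only models the failover order after each step, under the assumption that the standalone uplinks stay active until the last step; it does not call any vSphere API, and the names are illustrative.

```python
def migration_states(standalone_uplinks, lag):
    """Yield (step, failover_order) pairs for a standalone-to-LAG migration."""
    uplinks = list(standalone_uplinks)
    # A newly created LAG starts as unused.
    yield "LAG created", {"active": uplinks, "standby": [], "unused": [lag]}
    # Intermediate state used only while migrating.
    yield "LAG set as standby", {"active": uplinks, "standby": [lag], "unused": []}
    # Moving the physical NICs does not change the failover order itself.
    yield "physical NICs assigned to LAG ports", {"active": uplinks, "standby": [lag], "unused": []}
    # Final state: the LAG is the only active entry.
    yield "LAG set as active", {"active": [lag], "standby": [], "unused": uplinks}

for step, order in migration_states(["Uplink 1", "Uplink 2"], "lag1"):
    print(f"{step}: {order}")
```

Going through standby first means traffic keeps flowing over the standalone uplinks until the LAG ports have physical NICs behind them.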

LACP negotiating mode for the uplink port group:

- Active: (all ports active) The uplink ports initiate negotiations with the LACP-enabled ports on the physical switch by sending LACP packets

- Passive: (all ports passive) They respond to LACP packets that they receive but do not initiate LACP negotiation
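Since passive ports only answer and never initiate, at least one side of the link has to be in active mode for LACP negotiation to start. A minimal sketch of that rule (the function name is hypothetical):

```python
def lacp_negotiates(lag_mode, switch_mode):
    """Return True if LACP negotiation can start between the two sides."""
    valid = {"active", "passive"}
    if lag_mode not in valid or switch_mode not in valid:
        raise ValueError("mode must be 'active' or 'passive'")
    # Two passive sides both wait for LACP packets that never arrive.
    return "active" in (lag_mode, switch_mode)

for lag_mode in ("active", "passive"):
    for switch_mode in ("active", "passive"):
        print(lag_mode, switch_mode, lacp_negotiates(lag_mode, switch_mode))
```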

LACP 5.1 Support

It is possible to enable LACP support on an uplink port group for vSphere Distributed Switches 5.1, and for switches upgraded to 5.5 or 6.0 that do not have the enhanced LACP support. Prerequisites:

- a separate LACP port channel exists on the physical switch for each host where you want to use LACP.

- distributed port groups have their load balancing policy set to IP hash

- LACP port channel on the physical switch is configured with IP hash load balancing

- distributed port groups have network failure detection policy set to link status only

- distributed port groups have all uplinks set to active in their teaming and failover order

- all physical NICs that are connected to the uplinks have the same speed and are configured at full duplex
