Depending on your licensing, it can be useful to know how to migrate virtual networking from Standard to Distributed vSwitches and vice versa. When it comes to virtual networking, a few topics always worry system and network administrators who have to decide how to combine physical and virtual networking. These are the points to demystify in order to use virtual switches safely and consciously:

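- A distributed virtual switch does not need vCenter to be available for data to flow between a VM and the vSwitch: vCenter is the management plane, while forwarding happens on each host. Unfortunately I still hear this misconception a lot, even from people running vSphere later than 4.0.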

- vSphere 4.1 is outdated (really outdated!), and upgrading to a newer version no longer means paying extra for vRAM entitlements, as it did with vSphere 5.0. vSphere 5.5 was the best choice for deploying production infrastructure about three years ago; now it is used only as a stepping stone toward 6.x. For these reasons, migrate to the latest available "production" version to gain the new features.

- A distributed virtual switch enables advanced features and the ability to install VIB packages, e.g. VXLAN and the Nexus 1000V (the Nexus VIB will be discontinued in 2018).

- Virtual switches bring the best of a physical switch without creating loops or having to run the Spanning Tree Protocol, because a vSwitch never forwards frames received on one uplink out of another uplink. For this reason, tell your network admin: keep calm and let the VMware admin do his work!

- Virtual infrastructures are network-intensive even if production traffic stays under 1 Gb/s. This is because infrastructure traffic such as vMotion and backup needs as much bandwidth as possible to complete operational tasks. For this reason, never share your infrastructure network with other services: use a dedicated, redundant (2n) pair of top-of-rack switches with 802.1Q VLAN tagging.

- In some scenarios, if you feel comfortable using NSX, you could ask your network admin for only a few VLANs (management, DMZ and services) with an MTU of at least 1600 bytes (jumbo frames are even better), because VXLAN encapsulation adds overhead to every frame. All other network deployments and operations can then be handled inside the vSphere environment using VXLAN, DLR and FLR (more on this blog, stay tuned!).
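If you go down the NSX/VXLAN route, it can be handy to check the MTU of your distributed switches from PowerCLI. The following is a minimal sketch, assuming the VMware.VimAutomation.Vds module is loaded and a Connect-VIServer session is open. The function name Test-VDSwitchMtu and its parameters are hypothetical; only Get-VDSwitch and Set-VDSwitch are real PowerCLI cmdlets. It changes nothing unless -Fix is passed.

```powershell
# Hypothetical helper (not part of PowerCLI): lists distributed switches whose MTU
# is below the minimum needed for VXLAN transport and, only with -Fix, raises it.
# Assumes an open Connect-VIServer session and the VMware.VimAutomation.Vds module.
function Test-VDSwitchMtu {
    param(
        [int]$MinimumMtu = 1600,   # VXLAN needs at least 1600 bytes
        [switch]$Fix               # without -Fix the function only reports
    )
    $tooSmall = @(Get-VDSwitch | Where-Object { $_.Mtu -lt $MinimumMtu })
    foreach ($vds in $tooSmall) {
        Write-Warning "$($vds.Name): MTU $($vds.Mtu) is below $MinimumMtu"
        if ($Fix) {
            # The physical switch ports must accept frames this large as well,
            # otherwise encapsulated traffic will be dropped
            Set-VDSwitch -VDSwitch $vds -Mtu $MinimumMtu -Confirm:$false | Out-Null
        }
    }
    # Return the switches that were found below the threshold
    return $tooSmall
}
```

Run `Test-VDSwitchMtu` first to see what would change, then `Test-VDSwitchMtu -Fix` once the physical side has been configured by your network admin.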

Migrating from VSS to DVS with the user interface

This operation is really simple. In this example I’ll show how to move:

- physical uplinks (vmnic)

- virtual machine port groups

- virtual machine ports

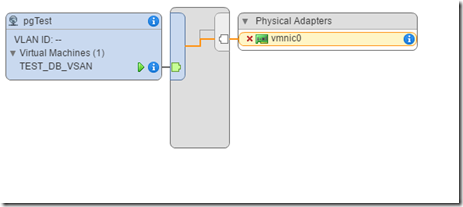

Because I’m in the middle of migrations, I can’t show the cool 6.5 UI. Under Hosts and Clusters > [the single host] > Manage > Networking, the standard vSwitch topology looks like the following:

NOTE: in this example the available physical NICs are disconnected because I’m using a lab carved out of a production environment. Let’s assume that vmnic0 and vmnic1 are physically connected to two stacked switches carrying the same VLANs.

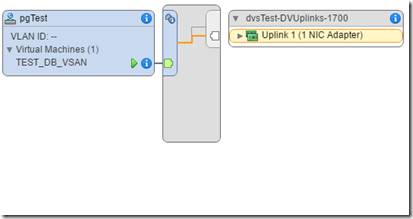
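The same topology can also be read from PowerCLI, which is handy for documenting each host before touching it. This is a minimal sketch, assuming a connected vCenter session; Get-StandardSwitchTopology is a hypothetical name, while Get-VMHost, Get-VirtualSwitch and Get-VirtualPortGroup are standard PowerCLI cmdlets.

```powershell
# Hypothetical helper: one row per standard port group of a host, with the
# vSwitch it lives on, that vSwitch's uplinks and the port group's VLAN ID.
# Assumes an open Connect-VIServer session.
function Get-StandardSwitchTopology {
    param(
        [Parameter(Mandatory)][string]$VMHostName
    )
    $vmhost = Get-VMHost -Name $VMHostName
    foreach ($vss in (Get-VirtualSwitch -VMHost $vmhost -Standard)) {
        foreach ($pg in (Get-VirtualPortGroup -VirtualSwitch $vss)) {
            [pscustomobject]@{
                Host      = $vmhost.Name
                vSwitch   = $vss.Name
                Uplinks   = ($vss.Nic -join ',')   # e.g. vmnic0,vmnic1
                PortGroup = $pg.Name
                VlanId    = $pg.VLanId
            }
        }
    }
}
```

For example, `Get-StandardSwitchTopology -VMHostName "esx01.lab.local" | Format-Table`, where the host name is only a placeholder.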

A VM is connected to show that you can do this operation with near-zero interruption (depending on your physical network, you may lose 1 or 2 pings). Next, the distributed switch (under Networking > [Datacenter]):

Creating the distributed vSwitch is really simple: just right-click your datacenter in the Networking tab and go through a few steps to choose the version and the default port group. For a migration, it is enough to specify the version and a number of uplinks matching the physical connections available on the standard switch.
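For reference, the same creation step can be scripted. This is a sketch under assumptions: the function name New-MigrationVDSwitch is hypothetical, the default of two uplinks mirrors the two physical NICs of this example, and the version string must be one your vCenter and hosts support ("6.5.0" is only an example value).

```powershell
# Hypothetical wrapper around New-VDSwitch (VMware.VimAutomation.Vds module).
# Assumes an open Connect-VIServer session.
function New-MigrationVDSwitch {
    param(
        [Parameter(Mandatory)][string]$DatacenterName,
        [Parameter(Mandatory)][string]$Name,
        # One uplink port per physical NIC currently used by the standard switch
        [int]$NumUplinkPorts = 2,
        # Example value only: use a version supported by your vCenter and hosts
        [string]$Version = "6.5.0"
    )
    $dc = Get-Datacenter -Name $DatacenterName
    New-VDSwitch -Name $Name -Location $dc -NumUplinkPorts $NumUplinkPorts -Version $Version
}
```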

Note: before starting the migration, it is important to create distributed port groups with the same VLAN IDs as the standard ones, and with similar names, so you can recognize them during the VM migration.
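Recreating port groups by hand is where VLAN IDs get mistyped, so here is a sketch that copies every standard port group of a reference host to the distributed switch with the same VLAN ID. Assumptions: the function name and the "dv-" prefix are hypothetical naming choices, VLAN 0 is treated as untagged (the default, so no -VlanId is passed), and VLAN 4095 (guest tagging on a standard switch) is skipped because on a distributed port group it has to be expressed as a trunk range instead.

```powershell
# Hypothetical helper: mirrors the standard port groups of one host onto a
# distributed switch, keeping the same VLAN ID and a similar (prefixed) name.
# Assumes an open Connect-VIServer session and the VMware.VimAutomation.Vds module.
function Copy-StandardPortGroupsToVDSwitch {
    param(
        [Parameter(Mandatory)][string]$VMHostName,
        [Parameter(Mandatory)][string]$VDSwitchName,
        [string]$Prefix = "dv-"
    )
    $vmhost = Get-VMHost -Name $VMHostName
    $vds    = Get-VDSwitch -Name $VDSwitchName
    foreach ($pg in (Get-VirtualPortGroup -VMHost $vmhost -Standard)) {
        $dvName = "$Prefix$($pg.Name)"
        if ($pg.VLanId -eq 4095) {
            # Guest VLAN tagging needs a trunk range on a distributed port group
            Write-Warning "$($pg.Name) uses VLAN 4095, create $dvName manually"
            continue
        }
        if (Get-VDPortgroup -VDSwitch $vds -Name $dvName -ErrorAction SilentlyContinue) {
            Write-Host "$dvName already exists, skipping"
            continue
        }
        $params = @{ VDSwitch = $vds; Name = $dvName }
        if ($pg.VLanId -ne 0) { $params.VlanId = $pg.VLanId }   # VLAN 0 = untagged
        New-VDPortgroup @params
    }
}
```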

Time to migrate:

- Right-click the distributed vSwitch

- Choose Add and Manage Hosts

- Choose “Add host and manage host networking”

- Under “New hosts”, select the host in the cluster

- Select all the network adapter tasks (useful if you have a VMkernel adapter to migrate)

- In “Manage physical adapters”, select the physical NIC currently used by the standard vSwitch and assign it to an uplink

- Skip the VMkernel adapter and Analyze impact steps, because in this case there are no VMkernel adapters to migrate

- In “Migrate VM networking”, expand the VM currently connected to the standard vSwitch and assign it to the matching distributed port group

- At “Ready to complete”, confirm and all the tasks will be executed
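The wizard steps above map fairly directly onto Vds cmdlets, which can help when the same host layout repeats many times. This is a minimal sketch, assuming one host, one physical NIC and one pair of port groups per call, and a host that is not yet a member of the distributed switch. The function name Move-HostToVDSwitch and the example names in the usage line are hypothetical. Unlike the wizard there is no Analyze impact step, and with a single NIC the VMs lose connectivity between the uplink move and the port group change, so it is safer to run it while a second NIC is still on the standard switch.

```powershell
# Hypothetical helper reproducing the wizard for one host and one uplink.
# Assumes an open Connect-VIServer session and the VMware.VimAutomation.Vds module.
function Move-HostToVDSwitch {
    param(
        [Parameter(Mandatory)][string]$VMHostName,
        [Parameter(Mandatory)][string]$VDSwitchName,
        [Parameter(Mandatory)][string]$PhysicalNicName,   # e.g. vmnic1
        [Parameter(Mandatory)][string]$SourcePortGroup,   # standard port group
        [Parameter(Mandatory)][string]$TargetPortGroup    # distributed port group
    )
    $vmhost = Get-VMHost -Name $VMHostName
    $vds    = Get-VDSwitch -Name $VDSwitchName

    # "Add hosts": join the host to the distributed switch
    Add-VDSwitchVMHost -VDSwitch $vds -VMHost $vmhost

    # "Manage physical adapters": move the uplink from the standard switch
    $pnic = Get-VMHostNetworkAdapter -VMHost $vmhost -Physical -Name $PhysicalNicName
    Add-VDSwitchPhysicalNetworkAdapter -DistributedSwitch $vds -VMHostPhysicalNic $pnic -Confirm:$false

    # "Migrate VM networking": reconnect the VMs' adapters to the distributed port group
    $dvpg = Get-VDPortgroup -VDSwitch $vds -Name $TargetPortGroup
    Get-VM -Location $vmhost | Get-NetworkAdapter |
        Where-Object { $_.NetworkName -eq $SourcePortGroup } |
        Set-NetworkAdapter -Portgroup $dvpg -Confirm:$false
}
```

Example call with placeholder names: `Move-HostToVDSwitch -VMHostName "esx01.lab.local" -VDSwitchName "dvSwitch01" -PhysicalNicName "vmnic1" -SourcePortGroup "pgMigrationTest" -TargetPortGroup "pgTest"`.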

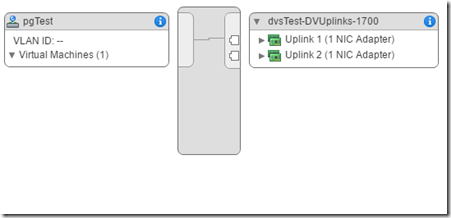

Note: when migrating VMkernel port groups, something can go wrong because of a physical connection problem or a human error. To keep the host reachable, the operation is rolled back automatically (vSphere network rollback). Every sysadmin appreciates this feature. This is the result:

Of course, working with two physical NICs is what guarantees true zero downtime during the network migration, because one NIC keeps carrying traffic while the other is being moved.

From DVS to VSS with PowerCLI

The reverse migration can also be done through the user interface, but in a large environment it becomes repetitive work that is better scripted.

Let’s see how…

Note: at least two physical NICs are required for the following operations. In my case, I start by adding one more available physical NIC.
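Before running the scripts below, a quick pre-check can confirm that every host really has at least two connected physical NICs. This is a sketch under assumptions: Test-HostUplinkCount is a hypothetical name, and a NIC is counted as connected when its reported link speed (BitRatePerSec) is greater than zero.

```powershell
# Hypothetical pre-check: warns about hosts with fewer than $Minimum connected
# physical NICs and returns $false if any host fails.
# Assumes an open Connect-VIServer session.
function Test-HostUplinkCount {
    param(
        [Parameter(Mandatory)][string]$DatacenterName,
        [int]$Minimum = 2
    )
    $ok = $true
    foreach ($vmhost in (Get-Datacenter -Name $DatacenterName | Get-VMHost)) {
        $pnics = Get-VMHostNetworkAdapter -VMHost $vmhost -Physical
        # A disconnected NIC reports a link speed of 0
        $connected = @($pnics | Where-Object { $_.BitRatePerSec -gt 0 })
        if ($connected.Count -lt $Minimum) {
            Write-Warning "$($vmhost.Name): $($connected.Count) connected physical NIC(s), $Minimum required"
            $ok = $false
        }
    }
    return $ok
}
```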

The first important step is to create the standard vSwitch. Doing this by hand on every host is long and repetitive, and the chance of human error is really high. So let’s use PowerCLI: connect to vCenter and create vSwitch0 on every host in the datacenter:

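```powershell
# Placeholders: replace with your own vCenter and datacenter names
$vCenterServer = "<vcenter fqdn or ip>"
$vDCName = "<datacenter name>"

Connect-VIServer -Server $vCenterServer
$vdc = Get-Datacenter -Name $vDCName
$hosts = $vdc | Get-VMHost
foreach ($singlehost in $hosts) {
    Write-Host "Creating vSwitch0 on $($singlehost.Name)"
    # Skip hosts that still have a standard vSwitch0, otherwise New-VirtualSwitch fails
    if (-not (Get-VirtualSwitch -VMHost $singlehost -Standard -Name "vSwitch0" -ErrorAction SilentlyContinue)) {
        $vss = New-VirtualSwitch -VMHost $singlehost -Name "vSwitch0"
    }
}
```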

Note: you can use all these scripts to create standard switches across a datacenter as an alternative to the UI. This is useful in environments with a huge number of hosts that cannot use distributed switches because of their licensing level (below Enterprise Plus).

|

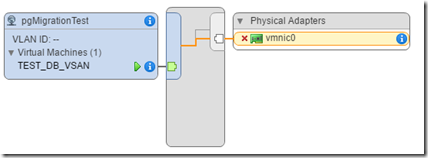

1 2 3 4 |

$vSwitches = $vdc | Get-VMHost | Get-VirtualSwitch -Name "vSwitch0" foreach ($vSwitch in $vSwitches){ New-VirtualPortGroup -Name "pgMigrationTest" -VirtualSwitch $vSwitch -VLanId 0 } |

The port group creation routine completes the VSS preparation for the migration. Now it’s time to move one physical NIC so that traffic can flow through the new vSwitch:

```powershell
$hosts = $vdc | Get-VMHost
foreach ($singlehost in $hosts) {
    $vss = Get-VirtualSwitch -VMHost $singlehost -Standard -Name "vSwitch0"
    # Move the first uplink (vmnic0) to vSwitch0; vmnic1 stays on the distributed switch for now
    $pnic0 = $singlehost | Get-VMHostNetworkAdapter -Physical -Name "vmnic0"
    Add-VirtualSwitchPhysicalNetworkAdapter -VirtualSwitch $vss -VMHostPhysicalNic $pnic0 -Confirm:$false
}
```

Now the switch is ready to accept VM networking. The following routine gets all the VMs in the datacenter and reconnects every network adapter attached to the distributed port group (pgTest) to the new standard port group:

```powershell
# Reconnect every VM network adapter attached to the distributed port group "pgTest"
# to the standard port group "pgMigrationTest"
$vdc | Get-VM | Get-NetworkAdapter |
    Where-Object { $_.NetworkName -eq "pgTest" } |
    Set-NetworkAdapter -NetworkName "pgMigrationTest" -Confirm:$false
```

Here are the results:

Well! With no more VMs on the distributed vSwitch, it’s possible to move the second physical NIC to the standard vSwitches to restore failover:

```powershell
$hosts = $vdc | Get-VMHost
foreach ($singlehost in $hosts) {
    $vss = Get-VirtualSwitch -VMHost $singlehost -Standard -Name "vSwitch0"
    # Move the second uplink (vmnic1) so vSwitch0 has two NICs for failover
    $pnic1 = $singlehost | Get-VMHostNetworkAdapter -Physical -Name "vmnic1"
    Add-VirtualSwitchPhysicalNetworkAdapter -VirtualSwitch $vss -VMHostPhysicalNic $pnic1 -Confirm:$false
}
```
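Before calling it done, it can be worth verifying the end state. The sketch below uses a hypothetical function name, Test-VssMigration, with defaults matching the names used above (pgTest, vSwitch0). It reports VM adapters still attached to the distributed port group and hosts whose vSwitch0 has fewer than two uplinks, returning $true only when both checks pass.

```powershell
# Hypothetical post-migration check. Assumes an open Connect-VIServer session.
function Test-VssMigration {
    param(
        [Parameter(Mandatory)][string]$DatacenterName,
        [string]$OldPortGroup = "pgTest",
        [string]$SwitchName = "vSwitch0",
        [int]$ExpectedUplinks = 2
    )
    $dc = Get-Datacenter -Name $DatacenterName
    $ok = $true

    # 1. No VM network adapter should still point at the distributed port group
    $leftovers = @($dc | Get-VM | Get-NetworkAdapter |
        Where-Object { $_.NetworkName -eq $OldPortGroup })
    foreach ($nic in $leftovers) {
        Write-Warning "$($nic.Parent.Name): '$($nic.Name)' is still on $OldPortGroup"
        $ok = $false
    }

    # 2. Every host's standard switch should now own all the uplinks
    foreach ($vmhost in ($dc | Get-VMHost)) {
        $vss = Get-VirtualSwitch -VMHost $vmhost -Standard -Name $SwitchName
        $uplinks = @($vss.Nic).Count
        if ($uplinks -lt $ExpectedUplinks) {
            Write-Warning "$($vmhost.Name): $SwitchName has $uplinks uplink(s)"
            $ok = $false
        }
    }
    return $ok
}
```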

That’s all folks!