During the last year, Hewlett Packard Enterprise made two big announcements: the enterprise company, born from the split of one of the oldest IT companies in the world (since the IT revolution of 1976), acquired SimpliVity and Nimble Storage (full history here: https://news.hpe.com/timeline/our-history/). As I wrote in my previous post, Nimble Storage is an interesting predictable storage platform. It can dynamically deliver to applications the storage resources that meet their availability and performance needs, reducing the time and money spent tuning the storage itself.

At my first Tech Field Day Extra, at VMworld 2016, I saw Nimble's integration with VMware vSphere. Using storage profiles, it is possible to attach the desired label to a VM, communicating its capacity and IOPS needs to the storage system. As a consequence, the storage knows where to place the VMDK in the correct “region” of the array, keeping track of the metrics and watching what happens during the virtual machine's life cycle.
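The idea of a label that carries capacity and IOPS requirements down to the array can be pictured with a small Python sketch. This is not Nimble's placement algorithm nor the vSphere storage-policy API: `StorageProfile`, `Region` and `place_vmdk` are hypothetical names, and the "most IOPS headroom wins" rule is an assumed, deliberately simple heuristic.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class StorageProfile:
    """Hypothetical VM-level policy: the 'label' attached to a VM."""
    name: str
    capacity_gib: int
    min_iops: int


@dataclass
class Region:
    """Hypothetical slice of the array with free capacity and IOPS headroom."""
    name: str
    free_gib: int
    iops_headroom: int


def place_vmdk(profile: StorageProfile, regions: List[Region]) -> Optional[Region]:
    # Keep only the regions that satisfy both requirements of the profile.
    candidates = [
        r for r in regions
        if r.free_gib >= profile.capacity_gib and r.iops_headroom >= profile.min_iops
    ]
    if not candidates:
        return None  # no region can honour this profile
    # Assumed heuristic: choose the eligible region with the most IOPS headroom.
    best = max(candidates, key=lambda r: r.iops_headroom)
    # Reserve the resources so later placements see the updated state.
    best.free_gib -= profile.capacity_gib
    best.iops_headroom -= profile.min_iops
    return best
```

For example, with `Region("a", 500, 8000)` and `Region("b", 2000, 20000)`, a profile asking for 300 GiB and 10,000 IOPS can only land on `b`, because `a` lacks the IOPS headroom. A real array would also keep re-evaluating placement during the VM's life cycle, which this sketch does not attempt.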

Now this technology is going further: it is becoming a “Multi Cloud Storage”. Let’s look in depth at what was presented at Cloud Field Day 2.

Why Cloud Storage?

In recent years, a growing share of companies moved their business completely into the cloud, reducing the Total Cost of Ownership and gaining scale-out capacity and the ability to absorb the unpredictable events of an application's lifetime. In fact, the adaptive approach was the winning card to code less and deliver applications at any cost! But when you calculate the annual cost and project it over more than three years, full cloud adoption, or rather choosing a single cloud provider, may not be so cost effective, and migrating across cloud providers is not easy.

Every application needs an environment to run in: a physical or virtual instance with at least CPU, memory, network, disk and an operating system. On top of it you can install the libraries, applications, frameworks and whatever else is needed to build or deploy applications and data. Choosing a cloud provider that fits these needs is child's play! Every provider can offer this, with more or fewer features that distinguish one from another. But the standards, formats and underlying implementation on which each cloud provider builds its offering can empower runtime execution while discouraging VM migration. For example, have you tried to migrate an instance from Amazon EC2 to vSphere or Azure? There is no conventional way, because EC2 instances aren't portable virtual machines in the vSphere sense!

Cloud adoption is shifting in a hybrid direction: the ability to lift and shift data as the business changes becomes a must to reduce costs, and it is one of the key parts of a new baseline when a cloud specialist delivers an application. But apart from a few solutions that automate data movement, the effort to transfer workloads is still high and depends on the source and target infrastructures. How can we speed up this movement without compromise? How can we gain visibility into performance? How can we synchronize data across cloud providers?

The ability to “share” storage resources across cloud providers such as AWS and Azure is the first objective of HPE Nimble Cloud Volumes: an enterprise multi-cloud storage service that provides data mobility across cloud systems. This “Pay as you Go” service is an alternative to EBS or Azure Disks, where you can attach instances, take snapshots and clones, or move workloads across public and private clouds. The benefits are:

- Replicate data across cloud providers

- Take snapshots

- Gain visibility and move data

- Pay per use

More technically… during Cloud Field Day 2

During Cloud Field Day, the introduction by Calvin Zito was an important moment to learn what HPE is doing after the split from HP (the storyline here: https://news.hpe.com/timeline/our-history/) and what is inside the presented technologies.

Video: https://www.youtube.com/watch?v=wIsdCXyul64

On the technical side, Doug Ko and Sandeep Karmarkar presented the architecture and a live demo of Cloud Volumes.

Video: https://www.youtube.com/watch?v=pPuQetP7ZWI

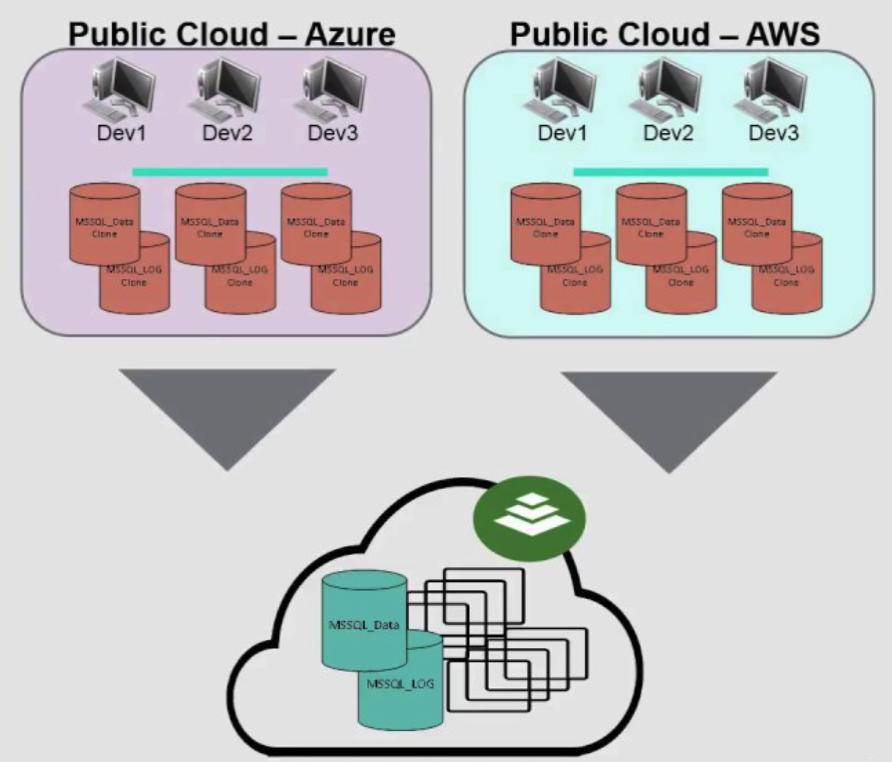

Under the hood, an iSCSI network brings the volumes directly to the virtual instance. The volume then stays under Nimble's control, which handles IOPS, replication, snapshots and so on.
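On a Linux instance, attaching an iSCSI volume generally comes down to two open-iscsi steps: SendTargets discovery against the target portal, then a login to the target. The post does not describe the guest-side procedure Cloud Volumes actually uses, so treat this Python sketch as an assumption-based illustration: the helper names `iscsi_attach_commands` and `attach`, the portal IP and the IQN are hypothetical placeholders, while the `iscsiadm` flags are the standard open-iscsi ones.

```python
import subprocess
from typing import List

ISCSI_PORT = 3260  # IANA-registered default port for iSCSI targets


def iscsi_attach_commands(portal_ip: str, target_iqn: str,
                          port: int = ISCSI_PORT) -> List[List[str]]:
    """Build the open-iscsi commands a Linux guest typically runs to attach a target.

    portal_ip and target_iqn are placeholders: in a real deployment they would
    come from the storage service, not from this sketch.
    """
    if not target_iqn.startswith("iqn."):
        raise ValueError(f"expected an IQN like 'iqn.2017-01.com.example:vol1', got {target_iqn!r}")
    portal = f"{portal_ip}:{port}"
    return [
        # 1. Ask the portal which targets it exposes (SendTargets discovery).
        ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal],
        # 2. Log in to the target; the kernel then exposes it as a block device.
        ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--login"],
    ]


def attach(portal_ip: str, target_iqn: str, dry_run: bool = True) -> None:
    for cmd in iscsi_attach_commands(portal_ip, target_iqn):
        if dry_run:
            print(" ".join(cmd))  # only show what would be executed
        else:
            # Requires root privileges and the open-iscsi package on the instance.
            subprocess.run(cmd, check=True)
```

Calling `attach("10.0.0.10", "iqn.2017-01.com.example:cloudvol-01")` with the default `dry_run=True` only prints the two commands, which is a safe way to review them before running them for real. Everything after login (multipathing, QoS, snapshots) is handled on the storage side and is out of scope here.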

The most interesting part of the session was the ability to lift and shift data logic across different instances or different clouds: by using Nimble Storage on-premises and connecting it to another cloud provider, you can build interesting disaster recovery scenarios.

Video: https://www.youtube.com/watch?v=qjQ2ucLVKBo

Keeping storage under control in a hybrid environment is another desired feature, and Cloud Volumes makes it available across the whole infrastructure. The integration with HPE InfoSight (the software-as-a-service solution that analyzes the entire infrastructure to predict, find and react to most issues in on-premises environments) could be another important management piece for gaining visibility into the cloud back end.

Performance is another key point of this technology: a figure of 30K IOPS puts this solution on the same level as the storage delivered for business-critical applications.
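An IOPS figure only turns into a bandwidth number once an I/O size is fixed, and the presentation summary here does not say which size the 30K IOPS refers to. The Python sketch below assumes a few common block sizes (4, 8 and 32 KiB, chosen purely for illustration). It also applies Little's Law to estimate how many I/Os must be in flight at an assumed 1 ms average latency. The function names are hypothetical.

```python
def throughput_mib_s(iops: float, block_size_kib: float) -> float:
    """Throughput = IOPS x I/O size, converted from KiB/s to MiB/s."""
    return iops * block_size_kib / 1024


def required_queue_depth(iops: float, latency_ms: float) -> float:
    """Little's Law: outstanding I/Os = arrival rate x average latency."""
    return iops * latency_ms / 1000


if __name__ == "__main__":
    for bs in (4, 8, 32):
        print(f"30K IOPS @ {bs} KiB -> {throughput_mib_s(30_000, bs):.1f} MiB/s")
    print(f"Outstanding I/Os at 1 ms: {required_queue_depth(30_000, 1.0):.0f}")
```

This prints 117.2, 234.4 and 937.5 MiB/s for the three block sizes, and 30 outstanding I/Os. The same IOPS figure can therefore mean very different bandwidth depending on the workload's I/O size.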

My POV

From a cloud consultant's perspective, this implementation could be a powerful enabler for building hybrid scenarios across cloud and datacenter. Combining the huge scaling opportunity offered by AWS or Azure with strong data governance and replication puts this product in the same lane as the solutions for business-critical applications.

Finally, I hope to test this solution as soon as possible and/or see a real use case!