Objective 4.1: Configure Environment for Network Virtualization

Comprehend physical infrastructure configuration for NSX Compute, Edge and Management clusters (MTU, Dynamic Routing for Edge, etc.)

Physical infrastructure configuration:

- MTU: must be at least 1600 bytes (configure it at both the physical switch and the vSwitch level). Note: many recent switches ship with jumbo frames (9000 bytes) preconfigured; check the switch vendor documentation before configuring the virtual environment.

- Dynamic Routing: supported protocols are BGP, IS-IS and OSPF. Also enable dynamic routing on the L3 switches so that they can establish routing adjacencies with the ESGs. See https://blog.linoproject.net/vcp6-nv-study-notes-section-2-understand-vmware-nsx-physical-infrastructure-requirements-part-1/

- Multicast: for hybrid replication mode, IGMP snooping must be enabled on the L2 switches that carry VXLAN traffic, and an IGMP querier must be enabled on the L3 switches or routers connected to those L2 switches.

- NTP must be available (don’t block NTP traffic)

- Forward and reverse DNS must be available
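Why 1600? A VXLAN-encapsulated packet carries the guest's entire Ethernet frame plus new outer headers. The Python sketch below adds up the standard fixed header sizes (outer IPv4 20 bytes or IPv6 40, UDP 8, VXLAN 8, inner Ethernet header 14, optional inner 802.1Q tag 4) to estimate the IP MTU the transport network must accept. It assumes no IP options or IPv6 extension headers, and the function name is purely illustrative.

```python
"""Rough VXLAN MTU budget: outer IP packet size for a given guest MTU (sketch)."""

OUTER_IPV4_HEADER = 20      # bytes, IPv4 header without options
OUTER_IPV6_HEADER = 40      # bytes, fixed IPv6 header, no extension headers
UDP_HEADER = 8              # bytes
VXLAN_HEADER = 8            # bytes
INNER_ETHERNET_HEADER = 14  # bytes: dst MAC + src MAC + EtherType (FCS is not carried)
DOT1Q_TAG = 4               # bytes, only if the guest frame carries an 802.1Q tag


def required_transport_mtu(guest_mtu: int = 1500, ipv6_underlay: bool = False,
                           inner_vlan_tag: bool = False) -> int:
    """Return the underlay IP MTU needed to carry guest_mtu frames without fragmentation."""
    outer_ip = OUTER_IPV6_HEADER if ipv6_underlay else OUTER_IPV4_HEADER
    inner_frame = INNER_ETHERNET_HEADER + (DOT1Q_TAG if inner_vlan_tag else 0) + guest_mtu
    return outer_ip + UDP_HEADER + VXLAN_HEADER + inner_frame


if __name__ == "__main__":
    # Print the four combinations for a standard 1500-byte guest MTU.
    for ipv6 in (False, True):
        for tag in (False, True):
            need = required_transport_mtu(ipv6_underlay=ipv6, inner_vlan_tag=tag)
            verdict = "enough" if need <= 1600 else "NOT enough"
            print(f"ipv6={ipv6!s:5} inner_tag={tag!s:5} -> needs {need} bytes, 1600 is {verdict}")
```

With a 1500-byte guest MTU the worst case in this model is 1574 bytes, so 1600 leaves some headroom; guests that use jumbo frames need a correspondingly larger underlay MTU.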

Prepare a Greenfield vSphere Infrastructure for NSX Deployment

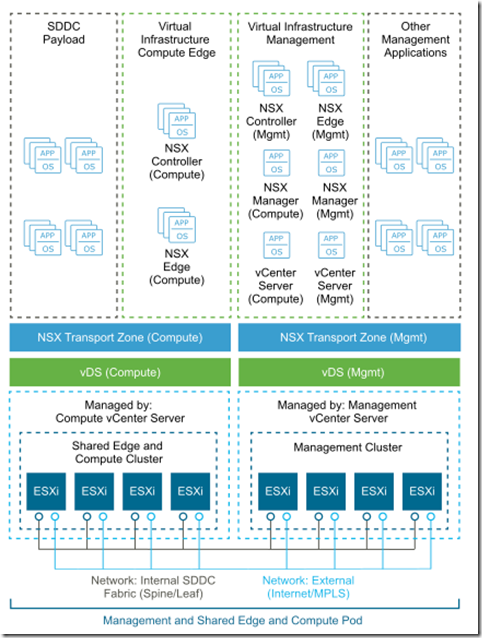

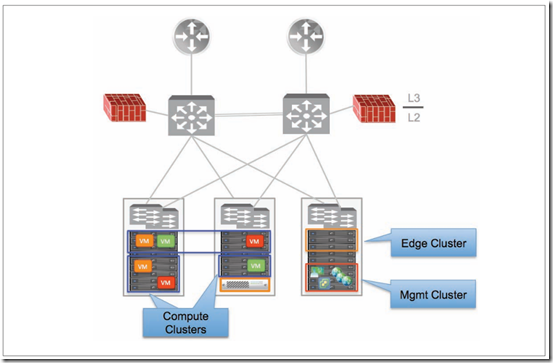

Cluster design:

- Management stack

  - Holds NSX Manager, the controllers, and the ESG and DLR control VMs

  - Also provides the vCenter components

  - Can include other management workloads

- Compute/Edge stack

  - Holds the NSX Controller cluster for the compute stacks

  - Holds the NSX Edge service gateways and DLR control VMs of the compute stacks

- SDDC Payload

- vSphere HA protects the NSX Manager instances by ensuring that the NSX Manager VM is restarted on a different host in the event of primary host failure.

- The NSX Controller nodes of the management stack run on the management cluster

- The data plane remains active during outages in the management and control planes although the provisioning and modification of virtual networks is impaired until those planes become available again.

- The NSX Edge service gateways and DLR control VMs of the compute stack are deployed on the shared edge and compute cluster. The NSX Edge service gateways and DLR control VMs of the management stack run on the management cluster.

- NSX Edge components that are deployed for north/south traffic are configured either in equal-cost multi-path (ECMP) mode, which supports route failover in seconds, or with NSX Edge HA for an active/passive deployment.

Configure Quality of Service (QoS)

Check here: https://blog.linoproject.net/vcp6-nv-study-notes-section-3-configure-and-manage-vsphere-networking-part-2/

Configure Link Aggregation Control Protocol (LACP)

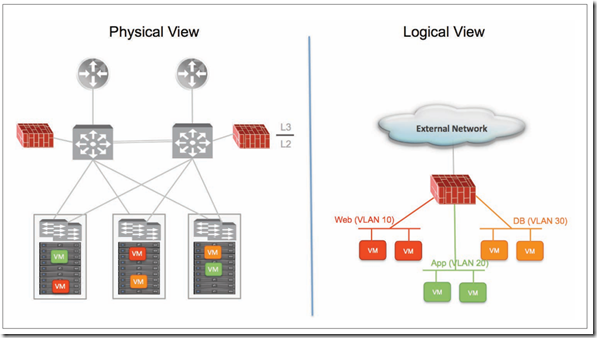

Configure a Brownfield vSphere Infrastructure for NSX

There are many deployment scenarios; in any case, NSX is a non-disruptive technology that can be introduced with minimal physical and virtual configuration changes:

- NSX on top of the brownfield network infrastructure

- Scenario 1: Micro-segmentation without overlays

- Scenario 2: Micro-segmentation with Overlays:

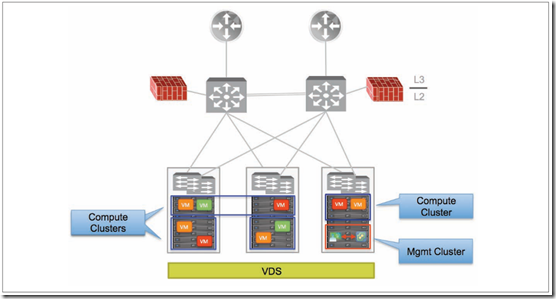

- ESXi hosts must be connected to the same distributed switch

- ESXi host requirements:

  - Management cluster: in addition to NSX Manager, the NSX Controller cluster must be deployed

  - Compute cluster: reuse the existing clusters or add new ones for the VMs connected to logical switches

  - Edge cluster: best practice is to deploy a new edge cluster for the ESG and DLR control VMs

For further information, see: https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/whitepaper/products/nsx/vmware-nsx-brownfield-design-and-deployment-guide-white-paper.pdf

Determine how IP address assignments work in VMware NSX

Determine minimum permissions required to perform an NSX deployment task in a vSphere implementation

Client and User Access

- If you added ESXi hosts by name to the vSphere inventory, ensure that forward and reverse name resolution is working. Otherwise, NSX Manager cannot resolve the IP addresses.

- Permissions to add and power on virtual machines

- Access to the datastore where you store virtual machine files, and the account permissions to copy files to that datastore

- Cookies enabled on your Web browser, to access the NSX Manager user interface

- From NSX Manager, ensure port 443 is accessible from the ESXi host, the vCenter Server, and the NSX appliances to be deployed. This port is required to download the OVF file on the ESXi host for deployment.

- A Web browser that is supported for the version of vSphere Web Client you are using. See Using the vSphere Web Client in the vCenter Server and Host Management documentation for details.

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf
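A quick way to confirm the port 443 requirement is a plain TCP connect test run from each source system (the ESXi host network, vCenter Server, the network of the appliances to be deployed). The sketch below uses only Python's standard `socket` module; it only proves that a TCP handshake succeeds, not that HTTPS or the OVF download actually works. The same probe can be pointed at vCenter Server on port 80 for the host-preparation prerequisite.

```python
"""Minimal TCP reachability probe (a sketch, not an NSX tool)."""
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port is established within timeout seconds."""
    try:
        # create_connection resolves the name and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures,
        # which are all OSError subclasses in Python 3.
        return False
```

For example, `tcp_port_open("nsxmgr.example.local", 443)` returns `True` only if the handshake completes within the timeout (`nsxmgr.example.local` is a placeholder name).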

Objective 4.2: Deploy VMware NSX Components

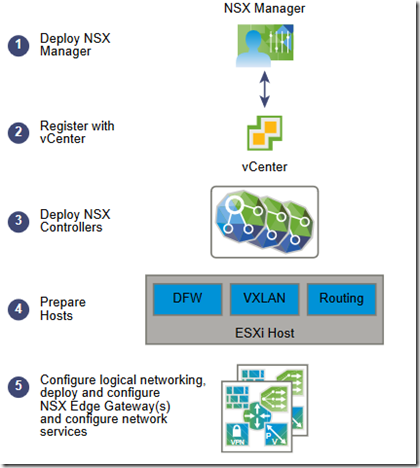

Workflow:

Install/Register NSX Manager

Procedure: Locate the NSX Manager Open Virtualization Appliance (OVA) file.

- In Firefox, open vCenter

- Select VMs and Templates, right-click your datacenter, and select Deploy OVF Template

- Paste the download URL or click Browse to select the file on your computer

- Select the Accept extra configuration options checkbox -> this allows you to set the IPv4 and IPv6 addresses, default gateway, DNS, NTP, and SSH properties during installation

- Accept the VMware license

- Edit the NSX Manager name (if required)

- Select the host or cluster on which to deploy the NSX Manager virtual appliance

- Change the virtual disk format to thick provisioning and select the destination datastore for the VM configuration files and virtual disks

- Select the port group for NSX Manager

- Optionally, join the Customer Experience Improvement Program (just select this checkbox)

- Connect to the NSX Manager appliance GUI at https://appliance_ip_or_fqdn and log in as admin with the password you configured

- Make sure that vPostgres, RabbitMQ and NSX management services are running

- Register NSX Manager with vCenter (each NSX Manager can be registered with only one vCenter Server, and a vSphere user account with the Administrator role is required)

- Under Appliance Management, click Manage vCenter Registration

- Edit the vCenter Server element to point to the vCenter Server’s IP address or hostname, and enter the vCenter Server user name and password

- Check that the certificate thumbprint matches the certificate of the vCenter Server

- Do not tick Modify plugin script download location, unless the NSX Manager is behind a firewall type of masking device

- Confirm that the vCenter Server status is Connected –> If the vSphere Web Client is already open, log out of vCenter and log back in with the same Administrator role used to register NSX Manager with vCenter; otherwise, the vSphere Web Client will not display the Networking & Security icon on the Home tab

- Register with SSO (an NTP server must be specified so that the SSO server and NSX Manager clocks stay in sync)

- Click the Manage tab, then click NSX Management Service

- Type the name or IP address of the host that has the lookup service

- Type the port number –> 443 for vSphere >= 6.0 and 7444 for vSphere 5.5

- Check that the certificate thumbprint matches the certificate of the vCenter Server

- Confirm that the Lookup Service status is Connected

- Assign a role to the SSO user

Prepare ESXi hosts

Prerequisites:

- Register vCenter with NSX Manager and deploy NSX controllers.

- Verify that DNS reverse lookup returns a fully qualified domain name when queried with the IP address of NSX Manager

- Verify that hosts can resolve the DNS name of vCenter server

- Verify that hosts can connect to vCenter Server on port 80

- Verify that the network time on vCenter Server and ESXi hosts is synchronized

- For each host cluster that will participate in NSX, verify that hosts within the cluster are attached to a common VDS

- If you have vSphere Update Manager (VUM) in your environment, you must disable it before preparing clusters for network virtualization

- Before beginning the NSX host preparation process, always make sure that the cluster is in the resolved state—meaning that the Resolve option does not appear in the cluster’s Actions list
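The DNS prerequisites above (reverse lookup of the NSX Manager IP returning its FQDN, hosts resolving the vCenter Server name) can be spot-checked with a forward-then-reverse lookup. This Python sketch uses `socket.gethostbyname` and `socket.gethostbyaddr`, so it only covers IPv4 A and PTR records as seen by the machine running it. The resolver functions are injectable parameters, an illustrative design choice so that the check can be tested without a real DNS server.

```python
"""Forward/reverse DNS consistency check (sketch)."""
import socket
from typing import Callable, List, Tuple


def forward_reverse_ok(
    fqdn: str,
    forward: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], Tuple[str, List[str], List[str]]] = socket.gethostbyaddr,
) -> bool:
    """Return True if fqdn resolves to an IPv4 address whose PTR record points back to fqdn."""
    try:
        ip = forward(fqdn)                  # A record lookup
        primary, aliases, _ = reverse(ip)   # PTR lookup on the returned address
    except OSError:
        # socket.gaierror and socket.herror are both OSError subclasses.
        return False
    # Compare case-insensitively and ignore a trailing dot.
    names = {name.lower().rstrip(".") for name in [primary, *aliases]}
    return fqdn.lower().rstrip(".") in names
```

Ideally, run it from the systems that actually need the resolution (for example, against the vCenter Server FQDN from the host management network).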

Procedure:

- In vCenter, navigate to Home > Networking & Security > Installation and select the Host Preparation tab

- For all clusters that will require NSX logical switching, routing, and firewalls, click the gear icon and click Install

- Monitor the installation until the Installation Status column displays a green check mark

VIBs are installed and registered with all hosts within the prepared cluster:

- esx-vsip

- esx-vxlan

Verify with `esxcli software vib list | grep esx`

Note: After host preparation, a host reboot is not required; but if you move a host to an unprepared cluster, the NSX VIBs automatically get uninstalled from the host. In this case, a host reboot is required to complete the uninstall process.

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf

Deploy NSX Controllers

Procedure:

- In vCenter, navigate to Home > Networking & Security > Installation and select the Management tab

- In the NSX Controller nodes section, click the Add Node icon

- Enter the NSX Controller settings appropriate to your environment.

- If you have not already configured an IP pool for your controller cluster, configure one now by clicking New IP Pool

- Type and re-type a password for the controller. –> Password must not contain the username as a substring. Any character must not consecutively repeat 3 or more times.

- After the first controller is completely deployed, deploy two additional controllers.

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf
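The two controller password rules quoted above are easy to pre-check before typing the password into the wizard. This Python sketch covers only those two rules; the installer may enforce additional complexity rules, the default `admin` username is an assumption, and the case-insensitive substring match is a deliberately conservative choice.

```python
"""Pre-check the two NSX Controller password rules listed above (sketch)."""
import re
from typing import List


def controller_password_problems(password: str, username: str = "admin") -> List[str]:
    """Return a list of rule violations; an empty list means both rules pass."""
    problems = []
    # Rule 1: the username must not appear as a substring (checked case-insensitively here).
    if username and username.lower() in password.lower():
        problems.append("contains the username as a substring")
    # Rule 2: no character may repeat 3 or more times in a row.
    # (.)\1\1 matches any character followed by two more copies of itself.
    if re.search(r"(.)\1\1", password):
        problems.append("repeats a character 3 or more times in a row")
    return problems
```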

Understand assignment of Segment ID Pool and appropriate need for Multicast addresses

VXLAN segments are built between VXLAN tunnel end points (VTEPs). A hypervisor host is an example of a typical VTEP. Each VXLAN tunnel has a segment ID. –> You must specify a segment ID pool for each NSX Manager to isolate your network traffic.

Prerequisites:

- When determining the size of each segment ID pool, keep in mind that the segment ID range controls the number of logical switches that can be created –> Choose a small subset of the 16 million potential VNIs. You should not configure more than 10,000 VNIs in a single vCenter because vCenter limits the number of dvPortgroups to 10,000

- If VXLAN is in place in another NSX deployment, consider which VNIs are already in use and avoid overlapping VNIs
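The sizing guidance reduces to simple arithmetic: VNIs are 24-bit, so there are 2^24 = 16,777,216 possible values, and a pool such as 5000-5999 yields 1,000 logical switches, well under the 10,000 dvPortgroup limit. The Python sketch below checks a proposed range against that limit and against ranges already used by another NSX deployment. The lower bound of 5000 is an assumption (taken to be the minimum NSX for vSphere accepts), and the helper names are hypothetical.

```python
"""Segment ID pool sizing and overlap checks (sketch)."""
from typing import List, Tuple

VNI_SPACE = 2 ** 24          # 24-bit VXLAN Network Identifier: 16,777,216 values
DVPORTGROUP_LIMIT = 10_000   # per-vCenter dvPortgroup limit quoted in these notes
NSX_MIN_SEGMENT_ID = 5000    # assumption: lowest segment ID accepted by NSX for vSphere


def parse_range(text: str) -> Tuple[int, int]:
    """Parse 'start-end' and validate it against the assumed segment ID bounds."""
    start, end = (int(part) for part in text.split("-"))
    if not NSX_MIN_SEGMENT_ID <= start <= end < VNI_SPACE:
        raise ValueError(f"invalid segment ID range: {text}")
    return start, end


def check_pool(new: str, in_use: List[str]) -> List[str]:
    """Return warnings for a proposed pool, given ranges used by other NSX deployments."""
    start, end = parse_range(new)
    warnings = []
    size = end - start + 1  # inclusive range
    if size > DVPORTGROUP_LIMIT:
        warnings.append(f"{size} IDs exceeds the {DVPORTGROUP_LIMIT} dvPortgroup limit per vCenter")
    for other in in_use:
        o_start, o_end = parse_range(other)
        if start <= o_end and o_start <= end:  # closed intervals overlap
            warnings.append(f"overlaps {other}")
    return warnings
```

For example, `check_pool("5000-5999", ["5500-6500"])` flags the overlap, while a disjoint pool such as `"6000-6999"` passes.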

Procedure:

- In vCenter, navigate to Home > Networking & Security > Installation and select the Logical Network Preparation tab

- Click Segment ID > Edit

- Type a range for segment IDs, such as 5000-5999

- If any of your transport zones will use multicast or hybrid replication mode, add a multicast address or a range of multicast addresses. When VXLAN multicast and hybrid replication modes are configured and working correctly, a copy of multicast traffic is delivered only to hosts that have sent IGMP join messages; otherwise, the physical network floods all multicast traffic to all hosts within the same broadcast domain. To avoid such flooding, you must do the following:

- Make sure that the underlying physical switch is configured with an MTU larger than or equal to 1600

- Make sure that the underlying physical switch is correctly configured with IGMP snooping and an IGMP querier in network segments that carry VTEP traffic

- Make sure that the transport zone is configured with the recommended multicast address range. The recommended multicast address range starts at 239.0.1.0/24 and excludes 239.128.0.0/24. Do not use 239.0.0.0/24 or 239.128.0.0/24 as the multicast address range, because these networks are used for local subnet control, meaning that the physical switches flood all traffic that uses these addresses

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf
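The multicast address guidance can be expressed as a range check. The Python sketch below uses the standard `ipaddress` module to reject ranges that overlap 239.0.0.0/24 or 239.128.0.0/24. Restricting the range to the 239.0.0.0/8 administratively scoped block is an assumption in line with the 239.0.1.0/24 recommendation, not a documented NSX rule.

```python
"""Check a proposed VXLAN multicast range against the guidance above (sketch)."""
import ipaddress
from typing import List

# Assumption: keep VXLAN groups inside the administratively scoped 239.0.0.0/8 block.
ADMIN_SCOPED = ipaddress.ip_network("239.0.0.0/8")
# Networks the notes say to avoid because switches flood them.
AVOID = [ipaddress.ip_network("239.0.0.0/24"), ipaddress.ip_network("239.128.0.0/24")]


def multicast_range_problems(first: str, last: str) -> List[str]:
    """Return a list of problems with the inclusive range first..last (empty means OK)."""
    lo, hi = ipaddress.IPv4Address(first), ipaddress.IPv4Address(last)
    if lo > hi:
        return ["first address is greater than last address"]
    problems = []
    if lo not in ADMIN_SCOPED or hi not in ADMIN_SCOPED:
        problems.append("range is outside 239.0.0.0/8")
    for net in AVOID:
        # The range [lo, hi] overlaps the network if neither lies wholly before the other.
        if lo <= net.broadcast_address and net.network_address <= hi:
            problems.append(f"range overlaps {net}")
    return problems
```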

Install vShield Endpoint (Guest Introspection)

Prerequisites:

- A datacenter with supported versions of vCenter Server and ESXi installed on each host in the cluster

- If the hosts in your clusters were upgraded from vCenter Server version 5.0 to 5.5, you must open ports 80 and 443 on those hosts

- Hosts in the cluster where you want to install Guest Introspection have been prepared for NSX –> check Host preparation

- NSX Manager 6.2 installed and running

- Ensure the NSX Manager and the prepared hosts that will run Guest Introspection services are linked to the same NTP server and that time is synchronized

Procedure:

- On the Installation tab, click Service Deployments

- Click the New Service Deployment

- In the Deploy Network and Security Services dialog box, select Guest Introspection

- In Specify schedule (at the bottom of the dialog box), select Deploy now to deploy Guest Introspection immediately, then click Next

- Select the datacenter and cluster(s) where you want to install Guest Introspection, and click Next

- On the Select storage and Management Network page, select the datastore for the service virtual machines' storage, or select Specified on host

- Select the distributed virtual port group to host the management interface

- In IP assignment, select one of the following:

- DHCP

- An IP pool

- Click Next and then click Finish on the Ready to complete page

- Monitor the deployment until the Installation Status column displays Succeeded

- If the Installation Status column displays Failed, click the icon next to Failed

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf

Create an IP pool and Understand when to use IP Pools versus DHCP for NSX Controller Deployment

Source: https://docs.vmware.com/en/VMware-NSX-for-vSphere/6.2/nsx_62_install.pdf
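An IP pool is a static range (plus gateway and prefix length) from which NSX Manager assigns addresses itself, so the addresses are predictable and no DHCP server is needed on that network. The controller deployment step above asks for an IP pool, while DHCP is offered as an alternative for components such as the Guest Introspection service VMs (see the IP assignment step above). The toy Python class below models only the allocation behaviour: lowest free address first, gateway skipped, `None` when exhausted. `IpPool` and its methods are hypothetical and do not reflect the NSX API.

```python
"""Toy model of an NSX-style static IP pool (hypothetical class, not the NSX API)."""
import ipaddress
from typing import Optional


class IpPool:
    def __init__(self, name: str, gateway: str, prefix_len: int, first: str, last: str):
        self.name = name
        # Derive the subnet from the gateway and prefix length.
        self.network = ipaddress.ip_network(f"{gateway}/{prefix_len}", strict=False)
        self.gateway = ipaddress.ip_address(gateway)
        self.first = ipaddress.ip_address(first)
        self.last = ipaddress.ip_address(last)
        if not (self.first in self.network and self.last in self.network
                and self.first <= self.last):
            raise ValueError("range must lie inside the gateway's subnet")
        self.allocated = set()  # addresses currently handed out

    def allocate(self) -> Optional[ipaddress.IPv4Address]:
        """Hand out the lowest free address in the range, skipping the gateway."""
        addr = self.first
        while addr <= self.last:
            if addr != self.gateway and addr not in self.allocated:
                self.allocated.add(addr)
                return addr
            addr += 1
        return None  # pool exhausted

    def release(self, addr: str) -> None:
        """Return an address to the pool (no-op if it was not allocated)."""
        self.allocated.discard(ipaddress.ip_address(addr))
```

For example, a hypothetical `IpPool("controller-pool", "192.0.2.1", 24, "192.0.2.11", "192.0.2.13")` provides exactly the three addresses needed for a three-node controller cluster and returns `None` on a fourth request.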