Objective 1.1: Compare and Contrast the Benefits of a VMware NSX Implementation

Determine challenges with physical network implementations

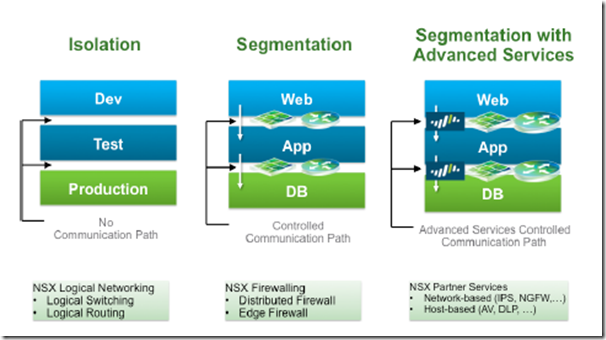

- Network micro-segmentation wraps security controls around much smaller groups of resources (often down to a small group of virtualized resources or individual virtual machines).

- Micro-segmentation has long been considered a security best practice, but it has been difficult to apply in traditional environments.

- The perimeter-centric network security strategy for enterprise data centers has proven to be inadequate. Modern attacks exploit this perimeter-only defense, moving laterally within the data center from workload to workload with few or no controls to block their propagation.

- Using the traditional firewall approach to achieve micro-segmentation quickly runs into two key operational barriers: throughput capacity and operations management –> the capacity issue can be overcome at a cost, because it is possible to buy enough physical or virtual firewalls to deliver the capacity required for micro-segmentation.

- However, operational effort grows much faster than the number of workloads, especially given the increasingly dynamic nature of today’s data centers. (If firewall rules must be manually added, deleted or modified every time a virtual machine is added, moved or decommissioned, the rate of change quickly overwhelms IT operations.)

- Network provisioning is slow: the current operational model results in slow, manual, error-prone provisioning of network services to support application deployment (box-by-box configuration from a terminal, via the CLI or ad hoc scripts) –> complexity and risk are further compounded by the need to ensure that network changes made for one application do not adversely impact other applications.

- Workload placement and mobility is limited: the current device-centric approach to networking confines workload mobility to individual physical subnets and availability zones. To reach available compute resources in the data center, network operators are forced to configure VLANs, ACLs, firewall rules, and so forth manually, box by box –> the process is not only slow and complex, but will also eventually reach configuration limits (e.g., the 12-bit VLAN ID allows at most 4094 usable VLANs).

- Additional Data Center Networking Challenges:

- VLAN sprawl caused by constantly having to overcome IP addressing and physical topology limitations required to logically group sets of resources

- Firewall rule sprawl resulting from centralized firewalls deployed in increasingly dynamic environments coupled with the common practice of adding new rules but rarely removing any for fear of disrupting service availability

- Performance choke points and increased network capacity costs, because traffic must be hair-pinned across multiple hops to reach essential network services that are not pervasively available. The growth of East-West traffic in the data center exacerbates this problem.

- Security and network service blind spots that result from avoiding hair-pinning by deploying other, riskier routing schemes

- Increased complexity in supporting the dynamic nature of today’s cloud data center environments.
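A rough way to see why manually maintained, per-workload rules stop scaling is to assume, purely as a simplification, that full micro-segmentation needs one IP-based rule for every pair of workloads. The minimal Python sketch below counts those rules. It also counts how many new rules a single added VM creates. The function names are illustrative and do not come from any NSX tool.

```python
from itertools import combinations


def pairwise_rules(num_workloads: int) -> int:
    """Rules needed if every unordered pair of workloads gets its own rule."""
    return num_workloads * (num_workloads - 1) // 2


def rules_added_by_new_vm(num_workloads: int) -> int:
    """Extra rules created when one VM joins num_workloads existing VMs."""
    return pairwise_rules(num_workloads + 1) - pairwise_rules(num_workloads)


# Cross-check the closed form against explicit enumeration for a small case.
assert pairwise_rules(10) == len(list(combinations(range(10), 2)))

for n in (10, 100, 1000):
    print(f"{n} workloads: {pairwise_rules(n)} rules, "
          f"+{rules_added_by_new_vm(n)} for the next VM")
```

Under this assumption, the rule count grows quadratically. Each new VM touches as many rules as there are existing workloads, which is the rate-of-change problem described above. Writing policy against groups of VMs rather than IP addresses avoids editing rules when group membership changes.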

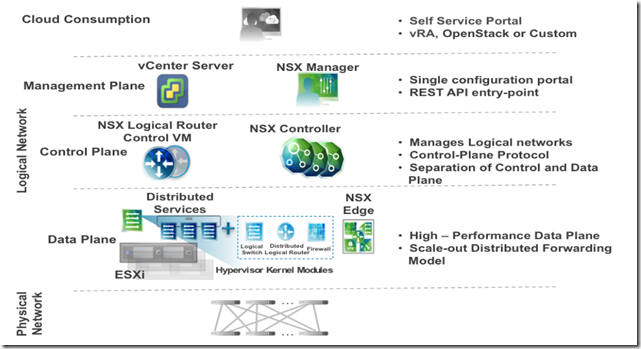

Understand common VMware NSX terms

- Physical Network

- Physical switches, routers, firewalls, etc.

- Logical network

- Data Plane:

- Distributed Services

- Distributed Switch (vDS)

- Logical Switch

- Distributed Logical Router (DLR)

- Distributed Firewall (DFW)

- NSX Edge


- Control Plane

- NSX DLR Control VM

- NSX Controller

- Management Plane

- vCenter Server

- NSX Manager

- Cloud Consumption (vRA, OpenStack, custom portals, NSX REST API)

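For quick self-review, the plane-to-component mapping above can be captured as a small lookup table. This is a study aid only, and the dictionary and helper names are hypothetical.

```python
# Study aid: NSX components grouped by plane, as listed above.
NSX_PLANES = {
    "management": ("vCenter Server", "NSX Manager"),
    "control": ("NSX Controller", "NSX DLR Control VM"),
    "data": (
        "Distributed Switch (vDS)",
        "Logical Switch",
        "Distributed Logical Router (DLR)",
        "Distributed Firewall (DFW)",
        "NSX Edge",
    ),
}


def plane_of(component: str) -> str:
    """Return the plane a component belongs to, or raise KeyError."""
    for plane, components in NSX_PLANES.items():
        if component in components:
            return plane
    raise KeyError(f"unknown NSX component: {component!r}")


print(plane_of("NSX Controller"))  # control
```

Keeping the three planes distinct helps with failure analysis later. For example, losing a management-plane component does not by itself stop data-plane forwarding.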

Differentiate NSX network and security functions and services

- NSX Network functions

- Switching: enables extension of L2 segments across the fabric, independent of the physical network design

- ECMP (Equal-Cost Multipath routing) and DLR (routing in general): routing between IP subnets can be done without leaving the hypervisor, with minimal host CPU/memory overhead. The DLR provides optimal East-West communication, and the Edge provides North-South communication (including to external networks) with ECMP-based routing.

- Connectivity to physical networks: L2 and L3 gateway functions provide communication between workloads deployed in the logical and physical spaces

- NSX Service Platform: these services are either a built-in part of NSX or available from 3rd-party vendors

- Networking and Edge Services

- Firewalling: the Edge firewall is part of the ESG (NSX Edge Services Gateway) –> it provides a perimeter firewall that can be used in addition to the physical firewall. It is helpful for building:

- PCI zones

- Multi-tenant environments

- DevOps-style connectivity without changes to the physical network

- VPN: L2 and L3 VPNs interconnect remote data centers and provide remote user access:

- L2VPN

- IPsec VPN

- SSL VPN

- Data Security

- Load balancing


- Security Services

- DFW for L2-L4 traffic (enforced directly in the kernel, at the vNIC level). NSX also has an extensible framework that allows security vendors to insert 3rd-party services (such as L7 protection and IDS/IPS).

- Edge Firewalling

- SpoofGuard: validates VM IP addresses to prevent IP spoofing

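SpoofGuard's core check can be illustrated with a toy model. The model assumes each VM vNIC has a set of approved IP addresses and drops any packet whose source IP is not in that set. The class below is hypothetical. It ignores how approvals are obtained in a real deployment (manual approval, trust on first use, and so on), and it ignores MAC handling.

```python
from ipaddress import ip_address


class SpoofGuardSketch:
    """Toy model of SpoofGuard-style validation (hypothetical class).

    Each vNIC has a set of approved IP addresses; traffic whose source IP
    is not approved for the sending vNIC is dropped.
    """

    def __init__(self) -> None:
        self.approved: dict = {}  # vNIC id -> set of approved IPs

    def approve(self, vnic: str, ip: str) -> None:
        self.approved.setdefault(vnic, set()).add(ip_address(ip))

    def allow(self, vnic: str, src_ip: str) -> bool:
        return ip_address(src_ip) in self.approved.get(vnic, set())


sg = SpoofGuardSketch()
sg.approve("web-01/vnic0", "10.0.1.10")
print(sg.allow("web-01/vnic0", "10.0.1.10"))  # True: approved binding
print(sg.allow("web-01/vnic0", "10.0.1.99"))  # False: spoofed source
```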

Differentiate common use cases for VMware NSX

NSX has been deployed in full production, at scale, by several of the largest cloud service providers, global financial institutions and enterprise data centers in the world. Typical use cases include:

- Data Center Automation:

- rapid application deployment with automated network provisioning in lock-step with compute and storage provisioning

- quick and easy insertion for both virtual and physical services

- Data Center Simplification:

- freedom from VLAN sprawl, firewall rule sprawl, and convoluted traffic patterns

- isolated development, test, and production environments all operating on the same physical infrastructure

- Data Center Enhancement

- fully distributed security and network services, with centralized administration

- push-button, no-compromise disaster recovery / business continuity

- Multi-tenant Clouds

- automated network provisioning for tenants while enabling complete customization and isolation

- maximized hardware sharing across tenants (and physical sites)

Objective 1.2: Understand VMware NSX Architecture

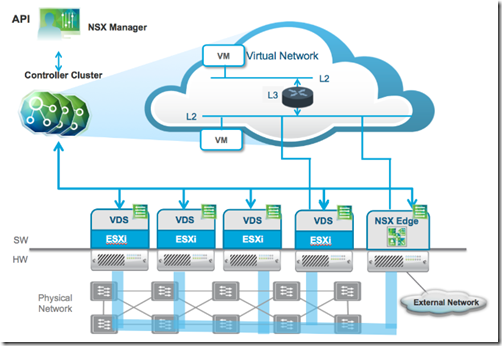

Differentiate component functionality of NSX stack infrastructure components

NSX logical networks leverage two types of access layer entities: the hypervisor access layer and the gateway access layer. The hypervisor access layer represents the point of attachment to the logical networks for virtual endpoints. The gateway access layer provides L2 and L3 connectivity into the logical space for devices deployed in the physical network infrastructure.

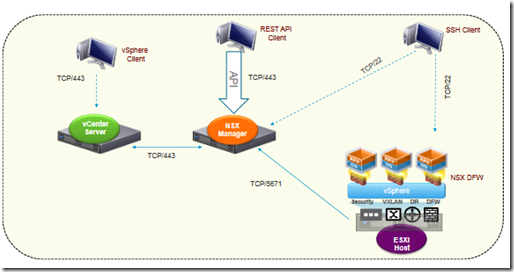

- NSX Manager

- Management plane virtual appliance (VA) –> it serves as the entry point for the NSX REST API

- It is tightly coupled with vCenter Server –> 1:1 relationship

- Provides the Networking and Security plugin for the vCenter Web UI to control NSX functionality

- Responsible for deploying the controller cluster and preparing ESXi hosts

- Responsible for deploying and configuring NSX Edge gateways and associated services

- Secures control plane communication in the NSX architecture (creates self-signed certificates for the controller cluster nodes and secures all NSX components using mutual certificate authentication)

- SSL for control plane communication is disabled by default in NSX 6.0 and enabled by default in NSX 6.1

- NSX Manager is a VA, so it is adequately protected by vSphere HA and can be moved dynamically across hosts with vMotion/DRS

- An NSX Manager outage affects only specific functions, such as:

- identity based firewall

- flow monitoring collection

- NSX Manager data can be backed up on demand (NSX Manager UI) or with a scheduled periodic backup. Note: a restore is supported only on a freshly deployed NSX Manager appliance.

- NSX Manager requirements:

- NSX 6.1 –> 4 vCPU, 12 GB RAM and 60 GB OS disk

- NSX 6.2 default –> 4 vCPU, 16 GB RAM and 60 GB OS disk

- NSX 6.2 large deployment –> 8 vCPU, 24 GB RAM and 60 GB OS disk
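NSX Manager is the REST API entry point. The following minimal sketch builds an authenticated request using only the Python standard library. Assumptions:

- the hostname and credentials are placeholders;
- HTTP Basic authentication is used;
- `/api/2.0/vdn/controller` is taken from the NSX for vSphere 6.x API guide, so check the guide for your release.

The request is built but not sent.

```python
import base64
import urllib.request

NSX_MANAGER = "nsxmgr.example.local"  # placeholder hostname (assumption)


def build_nsx_request(path: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a GET request to the NSX Manager REST API."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{NSX_MANAGER}{path}",
        headers={
            "Authorization": f"Basic {token}",  # HTTP Basic auth
            "Accept": "application/xml",        # NSX-v APIs primarily use XML
        },
        method="GET",
    )


# Query the controller cluster nodes (endpoint assumed from the NSX-v API guide).
req = build_nsx_request("/api/2.0/vdn/controller", "admin", "changeme")
print(req.get_method(), req.full_url)
```

To actually send the request, pass `req` to `urllib.request.urlopen` together with an SSL context that trusts the NSX Manager certificate.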

- Controller Cluster

- is the control plane component responsible for managing the hypervisor switching and routing modules

- consists of controller nodes that manage specific logical switches

- The use of controller cluster in managing VXLAN based logical switches eliminates the need for multicast configuration at the physical layer for VXLAN overlay

- supports an ARP suppression mechanism, reducing the need to flood ARP broadcast requests across an L2 network domain where virtual machines are connected

- For resiliency and performance, production deployments should place the controller VMs on three distinct hosts. The cluster is a scale-out distributed system in which each controller node is assigned a set of roles that define the types of tasks the node can perform

- In order to increase the scalability characteristics of the NSX architecture, a slicing mechanism is utilized to ensure that all the controller nodes can be active at any given time

- If a controller node fails, the slices owned by that node are reassigned to the remaining members of the cluster –> electing the master for each role requires a majority vote of all active and inactive nodes in the cluster –> the controller cluster must be deployed with an odd number of nodes (a 4-node cluster, like a 3-node cluster, survives only one node failure)

- NSX controller nodes are deployed as virtual appliances (the deployment is handled by NSX Manager)

- Each NSX deployment requires a minimum of 3 controller nodes, each with 4 vCPU (2048 MHz reserved), 4 GB RAM and a 20 GB OS disk. The nodes must be able to reach each other and NSX Manager, and need 3 distinct IP addresses (one per node).

- It is recommended to spread the controller nodes across separate ESXi hosts –> use DRS anti-affinity rules to prevent more than one controller node from running on the same host
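The slicing and majority-vote behaviour can be sketched as follows. The round-robin slice placement is an assumption made for illustration, since the actual NSX placement algorithm is internal. The quorum rule follows the text: a master election needs a majority of all configured nodes, whether active or not. Node names are hypothetical.

```python
def has_quorum(active_nodes: int, cluster_size: int) -> bool:
    """Majority of ALL configured nodes (active and inactive) is required."""
    return active_nodes > cluster_size // 2


def assign_slices(num_slices: int, nodes: list) -> dict:
    """Spread slices round-robin across nodes (simplified placement policy)."""
    return {s: nodes[s % len(nodes)] for s in range(num_slices)}


def reassign_after_failure(assignment: dict, failed: str, survivors: list) -> dict:
    """Move only the failed node's slices onto the surviving nodes."""
    new_assignment = dict(assignment)
    orphaned = sorted(s for s, node in assignment.items() if node == failed)
    for i, s in enumerate(orphaned):
        new_assignment[s] = survivors[i % len(survivors)]
    return new_assignment


nodes = ["controller-1", "controller-2", "controller-3"]
slices = assign_slices(6, nodes)
after = reassign_after_failure(slices, "controller-2", ["controller-1", "controller-3"])
print(after)

# Fault tolerance: 3 and 4 nodes both survive only one failure.
for size in (3, 4, 5):
    tolerated = max(f for f in range(size) if has_quorum(size - f, size))
    print(f"{size} nodes tolerate {tolerated} failure(s)")
```

The last loop shows why an odd cluster size is recommended. Adding a fourth node does not increase the number of failures the cluster survives, while adding a fifth does.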

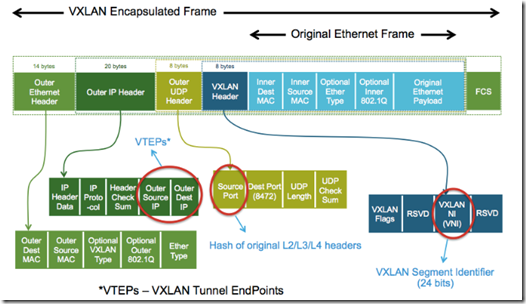
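The controller cluster notes above mention ARP suppression. It can be modelled as a lookup in a controller-maintained IP-to-MAC table, keyed by logical switch. This is a simplified sketch. The class and function names and the VNI value 5001 are hypothetical, and a real host also keeps a local cache before asking the controller.

```python
class ControllerArpTable:
    """Hypothetical controller-side table of IP -> MAC bindings per logical switch (VNI)."""

    def __init__(self) -> None:
        self.bindings = {}  # (vni, ip) -> mac

    def report(self, vni: int, ip: str, mac: str) -> None:
        # Hosts report the bindings they learn for their local VMs.
        self.bindings[(vni, ip)] = mac

    def lookup(self, vni: int, ip: str):
        return self.bindings.get((vni, ip))


def handle_arp_request(table: ControllerArpTable, vni: int, target_ip: str):
    """Answer locally if the binding is known; otherwise fall back to flooding."""
    mac = table.lookup(vni, target_ip)
    if mac is not None:
        return ("local-reply", mac)  # no broadcast leaves the host
    return ("flood", None)           # unknown target: normal L2 flooding


table = ControllerArpTable()
table.report(5001, "10.0.1.10", "00:50:56:aa:bb:cc")
print(handle_arp_request(table, 5001, "10.0.1.10"))  # ('local-reply', '00:50:56:aa:bb:cc')
print(handle_arp_request(table, 5001, "10.0.1.99"))  # ('flood', None)
```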

- VXLAN Primer

- Decouples connectivity in the logical space from the physical network infrastructure

- The physical network effectively becomes a backplane used to transport overlay traffic

- Solves many challenges:

- Agile/rapid application deployment –> the time required to provision the network infrastructure for a new application is often measured in days, if not weeks

- Workload Mobility –> In traditional data center designs, this requires extension of L2 domains (VLANs) across the entire data center network infrastructure. This affects the overall network scalability and potentially jeopardizes the resiliency of the design

- Large-scale multi-tenancy –> using VLANs to create isolated networks limits the maximum number of tenants that can be supported (4094 VLANs) –> this becomes a bottleneck for many cloud providers

- Virtual Extensible LAN (VXLAN) has become the “de-facto” standard overlay technology and is embraced by multiple vendors

- VXLAN Specification (coming soon)
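The multi-tenancy point comes down to field widths. An 802.1Q VLAN ID is 12 bits (4094 usable values), while the VXLAN Network Identifier (VNI) is 24 bits (about 16 million segments). The sketch below packs and parses the 8-byte VXLAN header as laid out in RFC 7348. The function names are illustrative.

```python
import struct

VXLAN_FLAG_I = 0x08  # "I" flag: the VNI field is valid (RFC 7348)


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8-bit flags + 24 reserved bits. Word 2: 24-bit VNI + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return vni_word >> 8


hdr = vxlan_header(5001)
print(hdr.hex())         # 0800000000138900
print(parse_vni(hdr))    # 5001
print(2**12 - 2, 2**24)  # 4094 16777216
```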

- ESXi Hypervisor with VDS

- VDS is now available on all VMware ESXi hypervisors, so its control and data plane interactions are central to the entire NSX architecture

- See VDS vSwitch and best practice

- User Space consists of software components that provide the control plane communication path to the NSX manager and the controller cluster nodes

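- vsfwd: communicates with NSX Manager over the message bus

- netcpa (UWA, User World Agent): communicates with the controller cluster nodes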

- Kernel space is composed of:

- DFW

- VDS

- VXLAN

- Routing

- NSX Edge Services Gateway

- NSX Edge is a multi-function, multi-use VM appliance for network virtualization

- Edge VM supports two distinct modes of operation:

- Active-Standby

- ECMP mode (up to 8 Edge VMs and up to 80 Gbps of traffic per DLR)

- Routing: provides centralized on-ramp/off-ramp routing between the logical networks deployed in the NSX domain and the external physical network infrastructure. Supports various dynamic routing protocols such as OSPF, iBGP, eBGP. Supports:

- active-standby

- ECMP

- NAT

- Firewall

- Load Balancing

- L2 and L3 VPNs

- DHCP server, DNS relay and IP address management (DDI). NSX 6.1 introduced DHCP relay.

- Is available in the following form factors:

- X-Large (6 vCPU, 8 GB RAM)

- Quad-Large (4 vCPU, 1 GB RAM)

- Large (2 vCPU, 1 GB RAM)

- Compact (1 vCPU, 512 MB RAM)
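ECMP mode spreads flows across up to 8 Edge VMs by hashing packet header fields, so every packet of a given flow takes the same path. The sketch below hashes the 5-tuple with SHA-256 purely for portability. Real routers and NSX components choose their own hash inputs and functions. The sketch also hints at why stateful services are not available in ECMP mode. The return direction of a flow is hashed separately (in practice, often by a different device) and can land on a different Edge, which would then hold no state for that connection. Edge names and addresses are hypothetical.

```python
import hashlib

MAX_ECMP_EDGES = 8  # ECMP limit for Edge VMs stated above


def pick_edge(flow: tuple, edges: list) -> str:
    """Map a flow 5-tuple to one Edge; the same flow always maps to the same Edge."""
    if not 1 <= len(edges) <= MAX_ECMP_EDGES:
        raise ValueError("ECMP supports 1 to 8 Edge VMs")
    key = "|".join(map(str, flow)).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return edges[bucket % len(edges)]


edges = [f"edge-{i}" for i in range(1, 9)]
forward = ("10.0.1.10", "203.0.113.5", "tcp", 50000, 443)
reverse = ("203.0.113.5", "10.0.1.10", "tcp", 443, 50000)
print(pick_edge(forward, edges), pick_edge(reverse, edges))
```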

- Transport Zone

- defines a collection of ESXi hosts that can communicate with each other across a physical network infrastructure. This communication happens over one or more interfaces defined as VXLAN Tunnel Endpoints (VTEPs)

- extends across one or more ESXi clusters and commonly defines a span of logical switches
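The relationship between transport zones, clusters and logical switch span can be expressed as a small data model. This is an illustrative sketch only. The class names, cluster names and VNI are hypothetical, and real membership is configured in NSX Manager.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransportZone:
    name: str
    clusters: frozenset  # ESXi clusters whose hosts share VTEP connectivity


@dataclass(frozen=True)
class LogicalSwitch:
    name: str
    vni: int
    zone: TransportZone  # the logical switch spans exactly this zone


def can_attach(switch: LogicalSwitch, host_cluster: str) -> bool:
    """A VM can join the logical switch only if its host's cluster is in the zone."""
    return host_cluster in switch.zone.clusters


tz = TransportZone("tz-global", frozenset({"compute-a", "compute-b", "edge"}))
web = LogicalSwitch("web-tier", 5001, tz)
print(can_attach(web, "compute-b"))   # True
print(can_attach(web, "management"))  # False
```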

- DFW

- Provides L2-L4 stateful firewall services to any workload in the NSX environment

- DFW is activated as soon as the host preparation process completes (a VM that does not require DFW services can be added to the exclusion list)

- By default, NSX Manager, NSX Controllers, and Edge services gateways are automatically excluded from the DFW function

- The DFW system architecture is based on 3 distinct entities, each with a clearly defined role

- DFW Mechanism (Coming soon)
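The stateful, first-match behaviour of an L2-L4 firewall such as the DFW can be sketched under a few assumptions: a simple ordered rule list, a per-VM exclusion list, and a connection table that admits return traffic. This is a conceptual model, not the DFW's implementation, which runs in the ESXi kernel at the vNIC level. Class names, VM names and the sample rule are hypothetical. The `default_action` parameter stands in for the default rule. NSX for vSphere ships with an allow default rule, and the sketch uses block to model a "default deny" micro-segmentation design.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional

# Mirrors the default exclusions described above (VM names are hypothetical).
EXCLUSION_LIST = {"nsx-manager", "nsx-controller-1", "esg-01"}


@dataclass
class Rule:
    name: str
    src: str                 # CIDR, e.g. "0.0.0.0/0" for any
    dst: str
    proto: str               # "tcp", "udp" or "any"
    dst_port: Optional[int]  # None means any port
    action: str              # "allow" or "block"

    def matches(self, pkt: dict) -> bool:
        return (ip_address(pkt["src"]) in ip_network(self.src)
                and ip_address(pkt["dst"]) in ip_network(self.dst)
                and self.proto in ("any", pkt["proto"])
                and self.dst_port in (None, pkt["dst_port"]))


class StatefulFirewallSketch:
    """First-match rule table plus a connection table (simplified)."""

    def __init__(self, rules: list, default_action: str = "block") -> None:
        self.rules = rules
        self.default_action = default_action
        self.connections = set()

    def process(self, vm: str, pkt: dict) -> str:
        if vm in EXCLUSION_LIST:
            return "excluded"  # DFW is not applied to this VM
        flow = (pkt["src"], pkt["dst"], pkt["proto"], pkt["src_port"], pkt["dst_port"])
        reply = (pkt["dst"], pkt["src"], pkt["proto"], pkt["dst_port"], pkt["src_port"])
        if flow in self.connections or reply in self.connections:
            return "allow"  # packet belongs to an established connection
        action = next((r.action for r in self.rules if r.matches(pkt)), self.default_action)
        if action == "allow":
            self.connections.add(flow)  # remember the flow for return traffic
        return action


dfw = StatefulFirewallSketch([Rule("web-https", "0.0.0.0/0", "10.0.1.0/24", "tcp", 443, "allow")])
request = {"src": "192.168.5.20", "dst": "10.0.1.10", "proto": "tcp", "src_port": 50000, "dst_port": 443}
response = {"src": "10.0.1.10", "dst": "192.168.5.20", "proto": "tcp", "src_port": 443, "dst_port": 50000}
ssh = {"src": "192.168.5.20", "dst": "10.0.1.10", "proto": "tcp", "src_port": 50001, "dst_port": 22}
print(dfw.process("web-01", request))   # allow (matches web-https)
print(dfw.process("web-01", response))  # allow (established connection)
print(dfw.process("web-01", ssh))       # block (default action)
```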

Compare and contrast with advantages/disadvantages of topologies (star, ring, etc.) as well as scaling limitations

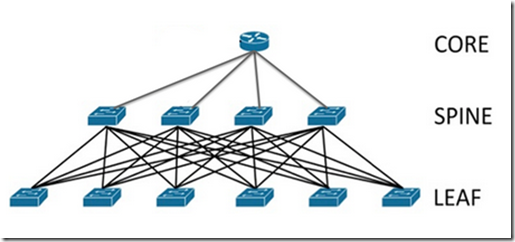

Logical networks implemented by NSX using VXLAN overlay technology can be deployed over common data center network topologies. The NSX design guide addresses the requirements for the physical network and examines design considerations for deploying the NSX network virtualization solution.

NSX enables a multicast-free, controller-based VXLAN overlay network. Its flexibility allows it to work with:

- Any type of physical topology – PoD, routed, or spine-leaf

- Any vendor physical switch

- Any install base with any topology – L2, L3, or a converged topology mixture

- Any fabric where proprietary controls or interactions do not require specific considerations

The only 2 requirements are:

- IP connectivity

- Jumbo MTU support (VXLAN adds 50 bytes to each original frame –> an MTU of at least 1600 bytes is required on the transport network).
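The 50-byte figure can be checked with a short calculation, assuming an IPv4 underlay and a 1500-byte guest MTU. The overhead is the outer IPv4 header (20) + UDP (8) + VXLAN (8) + the encapsulated inner Ethernet header (14). The outer Ethernet header is not counted because the MTU limits the IP packet, not the frame. Constant names are illustrative.

```python
# Per-packet overhead counted against the transport network's IP MTU.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14  # the guest's own Ethernet header is carried inside
INNER_DOT1Q_TAG = 4         # only if the guest frame carries an 802.1Q tag


def required_transport_mtu(guest_mtu: int = 1500, inner_vlan_tag: bool = False) -> int:
    overhead = OUTER_IPV4_HEADER + OUTER_UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER
    if inner_vlan_tag:
        overhead += INNER_DOT1Q_TAG
    return guest_mtu + overhead


print(required_transport_mtu())                     # 1550
print(required_transport_mtu(inner_vlan_tag=True))  # 1554
print(required_transport_mtu() <= 1600)             # True
```

The result is 1550 bytes, or 1554 with an inner VLAN tag. This shows why 1600 bytes is a comfortable minimum with some headroom rather than an exact figure.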

Physical topologies:

- Star

- each network host/switch is connected to a central switch with a point-to-point connection (the peripheral nodes are also called “spoke” nodes)

- Advantages

- If one node or its connection breaks it doesn’t affect the other nodes and connections.

- Nodes can be added or removed without disturbing the network

- Disadvantages

- An expensive network layout to install because of the amount of cables needed

- Central switch is a single point of failure

- Source: https://en.wikipedia.org/wiki/Star_network

- Ring

- each node connects to exactly two other nodes, forming a single continuous pathway for signals through each node

- Rings can be unidirectional, with all traffic travelling either clockwise or anticlockwise around the ring, or bidirectional

- Advantages

- Performs better than a bus topology under heavy network load, because load is balanced between nodes

- Does not require a central node to manage the connectivity between nodes

- Point-to-point line configuration makes it easy to identify and isolate faults

- Disadvantages

- One malfunctioning node can create problems for the entire network

- Moving, adding and changing the nodes can affect the network

- Communication delay is directly proportional to number of nodes in the network

- Bandwidth is shared on all links between nodes

- Adding a node requires ring shutdown and reconfiguration

- Source: https://en.wikipedia.org/wiki/Ring_network

- Mesh

- A mesh network is a local network topology in which the infrastructure nodes (i.e. bridges, switches and other infrastructure devices) connect directly, dynamically and non-hierarchically to as many other nodes as possible and cooperate with one another to efficiently route data from/to clients

- This lack of dependency on one node allows for every node to participate in the relay of information

- Mesh networks dynamically self-organize and self-configure, which can reduce installation overhead

- Advantages

- The ability to self-configure enables dynamic distribution of workloads, particularly in the event that a few nodes should fail. This in turn contributes to fault-tolerance and reduced maintenance costs.

- Disadvantages

- Star and ring topologies are fairly vendor-neutral because nodes communicate using standard protocols. Mesh networks tend to create more vendor dependency, because the protocols they rely on are not always implemented interoperably by all switch vendors.

- Source: https://en.wikipedia.org/wiki/Mesh_networking

- Leaf-Spine

- Leaf-spine is a two-layer network topology composed of leaf switches and spine switches.

- The topology is composed of leaf switches (to which servers and storage connect) and spine switches (to which leaf switches connect). Leaf switches mesh into the spine, forming the access layer that delivers network connection points for servers

- Can be layer 2 or layer 3 depending on the type of switch utilized to realize the deployment (the packet could be switched or routed)

- Advantages:

- Latency and bottlenecks are minimized because each payload only has to travel to a spine switch and another leaf switch to reach its endpoint.

- Source: http://searchdatacenter.techtarget.com/definition/Leaf-spine
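The scaling trade-offs between these topologies are easiest to compare by counting links and worst-case hops. The sketch below does this under three simple assumptions:

- the ring is bidirectional;
- a full mesh is the upper bound for the mesh case, although real mesh networks are often partial;
- in the leaf-spine fabric, every leaf connects to every spine.

The function names are illustrative.

```python
def star_links(nodes: int) -> int:
    """Central switch plus (nodes - 1) spokes, one link per spoke."""
    return nodes - 1


def ring_links(nodes: int) -> int:
    return nodes


def ring_worst_case_hops(nodes: int, bidirectional: bool = True) -> int:
    """Hops between the two farthest nodes; grows linearly with ring size."""
    return nodes // 2 if bidirectional else nodes - 1


def full_mesh_links(nodes: int) -> int:
    """Upper bound for a mesh: every node linked to every other node."""
    return nodes * (nodes - 1) // 2


def leaf_spine_links(leaves: int, spines: int) -> int:
    """Every leaf connects to every spine."""
    return leaves * spines


def leaf_to_leaf_paths(spines: int) -> int:
    """One equal-cost leaf -> spine -> leaf path per spine."""
    return spines


LEAF_TO_LEAF_HOPS = 2  # leaf -> spine -> leaf, regardless of fabric size

for n in (8, 32):
    print(f"{n} nodes: star={star_links(n)}, ring={ring_links(n)} "
          f"(worst case {ring_worst_case_hops(n)} hops), full mesh={full_mesh_links(n)}")
print(f"16 leaves x 4 spines: {leaf_spine_links(16, 4)} links, "
      f"{LEAF_TO_LEAF_HOPS} hops, {leaf_to_leaf_paths(4)} equal-cost paths")
```

Full-mesh cabling grows quadratically and ring latency grows linearly with node count. Leaf-spine keeps leaf-to-leaf traffic at a constant two hops and adds one equal-cost path per spine as spines are added.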

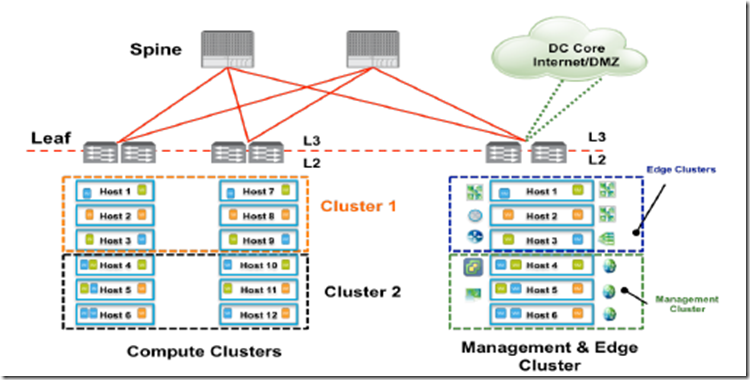

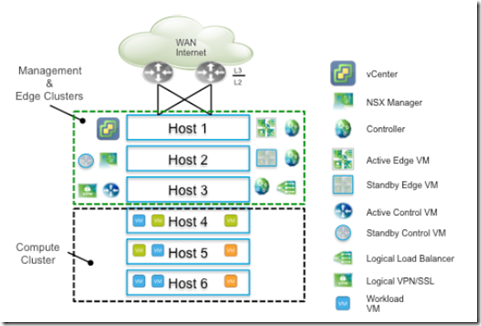

Compare and contrast VMware NSX data center deployment models

- Small design: a small-scale design can start with a single cluster of a few ESXi hosts, hosting the management, compute, and edge components along with the respective workloads. This is commonly called a “datacenter in a rack” design and consists of a pair of network devices directly connected to the WAN or Internet, serving a few applications.

- Medium design: a medium-size design allows for future growth. It assumes more than one cluster, so a decision is required on which clusters can be combined while still making optimal use of the underlying resources.

- The design considerations for medium size deployment are as follows:

- A vSphere Standard edition license is assumed; it now supports VDS, but only for NSX deployments.

- The Edge gateway can serve the overall needs of a medium business: load balancer, integrated VPN, SSL client access, and firewall services

- Bandwidth requirements vary but are typically not greater than 10 Gbps. The Edge VMs can be deployed as an active-standby pair, placed automatically on two different hosts with anti-affinity for availability. The large size (2 vCPU) Edge VM is recommended, and it can grow to quad-large (4 vCPU) if line-rate throughput is desired

- If throughput greater than 10 Gbps is desired, convert the active-standby gateway to ECMP mode with the same vCPU requirement; however, stateful services cannot be enabled in ECMP mode. Instead, enable the load balancer or VPN service on an Edge in the compute cluster and use the DFW for micro-segmentation.

- In ECMP-based designs, the active DLR control VM should not be hosted on the same host as an active Edge services gateway. Re-evaluate DRS and anti-affinity rules when an Edge VM is converted from active-standby to ECMP mode, and consider tuning routing peer timers for faster convergence.

- Resource reservations are key to maintaining CPU SLAs for NSX components, specifically the Edge services gateway

- Recommendations for separate management, compute, and edge should be considered for the following conditions:

- Future hosts growth

- Multi-site expansion

- Multiple vCenter management

- Use of SRM

- Large scale design: Large-scale deployment has distinct characteristics that mandate separation of clusters, diversified rack/physical layout, and consideration that is beyond the scope of this document.

Prepare a vSphere implementation for NSX

Design considerations for NSX security services:

- Three Pillars of Micro-Segmentation:

- Prepare Host in Compute and Edge Clusters:

- Datacenter designs generally involve 3 different types of clusters: management, compute and edge. VDI deployments may also have desktop clusters. Compute, desktop and edge clusters must be prepared for the distributed firewall.

- The management cluster enables operational tools, management tools, and security management tools to communicate effectively with all guest VMs

- A separation of security policy between management components and workload is desired

- The management cluster can be prepared with the security VIB in small environments where the edge and management clusters are combined

- Account for vMotion of guest VMs in DC:

- Host preparation must cover not only the clusters/hosts currently running workloads, but also any host that workloads can vMotion to

- vMotion Across Hosts in the Same Cluster (vSphere 5.x, 6.0): Ensure that when new hosts are added to a cluster, they are monitored for VIB deployment.

- vMotion Across Clusters (vSphere 5.x, 6.0): Ensure that every cluster in the same vSphere datacenter that workloads can vMotion into is also prepared for the distributed firewall

- vMotion Across vCenters. (vSphere 6.0 only): With vSphere 6.0, it is possible to vMotion guest VMs across vCenter domains

- Enable Specific IP Discovery Mechanisms for Guest VMs:

- NSX allows distributed firewall rules to be written in terms of grouping objects that evaluate to virtual machines rather than IP addresses

- Enable at least one of these IP discovery mechanisms:

- Deploy VMware Tools in the guest VM.

- Enable DHCP snooping (NSX 6.2.1 and above)

- Enable ARP snooping (NSX 6.2.1 and above)

- Manually authorize IP addresses for each VM

- Enable trust on first use of IP address for a VM.
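An IP discovery mechanism matters because a rule written against a group of VMs can only be enforced once each member's IP address is known. The sketch below models that translation step, plus a trust-on-first-use policy. The binding data, mechanism labels and function names are hypothetical, and NSX performs this resolution internally.

```python
# Hypothetical discovered bindings: VM -> list of (IP, discovery mechanism).
BINDINGS = {
    "web-01": [("10.0.1.10", "vmtools")],
    "web-02": [("10.0.1.11", "arp-snooping")],
    "web-03": [],  # no mechanism has reported an IP yet
}


def resolve_group(members: list, bindings: dict, enabled: set) -> set:
    """Translate a VM-based group into the IPs the firewall can enforce on."""
    ips = set()
    for vm in members:
        for ip, mechanism in bindings.get(vm, []):
            if mechanism in enabled:
                ips.add(ip)
    return ips


def trust_on_first_use(observed: list) -> dict:
    """Keep the first IP seen for each VM; ignore later, different claims."""
    trusted = {}
    for vm, ip in observed:
        trusted.setdefault(vm, ip)
    return trusted


web_group = ["web-01", "web-02", "web-03"]
print(sorted(resolve_group(web_group, BINDINGS, {"vmtools"})))
print(sorted(resolve_group(web_group, BINDINGS, {"vmtools", "arp-snooping"})))
print(trust_on_first_use([("web-03", "10.0.1.12"), ("web-03", "10.0.1.66")]))
```

In the example, `web-03` contributes nothing to the group until an enabled mechanism reports its address. Until then, rules written for the group do not yet cover it.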