Workload balancing is another vSphere feature. It uses vMotion and Storage vMotion (svMotion) to increase system efficiency, and in some cases it can also save costs.

Sources:

- https://pubs.vmware.com/vsphere-60/index.jsp#com.vmware.vsphere.resmgmt.doc/GUID-98BD5A8A-260A-494F-BAAE-74781F5C4B87.html

- https://blogs.vmware.com/vsphere/2016/05/load-balancing-vsphere-clusters-with-drs.html (thanks to Matthew Meyer)

- https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1007485

Resource management

Resource types are:

- CPU

- Memory

- Power

- Storage

- Network

Resource providers are:

- Hosts

- Clusters

- Datastore clusters

Resource consumers are VMs. Goals of resource management are:

- Performance isolation

- Efficient utilization

- Easy administration

DRS

A cluster is a collection of ESXi hosts and associated virtual machines with shared resources and a shared management interface. Before you can obtain the benefits of cluster-level resource management you must create a cluster and enable DRS.

Admission control and initial placement

When you attempt to power on a single virtual machine or a group of virtual machines in a DRS-enabled cluster, vCenter Server performs admission control. It checks that there are enough resources in the cluster to support the virtual machine(s).

If DRS is set to automatic, vCenter Server executes the placement recommendation automatically; otherwise, it displays the placement recommendation. In a DRS cluster you can perform:

- single virtual machine power on: can power on a single virtual machine and receive initial placement recommendations

- group power on: the initial placement recommendations for group power-on attempts are provided on a per-cluster basis
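
Admission control and initial placement can be pictured with a minimal Python sketch. It assumes a simplified model: admission control compares a VM's CPU and memory reservations with the cluster's total unreserved capacity, and placement picks a single host that can hold the whole reservation. `HostCapacity`, `admission_check`, `initial_placement` and the "most free memory" heuristic are hypothetical; real DRS placement also weighs rules, compatibility and current load.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HostCapacity:
    """Hypothetical view of the unreserved capacity left on one host."""
    name: str
    unreserved_cpu_mhz: int
    unreserved_mem_mb: int


def admission_check(cpu_res_mhz: int, mem_res_mb: int, hosts: List[HostCapacity]) -> bool:
    """Simplified admission control: does the cluster as a whole have room?"""
    total_cpu = sum(h.unreserved_cpu_mhz for h in hosts)
    total_mem = sum(h.unreserved_mem_mb for h in hosts)
    return total_cpu >= cpu_res_mhz and total_mem >= mem_res_mb


def initial_placement(cpu_res_mhz: int, mem_res_mb: int, hosts: List[HostCapacity]) -> Optional[str]:
    """Pick one host that can hold the full reservation (hypothetical heuristic)."""
    candidates = [
        h for h in hosts
        if h.unreserved_cpu_mhz >= cpu_res_mhz and h.unreserved_mem_mb >= mem_res_mb
    ]
    if not candidates:
        return None  # cluster totals may pass while no single host fits
    # Prefer the host with the most unreserved memory, then the most CPU.
    best = max(candidates, key=lambda h: (h.unreserved_mem_mb, h.unreserved_cpu_mhz))
    return best.name


if __name__ == "__main__":
    cluster = [HostCapacity("esx01", 2000, 4096), HostCapacity("esx02", 1000, 8192)]
    print(admission_check(2500, 6000, cluster))    # True: 3000 MHz / 12288 MB in total
    print(initial_placement(2500, 6000, cluster))  # None: no single host has 2500 MHz free
    print(initial_placement(500, 2048, cluster))   # esx02
```

The second call illustrates why a power-on can still fail when the cluster totals look sufficient: the reservation has to fit on one host.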

VM migration

Although DRS performs initial placements so that load is balanced across the cluster, changes in virtual machine load and resource availability can cause the cluster to become unbalanced. To correct such imbalances, DRS generates migration recommendations.

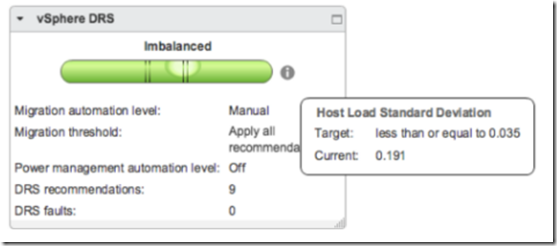

The DRS migration threshold allows you to specify which recommendations are generated and then applied (when the virtual machines involved in the recommendation are in fully automated mode) or shown (if in manual mode). This threshold is also a measure of how much cluster imbalance across host (CPU and memory) loads is acceptable.

The threshold can be set from 1 (most conservative) to 5 (most aggressive). A priority level for each migration recommendation is computed using the load imbalance metric of the cluster. This metric is displayed as Current Host Load Standard Deviation on the cluster’s Summary tab in the vSphere Web Client.

According to KB 1007485 (https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1007485), the priority level of a DRS migration recommendation can be calculated as:

Priority = 6 - ceil(LoadImbalanceMetric / 0.1 * sqrt(NumberOfHostsInCluster))
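
A small Python sketch of the KB expression, together with the migration threshold rule (threshold 1 acts only on priority-1 recommendations, threshold 5 on priorities 1 to 5). Two assumptions are worth flagging: the expression is evaluated with normal left-to-right precedence, that is (LoadImbalanceMetric / 0.1) * sqrt(N); if the KB means 0.1 * sqrt(N) as the divisor, move the parentheses. The `load_imbalance_metric` helper also assumes the population standard deviation of normalized host loads; the function names are illustrative.

```python
import math
import statistics
from typing import Sequence


def load_imbalance_metric(host_loads: Sequence[float]) -> float:
    """Current Host Load Standard Deviation (sketch).

    Assumption: each entry is a normalized host load (for example demand divided
    by capacity) and the population standard deviation is used.
    """
    return statistics.pstdev(host_loads)


def recommendation_priority(imbalance: float, num_hosts: int) -> int:
    """Evaluate 6 - ceil(LoadImbalanceMetric / 0.1 * sqrt(NumberOfHostsInCluster)).

    Read with left-to-right precedence: (imbalance / 0.1) * sqrt(num_hosts).
    The raw result is returned without clamping to the 1-5 range.
    """
    return 6 - math.ceil(imbalance / 0.1 * math.sqrt(num_hosts))


def applied_at_threshold(priority: int, threshold: int) -> bool:
    """Threshold N acts on recommendations with priority 1 through N."""
    return priority <= threshold


if __name__ == "__main__":
    p = recommendation_priority(0.1, 4)  # 6 - ceil(1.0 * 2.0) = 4
    print(p, applied_at_threshold(p, 3), applied_at_threshold(p, 5))  # 4 False True
```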

Current host load is calculated from two types of metrics:

- Memory (active)

- CPU

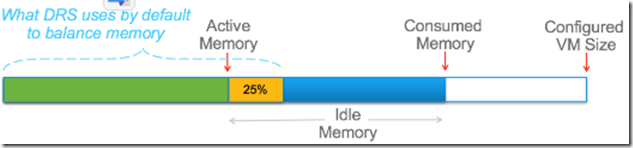

Consumed memory is the maximum amount of memory used by the VM at any point in its lifetime, even if the VM is not actually using most of this memory. Active memory is an estimation of the amount of memory that is currently actively being used by the VM; it is estimated using a memory sampling algorithm which is run every 5 minutes.

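DRS uses active memory when calculating host load. By default, the memory demand of a VM is (Active Memory) + (25% of Idle Memory), where idle memory is the consumed memory that is not active.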

vSphere 5.5 introduced the PercentIdleMBInMemDemand advanced setting, which changes the percentage of idle memory included in this calculation.
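
The memory demand formula translates directly into Python. This sketch assumes idle memory is consumed minus active memory, and uses a `percent_idle` parameter as a stand-in for PercentIdleMBInMemDemand (25 by default, matching the 25% above); the function names are hypothetical.

```python
from typing import Iterable, Tuple


def vm_memory_demand_mb(active_mb: float, consumed_mb: float, percent_idle: float = 25) -> float:
    """DRS-style memory demand for one VM (sketch).

    demand = active + percent_idle% of idle memory, where idle memory is assumed
    to be consumed memory that is not active. percent_idle stands in for the
    PercentIdleMBInMemDemand advanced setting.
    """
    if consumed_mb < active_mb:
        raise ValueError("consumed memory should not be smaller than active memory")
    idle_mb = consumed_mb - active_mb
    return active_mb + idle_mb * percent_idle / 100


def host_memory_demand_mb(vms: Iterable[Tuple[float, float]], percent_idle: float = 25) -> float:
    """Sum the demand of (active_mb, consumed_mb) pairs for the VMs on one host."""
    return sum(vm_memory_demand_mb(a, c, percent_idle) for a, c in vms)


if __name__ == "__main__":
    print(vm_memory_demand_mb(2048, 6144))       # 3072.0
    print(vm_memory_demand_mb(2048, 6144, 100))  # 6144.0, i.e. all consumed memory
```

Raising the percentage makes DRS treat more of the idle-but-consumed memory as demand, which is the behavior change the advanced setting controls.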

AggressiveCPUActive is another advanced setting first introduced in vSphere 5.5. It is intended to improve CPU load balancing in environments where the CPU is very active but spiky. If it is enabled, DRS switches from using the average CPU demand to the 80th percentile (that is, the second-highest value in the interval). This helps in situations where DRS does not generate recommendations to move VMs based on CPU demand because the average demand over the past 5-minute period is much lower than needed to trigger a recommendation.
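
A minimal Python sketch of the difference. It assumes one evaluation interval is represented as a list of per-minute demand samples; by default the average is returned, and with AggressiveCPUActive the second-highest sample is returned, following the description above. The function name and sample values are hypothetical.

```python
from typing import Sequence


def cpu_demand_for_drs(samples: Sequence[float], aggressive_cpu_active: bool = False) -> float:
    """CPU demand value used for one evaluation interval (sketch).

    Assumption: samples are the per-minute demand readings of the 5-minute
    interval. Default: average. AggressiveCPUActive: second-highest sample.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    if not aggressive_cpu_active:
        return sum(samples) / len(samples)
    ordered = sorted(samples, reverse=True)
    return ordered[1] if len(ordered) > 1 else ordered[0]


if __name__ == "__main__":
    spiky = [10, 12, 90, 11, 85]  # hypothetical demand values for a spiky workload
    print(cpu_demand_for_drs(spiky))        # 41.6
    print(cpu_demand_for_drs(spiky, True))  # 85
```

With the average, the two spikes are diluted to 41.6; the second-highest value keeps the spike visible to DRS.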

DRS Cluster Requirements:

- Shared storage (SAN or NAS)

- Shared VMFS:

  - Place the disks of all virtual machines on VMFS volumes that are accessible by the source and destination hosts

  - Ensure the VMFS volumes are large enough to store all virtual disks for your virtual machines

  - Ensure the VMFS volumes on the source and destination hosts use volume names, and that all virtual machines use those volume names to specify their virtual disks

- Processor:

  - To avoid limiting the capabilities of DRS, maximize the processor compatibility of the source and destination hosts in the cluster

  - Use EVC to help ensure vMotion compatibility for the hosts in a cluster

  - Use CPU compatibility masks for individual virtual machines (you can hide certain CPU features from the virtual machine and potentially prevent vMotion migrations from failing due to incompatible CPUs)

- vMotion:

  - vMotion does not support RDM and MSCS-clustered applications

  - vMotion requires a private Gigabit Ethernet network between hosts

- vFlash (optional):

  - DRS can manage virtual machines that have virtual flash reservations (DRS selects a host that has sufficient available virtual flash capacity to start the virtual machine)

DRS uses the cluster resource pool tree to manage workloads across the hosts. When DRS is turned off, the resource pool tree can be saved as a snapshot. A resource pool tree snapshot can be restored only if:

- DRS is turned on

- The snapshot is restored on the same cluster where it was taken

- No other resource pools are present in the cluster

The vSphere Web Client indicates whether a DRS cluster is valid, overcommitted (yellow), or invalid (red). A cluster can turn yellow or red for several reasons:

- A cluster might become overcommitted if a host fails

- A cluster becomes invalid if vCenter Server is unavailable and you power on virtual machines using the vSphere Web Client

- A cluster becomes invalid if the user reduces the reservation on a parent resource pool while a virtual machine is in the process of failing over

- If changes are made to hosts or virtual machines using the vSphere Web Client while vCenter Server is unavailable, those changes take effect. When vCenter Server becomes available again, you might find that clusters have turned red or yellow because cluster requirements are no longer met.

Power Resources

The vSphere Distributed Power Management (DPM) feature allows a DRS cluster to reduce its power consumption by powering hosts on and off based on cluster resource utilization. vSphere DPM can use one of three power management protocols to bring a host out of standby mode:

- Intelligent Platform Management Interface (IPMI)

- Hewlett-Packard Integrated Lights-Out (iLO)

- Wake-On-LAN (WOL)

It is possible to use event-based alarms in vCenter Server to monitor vSphere DPM:

- DrsEnteringStandbyModeEvent: Entering Standby mode (about to power off host)

- DrsEnteredStandbyModeEvent: Successfully entered Standby mode (host power off succeeded)

- DrsExitingStandbyModeEvent: Exiting Standby mode (about to power on the host)

- DrsExitedStandbyModeEvent: Successfully exited Standby mode (power on succeeded)

DRS affinity Rules

To control the placement of VMs, you can create two types of rules:

- VM-Host affinity Rules

- VM-VM Affinity Rules

With VM-VM Affinity rules it is possible to specify whether selected individual virtual machines should run on the same host or be kept on separate hosts. If two VM-VM affinity rules are in conflict, you cannot enable both. When two VM-VM affinity rules conflict, the older one takes precedence and the newer rule is disabled.
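
The "older rule wins" behavior can be sketched in Python. This is a simplified model, not the DRS implementation: two rules are assumed to conflict when one keeps VMs together, the other keeps them apart, and they share at least two VMs; transitive conflicts across several rules are not detected. `VmVmRule` and the helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class VmVmRule:
    name: str
    keep_together: bool  # True = affinity, False = anti-affinity
    vms: FrozenSet[str]


def rules_conflict(a: VmVmRule, b: VmVmRule) -> bool:
    """Simplified conflict test: opposite rule types that share two or more VMs."""
    return a.keep_together != b.keep_together and len(a.vms & b.vms) >= 2


def resolve_conflicts(rules_oldest_first: List[VmVmRule]) -> Tuple[List[str], List[str]]:
    """Keep older rules; disable a newer rule that conflicts with an enabled one."""
    enabled: List[VmVmRule] = []
    disabled: List[str] = []
    for rule in rules_oldest_first:
        if any(rules_conflict(rule, older) for older in enabled):
            disabled.append(rule.name)
        else:
            enabled.append(rule)
    return [r.name for r in enabled], disabled


if __name__ == "__main__":
    rules = [
        VmVmRule("web-together", True, frozenset({"web1", "web2"})),
        VmVmRule("web-apart", False, frozenset({"web1", "web2", "web3"})),
        VmVmRule("db-apart", False, frozenset({"db1", "db2"})),
    ]
    print(resolve_conflicts(rules))  # (['web-together', 'db-apart'], ['web-apart'])
```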

A VM-Host affinity rule specifies whether or not the members of a selected virtual machine DRS group can run on the members of a specific host DRS group.

When you create a VM-Host affinity rule, its ability to function in relation to other rules is not checked, so you can create a rule that conflicts with other rules you are using. Several cluster functions are not performed if doing so would violate a required affinity rule:

- DRS does not evacuate virtual machines to place a host in maintenance mode.

- DRS does not place virtual machines for power-on or load balance virtual machines

- vSphere HA does not perform failovers

- vSphere DPM does not optimize power management by placing hosts into standby mode
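
The first case above (maintenance mode evacuation blocked by a required rule) can be sketched in Python. The sketch assumes a hypothetical mapping from each VM to the host group of its "must run on" rule; VMs without a required rule may use any host, and capacity and CPU compatibility are deliberately ignored.

```python
from typing import Dict, Iterable, List, Set


def blocked_by_required_rules(
    vms_on_host: Iterable[str],
    host: str,
    must_run_on: Dict[str, Set[str]],
    cluster_hosts: Iterable[str],
) -> List[str]:
    """VMs that cannot leave `host` without breaking a required VM-Host rule.

    must_run_on maps a VM to the hosts its "must run on" rule allows
    (hypothetical structure). Capacity and compatibility are not checked.
    """
    hosts = set(cluster_hosts)
    blocked = []
    for vm in vms_on_host:
        allowed = must_run_on.get(vm, hosts) & hosts
        if not (allowed - {host}):
            blocked.append(vm)  # no other host satisfies the required rule
    return blocked


if __name__ == "__main__":
    rules = {"app1": {"esx01"}}  # app1 must run on esx01 only
    print(blocked_by_required_rules(["app1", "web1"], "esx01", rules, ["esx01", "esx02", "esx03"]))
    # ['app1']: DRS would not evacuate esx01 for maintenance mode
```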

sDRS

A datastore cluster is a collection of datastores with shared resources and a shared management interface; it is possible to use vSphere Storage DRS to manage storage resources.

As with clusters of hosts, you use datastore clusters to aggregate storage resources, which enables you to support resource allocation policies at the datastore cluster level. The following resource management capabilities are also available per datastore cluster:

- Space utilization load balancing

- I/O latency load balancing

- Anti-affinity rules

Storage DRS provides initial placement and ongoing balancing recommendations to datastores in a Storage DRS-enabled datastore cluster. Recommendations are made in accordance with space constraints and with respect to the goals of space and I/O load balancing.

vCenter Server displays migration recommendations on the Storage DRS Recommendations page for datastore clusters that have manual automation mode. Each recommendation includes the virtual machine name, the virtual disk name, the name of the datastore cluster, the source datastore, the destination datastore, and a reason for the recommendation:

- Balance datastore space use

- Balance datastore I/O load

Mandatory recommendations are made in the following situations:

- The datastore is out of space

- Anti-affinity or affinity rules are being violated

- The datastore is entering maintenance mode and must be evacuated

Storage DRS supports two automation levels: No Automation (Manual Mode) and Fully Automated. In the vSphere Web Client, you can use the following thresholds to set the aggressiveness level for Storage DRS:

- Space utilization

- I/O latency

In the advanced options, you can further tune the aggressiveness level:

- Space utilization difference (between datastores)

- I/O load balancing invocation interval

- I/O imbalance threshold
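
A simplified Python sketch of how the space and latency thresholds gate recommendations. The default values used here (80% space utilization, 5-point minimum utilization difference, 15 ms I/O latency) are assumptions for illustration; check the values shown for your datastore cluster. Real Storage DRS also considers I/O imbalance, the invocation interval and the cost of the move.

```python
def space_move_candidate(src_used_pct: float, dst_used_pct: float,
                         threshold_pct: float = 80.0, min_diff_pct: float = 5.0) -> bool:
    """Simplified space-balancing trigger between two datastores.

    A move is considered only if the source is above the space utilization
    threshold and the utilization difference exceeds the minimum difference.
    Default values are assumptions.
    """
    return src_used_pct > threshold_pct and (src_used_pct - dst_used_pct) > min_diff_pct


def io_latency_exceeded(src_latency_ms: float, threshold_ms: float = 15.0) -> bool:
    """Simplified I/O trigger: observed latency above the I/O latency threshold."""
    return src_latency_ms > threshold_ms


if __name__ == "__main__":
    print(space_move_candidate(85, 60))  # True
    print(space_move_candidate(85, 82))  # False: difference is only 3 points
    print(space_move_candidate(70, 10))  # False: source is below the threshold
    print(io_latency_exceeded(22.5))     # True
```

Lowering `threshold_pct` or `min_diff_pct` makes the sketch recommend moves earlier, which mirrors what a more aggressive setting does.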

Requirements:

- Datastore clusters must contain similar or interchangeable datastores. A datastore cluster can contain a mix of datastores with different sizes and I/O capacities, and can be from different arrays and vendors. However, the following types of datastores CANNOT coexist in a datastore cluster:

- NFS and VMFS datastores cannot be combined in the same datastore cluster

- Replicated datastores cannot be combined with non-replicated datastores in the same Storage-DRS-enabled datastore cluster

- Hosts attached to the datastore cluster must run ESXi 5.0 or later

- Datastores shared across multiple data centers cannot be included in a datastore cluster

- Best Practice: do not include datastores that have hardware acceleration enabled in the same datastore cluster as datastores that do not have hardware acceleration enabled.
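
The composition rules above can be checked with a short Python sketch. `DatastoreInfo` and `check_datastore_cluster` are hypothetical names; the sketch assumes Storage DRS is enabled (so the replication rule applies) and reports the hardware acceleration mix only as a best-practice warning.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class DatastoreInfo:
    """Hypothetical summary of one candidate datastore."""
    name: str
    fs_type: str                 # "VMFS" or "NFS"
    replicated: bool
    hw_accel: bool               # hardware acceleration enabled
    datacenters: FrozenSet[str]  # data centers the datastore is shared across


def check_datastore_cluster(datastores: List[DatastoreInfo],
                            host_versions: Dict[str, Tuple[int, int]]) -> Tuple[List[str], List[str]]:
    """Return (errors, warnings) for the requirements listed above (Storage DRS assumed on)."""
    errors: List[str] = []
    warnings: List[str] = []
    if len({d.fs_type for d in datastores}) > 1:
        errors.append("NFS and VMFS datastores are mixed")
    if len({d.replicated for d in datastores}) > 1:
        errors.append("replicated and non-replicated datastores are mixed")
    for d in datastores:
        if len(d.datacenters) > 1:
            errors.append(f"{d.name} is shared across data centers")
    for host, version in sorted(host_versions.items()):
        if version < (5, 0):
            errors.append(f"{host} runs ESXi older than 5.0")
    if len({d.hw_accel for d in datastores}) > 1:
        warnings.append("hardware-accelerated and non-accelerated datastores are mixed")
    return errors, warnings


if __name__ == "__main__":
    ds = [
        DatastoreInfo("ds01", "VMFS", False, True, frozenset({"dc1"})),
        DatastoreInfo("ds02", "NFS", False, False, frozenset({"dc1"})),
    ]
    print(check_datastore_cluster(ds, {"esx01": (6, 0), "esx02": (4, 1)}))
```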

Requirements to place a datastore in maintenance mode:

- Storage DRS is enabled on the datastore cluster

- No CD-ROM image files are stored on the datastore

- There are at least two datastores in the datastore cluster

Storage DRS affinity or anti-affinity rules might prevent a datastore from entering maintenance mode. When you enable the Ignore Affinity Rules for Maintenance option for a datastore cluster, vCenter Server ignores the Storage DRS affinity and anti-affinity rules that prevent a datastore from entering maintenance mode. To enable it, go to Advanced Options > Add and set IgnoreAffinityRulesForMaintenance to 1 (enabled) or 0 (disabled).
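
The maintenance mode requirements and the IgnoreAffinityRulesForMaintenance option can be combined into one Python checklist sketch. The function and parameter names are hypothetical; `ignore_affinity_rules_for_maintenance` mirrors the advanced option value (1 = enabled, 0 = disabled).

```python
from typing import List


def maintenance_mode_blockers(sdrs_enabled: bool,
                              cdrom_images_on_datastore: int,
                              datastores_in_cluster: int,
                              affinity_rules_block: bool,
                              ignore_affinity_rules_for_maintenance: int = 0) -> List[str]:
    """List the reasons a datastore cannot enter maintenance mode (sketch)."""
    blockers = []
    if not sdrs_enabled:
        blockers.append("Storage DRS is not enabled on the datastore cluster")
    if cdrom_images_on_datastore > 0:
        blockers.append("CD-ROM image files are stored on the datastore")
    if datastores_in_cluster < 2:
        blockers.append("the datastore cluster has fewer than two datastores")
    # Affinity rules only block evacuation when the advanced option is not set to 1.
    if affinity_rules_block and ignore_affinity_rules_for_maintenance != 1:
        blockers.append("Storage DRS affinity or anti-affinity rules prevent evacuation")
    return blockers


if __name__ == "__main__":
    print(maintenance_mode_blockers(True, 0, 3, True))     # one blocker: the rules
    print(maintenance_mode_blockers(True, 0, 3, True, 1))  # []: rules are ignored
```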

You can override the datastore cluster-wide automation level for individual virtual machines. You can also override default virtual disk affinity rules.

You can create a scheduled task to change Storage DRS settings for a datastore cluster so that migrations for fully automated datastore clusters are more likely to occur during off-peak hours, using the SDRS Scheduling option of Storage DRS.

You can create Storage DRS anti-affinity rules to control which virtual disks should not be placed on the same datastore within a datastore cluster. By default, a virtual machine’s virtual disks are kept together on the same datastore. Two types of anti-affinity rules are available, and they also apply when you move virtual disk files into a datastore cluster that already has affinity and anti-affinity rules:

- Inter-VM Anti-Affinity Rules: Specify which virtual machines should never be kept on the same datastore

- Intra-VM Anti-Affinity Rules: Specify which virtual disks associated with a particular virtual machine must be kept on different datastores
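
Both rule types can be checked against a placement map with a short Python sketch. The placement structure (VM name to a map of virtual disk name to datastore name) is a hypothetical input, and for inter-VM rules a VM is assumed to be "on" every datastore that holds one of its disks.

```python
from itertools import combinations
from typing import Dict, Iterable, List, Tuple

# Hypothetical placement map: VM name -> {virtual disk name: datastore name}
Placement = Dict[str, Dict[str, str]]


def inter_vm_violations(placement: Placement, rule_vms: Iterable[str]) -> List[Tuple[str, str]]:
    """Pairs of VMs from an inter-VM anti-affinity rule that share a datastore."""
    violations = []
    for a, b in combinations(sorted(rule_vms), 2):
        if set(placement[a].values()) & set(placement[b].values()):
            violations.append((a, b))
    return violations


def intra_vm_violations(placement: Placement, vm: str, rule_disks: Iterable[str]) -> List[Tuple[str, str]]:
    """Pairs of disks from an intra-VM anti-affinity rule stored on the same datastore."""
    first_disk_on: Dict[str, str] = {}
    violations = []
    for disk in rule_disks:
        ds = placement[vm][disk]
        if ds in first_disk_on:
            violations.append((first_disk_on[ds], disk))
        else:
            first_disk_on[ds] = disk
    return violations


if __name__ == "__main__":
    placement = {
        "db1": {"disk1": "ds1", "disk2": "ds1"},
        "db2": {"disk1": "ds1"},
        "web1": {"disk1": "ds2"},
    }
    print(inter_vm_violations(placement, ["db1", "db2", "web1"]))  # [('db1', 'db2')]
    print(intra_vm_violations(placement, "db1", ["disk1", "disk2"]))  # [('disk1', 'disk2')]
```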

To diagnose problems with Storage DRS, you can clear Storage DRS statistics before you manually run Storage DRS.

svMotion requirements:

- The host must be running a version of ESXi that supports Storage vMotion.

- The host must have write access to both the source datastore and the destination datastore

- The host must have enough free memory resources to accommodate Storage vMotion

- The destination datastore must have sufficient disk space

- The destination datastore must not be in maintenance mode or entering maintenance mode
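
The svMotion requirements read naturally as a preflight checklist. This Python sketch encodes them under simplified, hypothetical inputs (`DatastoreState`, boolean flags for host support and free memory); it does not query a real host.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class DatastoreState:
    """Hypothetical snapshot of a datastore for the checks below."""
    name: str
    free_gb: float
    in_or_entering_maintenance: bool = False


def svmotion_blockers(host_supports_svmotion: bool,
                      writable_datastores: Set[str],
                      host_has_free_memory: bool,
                      source: DatastoreState,
                      destination: DatastoreState,
                      vm_disks_gb: float) -> List[str]:
    """Return the requirements from the list above that are not met (sketch)."""
    blockers: List[str] = []
    if not host_supports_svmotion:
        blockers.append("host ESXi version does not support Storage vMotion")
    for ds in (source, destination):
        if ds.name not in writable_datastores:
            blockers.append(f"host has no write access to {ds.name}")
    if not host_has_free_memory:
        blockers.append("host lacks free memory for Storage vMotion")
    if destination.free_gb < vm_disks_gb:
        blockers.append(f"{destination.name} lacks free disk space")
    if destination.in_or_entering_maintenance:
        blockers.append(f"{destination.name} is in or entering maintenance mode")
    return blockers


if __name__ == "__main__":
    src = DatastoreState("ds01", 500)
    dst = DatastoreState("ds02", 40)
    print(svmotion_blockers(True, {"ds01", "ds02"}, True, src, dst, 100))
    # ['ds02 lacks free disk space']
```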

DRS Troubleshooting

Cluster Problems:

- Load Imbalance on Cluster: A cluster might become unbalanced because of uneven resource demands from virtual machines and unequal capacities of hosts.

  - Cause:

    - The migration threshold is too high

    - VM/VM or VM/Host DRS rules prevent virtual machines from being moved

    - DRS is disabled for one or more virtual machines

    - A device is mounted to one or more virtual machines, preventing DRS from moving the virtual machine in order to balance the load

    - Virtual machines are not compatible with the hosts to which DRS would move them

    - It would be more detrimental for the virtual machine’s performance to move it than for it to run where it is currently located

    - vMotion is not enabled or set up for the hosts in the cluster

- Cluster is Yellow: The cluster is yellow due to a shortage of resources.

  - Cause: A cluster can become yellow if host resources are removed from the cluster.

  - Solution: Add host resources to the cluster or reduce the resource pool reservations.

- Cluster is Red Because of Inconsistent Resource Pool: A DRS cluster becomes red when it is invalid. It may become red because the resource pool tree is not internally consistent.

  - Cause: This can occur if vCenter Server is unavailable or if resource pool settings are changed while a virtual machine is in a failover state.

  - Solution: Revert the associated changes or otherwise revise the resource pool settings.

- Cluster is Red Because Failover Capacity is Violated.

  - Cause: If a cluster enabled for HA loses so many resources that it can no longer fulfill its failover requirements, a message appears and the cluster’s status changes to red.

  - Solution: Review the list of configuration issues in the yellow box at the top of the cluster Summary page and address the issue that is causing the problem.

- No Hosts are Powered Off When Total Cluster Load is Low

  - Cause:

    - Extra capacity is needed for HA failover reservations

    - The MinPoweredOn{Cpu|Memory}Capacity advanced options settings need to be met

    - Virtual machines cannot be consolidated onto fewer hosts due to their resource reservations, VM/Host DRS rules, VM/VM DRS rules, not being DRS-enabled, or not being compatible with the hosts having available capacity

    - Loads are unstable

    - The DRS migration threshold is at the highest setting and only allows mandatory moves

    - vMotion is unable to run because it is not configured

    - DPM is disabled on the hosts that might be powered off

    - Hosts are not compatible for virtual machines to be moved to another host

    - The host does not have Wake On LAN, IPMI, or iLO technology

- Hosts are Powered Off When Total Cluster Load is High.

  - Cause: This occurs when the total cluster load is too high.

  - Solution: Reduce the cluster load.

- DRS Seldom or Never Performs vMotion Migrations

  - Cause:

    - DRS never performs vMotion migrations when:

      - DRS is disabled on the cluster

      - The hosts do not have shared storage

      - The hosts in the cluster do not contain a vMotion network

      - DRS is manual and no one has approved the migration

    - DRS seldom performs vMotion migrations when:

      - Loads are unstable, or vMotion takes a long time, or both, so a move is not appropriate

      - DRS seldom or never migrates virtual machines

      - The DRS migration threshold is set too high

    - DRS moves virtual machines for the following reasons:

      - Evacuation of a host that a user requested to enter maintenance or standby mode

      - VM/Host DRS rules or VM/VM DRS rules

      - Reservation violations

      - Load imbalance

      - Power management

Host Problems:

- DRS Recommends Host be Powered On to Increase Capacity When Total Cluster Load Is Low.

  - Cause:

    - The cluster is a DRS-HA cluster. Additional powered-on hosts are needed in order to provide more failover capability

    - Some hosts are overloaded and virtual machines on currently powered-on hosts can be moved to hosts in standby mode to balance the load

    - The capacity is needed to meet the MinPoweredOn{Cpu|Memory}Capacity advanced options

  - Solution: Power on the host.

- Total Cluster Load Is High: DRS does not power on the host even though the total cluster load is high.

  - Cause:

    - VM/VM DRS rules or VM/Host DRS rules prevent the virtual machine from being moved to this host

    - Virtual machines are pinned to their current hosts

    - DRS or DPM is in manual mode and the recommendations were not applied

    - No virtual machines on highly utilized hosts will be moved to that host

    - DPM is disabled on the host because of a user setting or because the host previously failed to exit standby mode successfully

- Total Cluster Load Is Low: DPM does not power off the host even though the total cluster load is low.

  - Cause:

    - DPM detected better candidates to power off

    - vSphere HA needs extra capacity for failover

    - The load is not low enough to trigger the host to power off

    - DPM projects that the load will increase

    - DPM is not enabled for the host

    - The DPM threshold is set too high

    - While DPM is enabled for the host, no suitable power-on mechanism is present for the host

    - DRS cannot evacuate the host

    - The DRS migration threshold is at the highest setting and only performs mandatory moves

- DRS Does Not Evacuate a Host Requested to Enter Maintenance or Standby Mode.

  - Cause: vSphere HA is enabled and evacuating this host might violate HA failover capacity.

- DRS Does Not Move Any Virtual Machines onto a Host: DRS does not recommend migrating virtual machines to a host that has been added to a DRS-enabled cluster.

  - Causes:

    - vMotion is not configured or enabled on this host

    - Virtual machines on other hosts are not compatible with this host

    - The host does not have sufficient resources for any virtual machine

    - Moving any virtual machines to this host would violate a VM/VM DRS rule or VM/Host DRS rule

    - This host is reserved for HA failover capacity

    - A device is mounted to the virtual machine

    - The vMotion threshold is too high

    - DRS is disabled for the virtual machines, so they cannot be moved onto the destination host

- DRS Does Not Move Any Virtual Machines from a Host

  - Cause:

    - vMotion is not configured or enabled on this host

    - DRS is disabled for the virtual machines on this host

    - Virtual machines on this host are not compatible with any other hosts

    - No other hosts have sufficient resources for any virtual machines on this host

    - Moving any virtual machines from this host would violate a VM/VM DRS rule or VM/Host DRS rule

    - DRS is disabled for one or more virtual machines on the host

    - A device is mounted to the virtual machine

VM Problems:

- Insufficient CPU or Memory Resources

  - Cause:

    - Cluster Yellow or Red: the capacity is insufficient to meet the resource reservations configured for all virtual machines and resource pools in the cluster

    - Resource Limit is Too Restrictive

    - Cluster is Overloaded

    - Host is Overloaded, under the following conditions:

      - The VM/VM DRS rules and VM/Host DRS rules require the current virtual machine-to-host mapping. If such rules are configured in the cluster, consider disabling one or more of them. Then run DRS and check whether the situation is corrected

      - DRS cannot move this virtual machine or enough of the other virtual machines to other hosts to free up capacity. DRS will not move a virtual machine for any of the following reasons:

        - DRS is disabled for the virtual machine

        - A host device is mounted to the virtual machine

        - Either of its resource reservations is so large that the virtual machine cannot run on any other host in the cluster

        - The virtual machine is not compatible with any other host in the cluster

      - Decrease the DRS migration threshold setting and check whether the situation is resolved

      - Increase the virtual machine’s reservation

- VM/VM DRS Rule or VM/Host DRS Rule Violated.

  - Solutions:

    - Check the DRS faults panel for faults associated with affinity rules

    - Compute the sum of the reservations of all the virtual machines in the affinity rule. If that value is greater than the available capacity on any host, the rule cannot be satisfied

    - Compute the sum of the reservations of their parent resource pools. If that value is greater than the available capacity of any host, the rule cannot be satisfied if the resources are obtained from a single host.

- Virtual Machine Power On Operation Fails. Solution: If the cluster does not have sufficient resources to power on a single virtual machine or any of the virtual machines in a group power-on attempt, check the resources required by the virtual machine against those available in the cluster or its parent resource pool. If necessary, reduce the reservations of the virtual machine to be powered-on, reduce the reservations of its sibling virtual machines, or increase the resources available in the cluster or its parent resource pool.

- DRS Does Not Move the Virtual Machine.

  - Cause:

    - DRS is disabled on the virtual machine

    - The virtual machine has a device mounted

    - The virtual machine is not compatible with any other hosts

    - No other hosts have a sufficient number of physical CPUs or capacity for each CPU for the virtual machine

    - No other hosts have sufficient CPU or memory resources to satisfy the reservations and required memory of this virtual machine

    - Moving the virtual machine will violate an affinity or anti-affinity rule

    - The DRS automation level of the virtual machine is manual and the user does not approve the migration recommendation

    - DRS will not move fault tolerance-enabled virtual machines
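
The reservation check suggested for a violated VM/VM rule (sum the reservations of the VMs in the rule and compare with each host's available capacity) can be sketched in Python. Inputs are hypothetical (CPU MHz, memory MB) tuples; an empty result means no single host can run the whole group, so a keep-together rule cannot be satisfied.

```python
from typing import Dict, List, Tuple


def hosts_fitting_affinity_group(reservations: List[Tuple[int, int]],
                                 host_available: Dict[str, Tuple[int, int]]) -> List[str]:
    """Hosts that could run every VM of a keep-together rule at once (sketch).

    reservations: (cpu_mhz, mem_mb) reservation of each VM in the rule.
    host_available: available (cpu_mhz, mem_mb) per host.
    """
    need_cpu = sum(cpu for cpu, _ in reservations)
    need_mem = sum(mem for _, mem in reservations)
    return sorted(
        host for host, (cpu, mem) in host_available.items()
        if cpu >= need_cpu and mem >= need_mem
    )


if __name__ == "__main__":
    group = [(1000, 2048), (1500, 4096)]  # needs 2500 MHz and 6144 MB together
    hosts = {"esx01": (3000, 8192), "esx02": (4000, 4096)}
    print(hosts_fitting_affinity_group(group, hosts))  # ['esx01']
```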