Amazon EC2 (Elastic Compute Cloud) provides resizable compute capacity in the cloud. It reduces the time required to obtain and boot new server instances to minutes, and provides the following features:

- Virtual computing environments, known as instances

- Preconfigured templates for your instances, known as Amazon Machine Images (AMIs), that package the bits you need for your server (including the operating system and additional software)

- Various configurations of CPU, memory, storage, and networking capacity for your instances, known as instance types

- Secure login information for your instances using key pairs (AWS stores the public key, and you store the private key in a secure place)

- Storage volumes for temporary data that are deleted when you stop, hibernate, or terminate your instance, known as instance store volumes

- Persistent storage volumes for your data using Amazon Elastic Block Store (Amazon EBS), known as Amazon EBS volumes

- Multiple physical locations for your resources, such as instances and Amazon EBS volumes, known as Regions and Availability Zones

- A firewall that enables you to specify the protocols, ports, and source IP ranges that can reach your instances using security groups

- Static IPv4 addresses for dynamic cloud computing, known as Elastic IP addresses

- Metadata, known as tags, that you can create and assign to your Amazon EC2 resources

- Virtual networks you can create that are logically isolated from the rest of the AWS Cloud and that you can optionally connect to your own network, known as virtual private clouds (VPCs)

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/concepts.html

Price Models

- On-demand:

- Pay a fixed rate by hour (or by second) with no commitments

- Ideal for development work, where instances can be stopped once development is finished

- No upfront or long-term payment

- Ideal for applications with short term, spiky or unpredictable workloads

- Reserved:

- Capacity reservation with a significant discount on the hourly charge for an instance.

- Terms of contracts are 1 year or 3 years.

- Tip: evaluate the running time before deciding between On-Demand and Reserved

- Ideal for applications with predictable usage

- Type

- Standard (up to 75% off On-Demand pricing). It’s not possible to convert one instance type to another with this type.

- Convertible (up to 54% off On-Demand pricing, with the capability to change the attributes of the Reserved Instance). It’s possible to convert one instance type to another.

- Scheduled Reserved instances: Decide time window to reserve.

- Spot

- Lets you bid your own price for spare instance capacity, which saves money if your application has flexible start and end times

- Suited to applications with flexible start and end times

- Suited to applications that are only feasible at very low compute prices

- Useful for urgent computing needs

- Dedicated hosts:

- Physical EC2 server dedicated for single customer use

- Reduces costs by letting you use existing server-bound software licenses (e.g. Oracle)

- Useful for regulatory requirements

- Can be purchased on-demand

- Spot Fleet is a collection of Spot Instances and, optionally, On-Demand Instances. To use a Spot Fleet, you create a Spot Fleet request that includes the target capacity, an optional On-Demand portion, one or more launch specifications for the instances, and the maximum price that you are willing to pay.

- There are two types of Spot Fleet requests:

- Request

- Maintain

- It’s possible to define the following strategies:

- capacityOptimized

- diversified

- lowestPrice

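- InstancePoolsToUseCount (a parameter used together with lowestPrice to spread Spot Instances across the given number of lowest-priced pools, rather than a strategy of its own)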

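The tip about evaluating running time before choosing between On-Demand and Reserved can be turned into a small calculation. The sketch below is only an illustration: the hourly rate and the discount are hypothetical numbers, and it assumes a Reserved Instance is paid for every hour of its term whether or not the instance runs.

```python
HOURS_PER_YEAR = 8760


def on_demand_cost(hourly_rate, hours_used):
    """On-Demand: pay only for the hours the instance actually runs."""
    return hourly_rate * hours_used


def reserved_cost(hourly_rate, discount, term_years=1):
    """Reserved: discounted rate, but charged for every hour of the term."""
    return hourly_rate * (1 - discount) * HOURS_PER_YEAR * term_years


def break_even_utilization(discount):
    """Fraction of the term the instance must run for both options to cost the same."""
    return 1 - discount


if __name__ == "__main__":
    rate = 0.10      # hypothetical On-Demand price in USD per hour
    discount = 0.40  # hypothetical Reserved discount
    hours = HOURS_PER_YEAR * break_even_utilization(discount)
    print(f"Break-even at about {hours:.0f} hours per year")
    print(f"On-Demand at break-even: {on_demand_cost(rate, hours):.2f} USD")
    print(f"Reserved for one year:   {reserved_cost(rate, discount):.2f} USD")
```

Under these assumptions, an instance that runs for less than the break-even fraction of the year is cheaper On-Demand, and one that runs for more is cheaper Reserved.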

Types of EC2 instances

- F1 (FPGA): customizable hardware acceleration with field-programmable gate arrays (FPGAs). (Intel Xeon processors with NVMe storage and Enhanced Network Support).

- Use cases: Genomics research, financial analytics, real-time video processing, big data search and analysis, and security

- I3 (IOPS): Storage Optimized instances with NVMe SSD-backed instance storage, optimized for low latency and very high random I/O performance.

- I3 also offers Bare Metal instances (i3.metal), powered by the Nitro System, for non-virtualized workloads

- Use cases: NoSQL databases (e.g. Cassandra, MongoDB, Redis), in-memory databases (e.g. Aerospike), scale-out transactional databases, data warehousing, Elasticsearch, analytics workloads.

- G3 (Graphics): optimized for graphics-intensive applications (Intel Xeon processors, NVIDIA Tesla M60 GPUs, and Enhanced Networking using the Elastic Network Adapter (ENA))

- Use cases: 3D visualizations, graphics-intensive remote workstation, 3D rendering, application streaming, video encoding, and other server-side graphics workloads.

- H1 (High Disk Throughput): up to 16 TB of HDD-based local storage with high disk throughput, and a balance of computing and memory.

- Use cases: MapReduce-based workloads, distributed file systems such as HDFS and MapR-FS, network file systems, log or data processing applications such as Apache Kafka, and big data workload clusters.

- T3 (Cheap general purpose like T2.micro): burstable general-purpose instance type that provides a baseline level of CPU performance with the ability to burst CPU usage at any time for as long as required

- T3 instances accumulate CPU credits when a workload is operating below the baseline threshold. Each earned CPU credit provides the T3 instance the opportunity to burst with the performance of a full CPU core for one minute when needed

- Use cases: Micro-services, low-latency interactive applications, small and medium databases, virtual desktops, development environments, code repositories, and business-critical applications

- D2 (Density): instances feature up to 48 TB of HDD-based local storage

- Use cases: Massively Parallel Processing (MPP) data warehousing, MapReduce and Hadoop distributed computing, distributed file systems, network file systems, log or data-processing applications.

- R5 (RAM): optimized for memory-intensive applications

- Use cases: Memory-intensive applications such as high-performance databases, distributed web scale in-memory caches, mid-size in-memory databases, real-time big data analytics, and other enterprise applications.

- M5 (Main choice for general apps): the latest generation of General Purpose Instances powered by Intel Xeon® Platinum 8175M processors.

- Use cases: Small and mid-size databases, data processing tasks that require additional memory, caching fleets, and running backend servers for SAP, Microsoft SharePoint, cluster computing, and other enterprise applications

- C5 (Compute): optimized for compute-intensive workloads, delivering cost-effective high performance at a low price per compute ratio

- Use cases: High-performance web servers, scientific modeling, batch processing, distributed analytics, high-performance computing (HPC), machine/deep learning inference, ad serving, highly scalable multiplayer gaming, and video encoding.

- P3 (Graphics): high performance compute in the cloud with up to 8 NVIDIA® V100 Tensor Core GPUs and up to 100 Gbps of networking throughput for machine learning and HPC applications.

- Use cases: Machine/Deep learning, high-performance computing, computational fluid dynamics, computational finance, seismic analysis, speech recognition, autonomous vehicles, drug discovery.

- X1 (Extreme memory): optimized for large-scale, enterprise-class, and in-memory applications, and offer one of the lowest prices per GiB of RAM among Amazon EC2 instance types

- Use cases: In-memory databases (e.g. SAP HANA), big data processing engines (e.g. Apache Spark or Presto), high-performance computing (HPC). Certified by SAP to run Business Warehouse on HANA (BW), Data Mart Solutions on HANA, Business Suite on HANA (SoH), Business Suite S/4HANA.

- z1d (Extreme memory and CPU): offers both high compute capacity and a high memory footprint. High-frequency z1d instances deliver a sustained all-core frequency of up to 4.0 GHz, the fastest of any cloud instance.

- Use cases: Ideal for electronic design automation (EDA) and certain relational database workloads with high per-core licensing costs.

- A1 (ARM): significant cost savings and are ideally suited for scale-out and Arm-based workloads that are supported by the extensive Arm ecosystem

- Use cases: Scale-out workloads such as web servers, containerized microservices, caching fleets, and distributed data stores, as well as development environments

- U series: bare metal instances

Source: https://aws.amazon.com/ec2/instance-types/

Launching EC2 instance

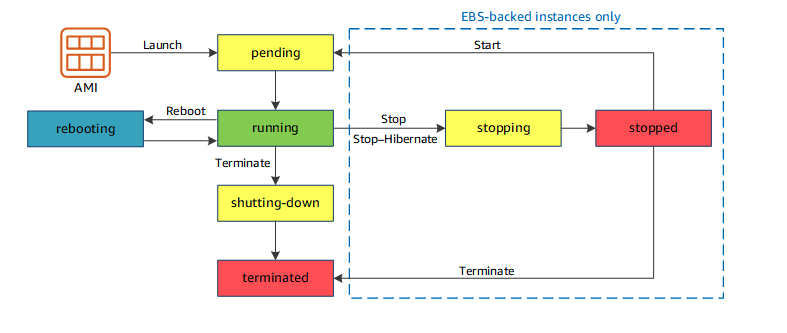

The following table describes the instance states shown in the lifecycle diagram:

| Instance State | Description | Instance usage billing |
|---|---|---|
| Pending | The instance is preparing to enter the running state. | Not billed |
| Running | The instance is running and ready for use. | Billed |
| Stopping | The instance is preparing to be stopped or stop-hibernated. | Not billed if preparing to stop; billed if preparing to hibernate |
| Stopped | The instance is shut down and cannot be used. The instance can be started at any time. | Not billed |
| Shutting-down | The instance is preparing to be terminated. | Not billed |
| Terminated | The instance has been permanently deleted and cannot be started. | Not billed. Reserved Instances are billed until the end of their term according to their payment option. |

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-instance-lifecycle.html
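The billing column of the lifecycle table can be expressed as a small lookup. This is a minimal sketch for reasoning about the table, not an AWS API: the function name `is_billed` and the `hibernating` flag are made up for the example, and it only covers instance usage charges (not EBS storage or Reserved Instance terms).

```python
# Instance usage billing per state, following the lifecycle table above.
BILLED_BY_STATE = {
    "pending": False,
    "running": True,
    "stopped": False,
    "shutting-down": False,
    "terminated": False,
}


def is_billed(state, hibernating=False):
    """Return True if instance usage is billed in the given state.

    The stopping state is billed only when the instance is preparing
    to hibernate, which is why it needs the extra flag.
    """
    state = state.lower()
    if state == "stopping":
        return hibernating
    return BILLED_BY_STATE[state]


if __name__ == "__main__":
    for name in ("pending", "running", "stopping", "stopped"):
        print(name, is_billed(name))
    print("stopping (hibernate)", is_billed("stopping", hibernating=True))
```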

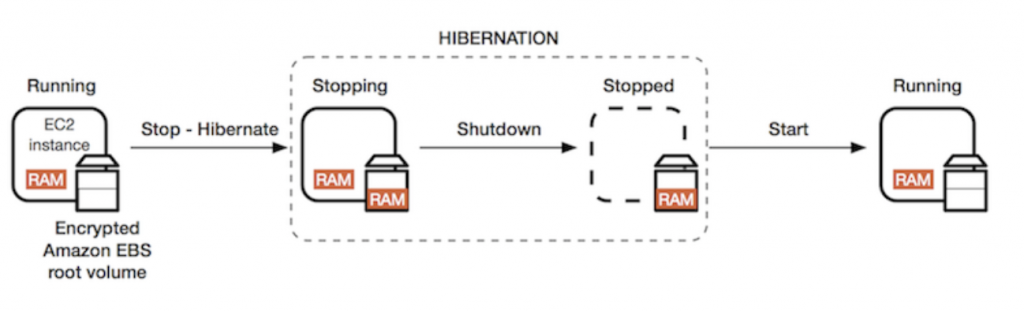

When you hibernate an instance, Amazon EC2 signals the operating system to perform hibernation (suspend-to-disk). Hibernation saves the contents of the instance memory (RAM) to your Amazon Elastic Block Store (Amazon EBS) root volume (instance RAM must be less than 150 GB). Amazon EC2 persists the instance’s EBS root volume and any attached EBS data volumes.

You’re not charged for instance usage for a hibernated instance when it is in the stopped state. You are charged for instance usage while the instance is in the stopping state, when the contents of the RAM are transferred to the EBS root volume.

Constraints:

- Instance RAM < 150GB

- Instance family must be: C3,C4,C5,M3,M4,M5,R3,R4,R5

- Available for Windows, Amazon Linux, Ubuntu

- Hibernation state cannot exceed 60 days

- Available for On-demand and Reserved instances

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/Hibernate.html
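The constraints listed above can be collected into a simple pre-flight check. The sketch below encodes only the limits stated in these notes (they may differ from what AWS supports today), and the function name and its parameters are hypothetical.

```python
# Limits taken from the constraint list above; treat them as a snapshot in time.
SUPPORTED_FAMILIES = {"C3", "C4", "C5", "M3", "M4", "M5", "R3", "R4", "R5"}
SUPPORTED_SYSTEMS = {"windows", "amazon linux", "ubuntu"}
SUPPORTED_PURCHASES = {"on-demand", "reserved"}
MAX_RAM_GB = 150
MAX_HIBERNATION_DAYS = 60


def hibernation_problems(instance_type, ram_gb, os_name, purchase, days=0):
    """Return a list of reasons why hibernation would not be allowed."""
    problems = []
    family = instance_type.split(".")[0].upper()  # "m5.large" -> "M5"
    if ram_gb >= MAX_RAM_GB:
        problems.append(f"RAM must be below {MAX_RAM_GB} GB")
    if family not in SUPPORTED_FAMILIES:
        problems.append(f"instance family {family} is not supported")
    if os_name.lower() not in SUPPORTED_SYSTEMS:
        problems.append(f"operating system {os_name} is not supported")
    if purchase.lower() not in SUPPORTED_PURCHASES:
        problems.append(f"purchase option {purchase} is not supported")
    if days > MAX_HIBERNATION_DAYS:
        problems.append(f"hibernation cannot exceed {MAX_HIBERNATION_DAYS} days")
    return problems


if __name__ == "__main__":
    print(hibernation_problems("m5.large", 8, "Ubuntu", "On-Demand"))
    print(hibernation_problems("t3.micro", 1, "Ubuntu", "Spot"))
```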

Creation of an AWS EC2 instance:

- Choose AMI (Amazon machine image):

- AWS Marketplace

- My AMIs

- Community AMIs

- Choose Instance Type

- Configure instance

- Number of instances (optionally launch into an Auto Scaling group)

- Purchase options to request a Spot instance

- Network:

- VPC (it’s possible to create a new one directly)

- Subnet (depends on the VPC and availability zones)

- Auto-assign public IP

- Placement group (ideal for HPC in order to reduce network latency and increase network speed between instances)

- Cluster placement group: instances are placed in a single Availability Zone (suited to low network latency and high throughput)

- Spread placement group: instances are spread across distinct underlying hardware, with every EC2 instance residing on different hardware (useful for critical applications that must remain available in case of hardware failure)

- Partition placement group: Amazon EC2 divides each group into logical segments called partitions and ensures that each partition within a placement group has its own set of racks (useful for deploying large distributed and replicated workloads, such as HDFS, HBase, and Cassandra, across distinct racks)

- A cluster placement group can’t span multiple Availability Zones, but spread and partition placement groups can.

- The placement group name must be unique in the account

- Placement groups are applicable only to certain instance types, such as compute optimized, GPU, memory optimized, and storage optimized.

- It is NOT possible to merge placement groups

- It is possible to move an instance into a placement group only while the instance is in the stopped state, and only through the AWS CLI or an AWS SDK.

- Capacity Reservation

- IAM Role

- Shutdown behavior: stop or terminate the instance (see diagram)

- Termination protection (is off by default)

- Monitoring: enable CloudWatch detailed monitoring

- Tenancy

- Elastic Inference accelerator

- Bootstrap scripts based on User-Data (Advanced details) (see Bootstrap script section)

- Storage

- EBS (Elastic Block Store) that provides persistent block storage for EC2 instances

- It is automatically replicated within its Availability Zone (HA and durability)

- Define EBS volumes for Root (where the OS is installed: root for Linux and C drive for Windows)

- Type

- General Purpose gp2 (100/3000 IOPS)

- Provisioned IOPS SSD io1 (user-defined IOPS)

- Magnetic (standard)

- Additional

- Delete on termination (checked by default)

- Encryption (it’s possible to encrypt the volume here, and it’s also possible to use third-party tools)

- Define additional EBS volumes

- Types

- General Purpose gp2

- Provisioned IOPS SSD io1

- Magnetic (standard)

- Cold HDD (sc1)

- Throughput optimized (st1) Data warehouse (20/123 Throughput in MB/s and 500 MB/s per TB)

- EBS volumes by default are not deleted on termination

- Like the root device, it’s possible to encrypt the EBS volume

- Tags: key-value pairs that attach labels to instances, volumes, or both

- Security Groups: Create or assign a security group for this EC2 instance

- Security Groups are a stateful firewall attached to the instance

- By default, all inbound traffic is blocked

- By default, all outbound traffic is allowed

- Changes take effect immediately

- Cardinality

- The same security group can be attached to any number of EC2 instances

- It’s possible to attach multiple security groups per instance

- It’s not possible to block a specific IP address with a security group, because security groups support allow rules but not deny rules; use a network ACL instead.

- Key pair for SSH connections: create a new one or use an existing one. Note: the private key can be downloaded only when the key pair is created. If you plan to use an existing key pair, make sure you still have a copy of its private key.

After creation it’s possible to connect with:

- Standalone SSH client

- EC2 Instance Connect from the browser (based on HTML5)

- Java SSH client from the browser (a Java client is required)
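The security group behaviour described in the launch steps above (inbound traffic is blocked by default, rules can only allow, and several groups can be attached to one instance) can be modelled in a few lines. This is a toy model for intuition, not how AWS implements security groups: the `InboundRule` class and `inbound_allowed` function are hypothetical, and statefulness and outbound rules are left out.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    """One allow rule: protocol, port range, and source CIDR block."""
    protocol: str
    from_port: int
    to_port: int
    cidr: str


def inbound_allowed(rules, protocol, port, source_ip):
    """Return True if any allow rule matches; with no match, traffic is blocked.

    When several security groups are attached to an instance, pass the
    combined list of their rules: there are no deny rules, so rules only add up.
    """
    address = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (
            rule.protocol == protocol
            and rule.from_port <= port <= rule.to_port
            and address in ipaddress.ip_network(rule.cidr)
        ):
            return True
    return False


if __name__ == "__main__":
    rules = [
        InboundRule("tcp", 22, 22, "203.0.113.0/24"),  # SSH from one office range
        InboundRule("tcp", 443, 443, "0.0.0.0/0"),     # HTTPS from anywhere
    ]
    print(inbound_allowed(rules, "tcp", 22, "203.0.113.7"))
    print(inbound_allowed(rules, "tcp", 22, "198.51.100.1"))
```

Because the model has no way to express "deny this address", blocking a single IP requires a different mechanism, which is the role the notes assign to network ACLs.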

Monitoring and Status Check

Every EC2 instance has two main health checks:

- System status checks: the status of the underlying hardware

- Instance status checks: the health of the instance itself

Default Monitoring shows only:

- CPU Utilization

- Disk Reads

- Disk Read Operations

- Disk Writes

- Disk Write Operations

- Network In

- Network Out

- Network Packets In

- Network Packets Out

- Status Check Failed (Any)

- Status Check Failed (Instance)

- Status Check Failed (System)

- CPU Credit Usage

- CPU Credit Balance

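By default, these metrics are published at 5-minute intervals; CloudWatch detailed monitoring publishes them at 1-minute intervals. Other metrics, such as memory usage, must be published as custom metrics (for example with the CloudWatch agent).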

You can create an Amazon CloudWatch alarm that monitors an Amazon EC2 instance and automatically recovers the instance if it becomes impaired due to an underlying hardware failure or a problem that requires AWS involvement to repair.

When the StatusCheckFailed_System alarm is triggered and the recovery action is initiated, you are notified through the Amazon SNS topic that you selected when you created the alarm and associated the recovery action. Causes of a failed system status check include:

- Loss of network connectivity

- Loss of system power

- Software issues on the physical host

- Hardware issues on the physical host that impact network reachability

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-instance-recover.html
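As an illustration of the recovery alarm described above, the sketch below builds the parameters for a CloudWatch alarm on `StatusCheckFailed_System` with a recover action and an SNS notification. It only constructs a dictionary, so it runs without AWS credentials. The alarm name, period, evaluation periods, and threshold are assumptions chosen for the example, and the helper name `recover_alarm_params` is hypothetical.

```python
def recover_alarm_params(instance_id, region, sns_topic_arn):
    """Build keyword arguments for a CloudWatch alarm that recovers an instance."""
    return {
        "AlarmName": f"recover-{instance_id}",  # assumed naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,             # seconds; assumed value
        "EvaluationPeriods": 2,   # assumed value
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [
            f"arn:aws:automate:{region}:ec2:recover",  # EC2 recover action
            sns_topic_arn,                             # notification topic
        ],
    }


if __name__ == "__main__":
    params = recover_alarm_params(
        "i-0123456789abcdef0",
        "us-east-1",
        "arn:aws:sns:us-east-1:123456789012:ops-alerts",
    )
    for key, value in params.items():
        print(key, "=", value)
    # With boto3 installed and credentials configured, the dictionary could be
    # passed on as: boto3.client("cloudwatch").put_metric_alarm(**params)
```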

Bootstrap Scripts and Instance Metadata-Userdata

When you launch an instance in Amazon EC2, you have the option of passing user data to the instance that can be used to perform common automated configuration tasks and even run scripts after the instance starts. You can pass two types of user data to Amazon EC2:

- Shell Scripts

- If you are familiar with shell scripting, this is the easiest and most complete way to send instructions to an instance at launch

- User data shell scripts must start with the #! characters and the path to the interpreter you want to read the script (commonly /bin/bash)

- Scripts entered as user data are run as the root user, so do not use the sudo command in the script.

- Because the script is not run interactively, you cannot include commands that require user feedback (such as yum update without the -y flag).

- Cloud-init directives: can be passed to an instance at launch the same way that a script is passed, although the syntax is different
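The rules for user data shell scripts listed above (start with `#!`, no `sudo`, no interactive prompts) are easy to check before launch. The following is a rough sketch of such a check, under the assumption that a simple line-by-line scan is good enough; the function name `user_data_warnings` is hypothetical and the `yum` pattern covers only the example mentioned in the notes.

```python
import re


def user_data_warnings(script):
    """Return warnings for common mistakes in a user data shell script."""
    warnings = []
    lines = script.splitlines()
    if not lines or not lines[0].startswith("#!"):
        warnings.append("script must start with #! and the interpreter path")
    for number, line in enumerate(lines, start=1):
        stripped = line.strip()
        if stripped.startswith("#"):
            continue  # shebang or comment line
        if re.search(r"\bsudo\b", stripped):
            warnings.append(f"line {number}: sudo is unnecessary, the script runs as root")
        uses_yum = re.search(r"\byum\s+(update|install)\b", stripped)
        if uses_yum and "-y" not in stripped.split():
            warnings.append(f"line {number}: yum needs -y because nobody can answer prompts")
    return warnings


if __name__ == "__main__":
    script = "#!/bin/bash\nyum update -y\nyum install -y httpd\n"
    print(user_data_warnings(script))
    print(user_data_warnings("yum update\nsudo service httpd start\n"))
```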

Instance metadata is data about your instance that you can use to configure or manage the running instance. Instance metadata is divided into categories, for example, hostname, events, and security groups. It is useful, for example, for finding the IP address assigned to the EC2 instance.

Because your instance metadata is available from your running instance, you do not need to use the Amazon EC2 console or the AWS CLI. This can be helpful when you’re writing scripts to run from your instance.

To view all categories of instance metadata from within a running instance, use the following URI.

http://169.254.169.254/latest/meta-data/

To retrieve user data from within a running instance, use the following URI.

http://169.254.169.254/latest/user-data

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-instance-metadata.html
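A script running on the instance could read these URIs with nothing more than the standard library. The sketch below is a minimal example under two assumptions: it is executed from within a running EC2 instance, and the instance accepts plain GET requests to the metadata service (instances configured to require session tokens would reject them). The helper names are made up for the example.

```python
import urllib.request

BASE_URI = "http://169.254.169.254/latest"


def metadata_url(path=""):
    """URI of a metadata category, e.g. metadata_url("local-ipv4")."""
    return f"{BASE_URI}/meta-data/{path}"


def user_data_url():
    """URI that returns the user data passed at launch."""
    return f"{BASE_URI}/user-data"


def fetch(url, timeout=2):
    """GET a metadata URI; only reachable from inside a running instance."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    print(metadata_url())              # lists all categories when fetched
    print(metadata_url("local-ipv4"))  # private IPv4 address of the instance
    print(user_data_url())
    # On an EC2 instance: print(fetch(metadata_url("local-ipv4")))
```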

EBS Insight

As seen before, EBS (Elastic Block Store) provides persistent block storage for EC2 instances. It’s divided into the following categories:

- SSD: Optimized for transactional workloads involving frequent read/write operations with small I/O size, where the dominant performance attribute is IOPS.

- General Purpose (gp)

gp3 gp2 Use Cases - Low-latency interactive apps

- Development and test environments

Volume Size 1 GiB – 16 TiB Max IOPS per volume 16000 Max throughput 1000 MiB/s 250 MiB/s Multi-attach Not supported Boot volume Supported - The performance of

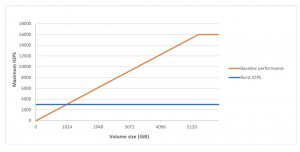

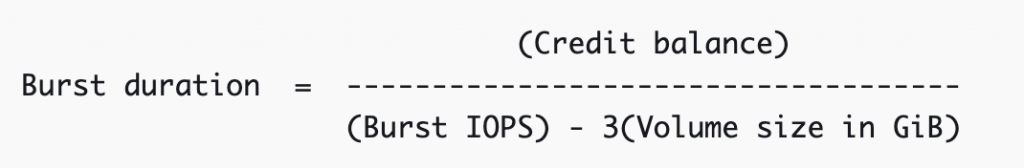

gp2volumes is tied to volume size, which determines the baseline performance level of the volume and how quickly it accumulates I/O credits. I/O credits represent the available bandwidth that yourgp2volume can use to burst large amounts of I/O when more than the baseline performance is needed.Each volume receives an initial I/O credit balance of 5.4 million I/O credits, which is enough to sustain the maximum burst performance of 3,000 IOPS for at least 30 minutes. Volumes earn I/O credits at the baseline performance rate of 3 IOPS per GiB of volume size. For example, a 100 GiBgp2volume has a baseline performance of 300 IOPS.

- When the baseline performance of a volume is higher than maximum burst performance, I/O credits are never spent. If the volume is attached to an instance built on the Nitro System, the burst balance is not reported. The burst duration of a volume is dependent on the size of the volume, the burst IOPS required, and the credit balance when the burst begins. This is shown in the following equation:

- Burst duration in seconds = (Credit balance) / ((Burst IOPS) − 3 × (Volume size in GiB))

- Throughput for a gp2 volume can be calculated using the following formula, up to the throughput limit of 250 MiB/s:

- Throughput in MiB/s = ((Volume size in GiB) × (IOPS per GiB) × (I/O size in KiB)) / 1024, where dividing by 1024 converts KiB to MiB
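The gp2 figures quoted above (3 IOPS per GiB, 5.4 million initial credits, 3,000 IOPS burst, 250 MiB/s limit) can be checked with a short calculation. This is a simplified sketch: it ignores the minimum and maximum IOPS of a volume and any other limits, it assumes credits drain at the burst rate minus the baseline rate, and the default I/O size of 256 KiB is an assumption of the example. The division by 1024 converts KiB to MiB.

```python
INITIAL_CREDITS = 5_400_000   # initial I/O credit balance of a gp2 volume
BURST_IOPS = 3000             # maximum burst performance
IOPS_PER_GIB = 3              # baseline rate at which credits are earned
MAX_THROUGHPUT_MIB_S = 250    # gp2 throughput limit


def baseline_iops(size_gib):
    """Baseline performance: 3 IOPS per GiB of volume size."""
    return IOPS_PER_GIB * size_gib


def burst_duration_seconds(size_gib, credits=INITIAL_CREDITS, burst_iops=BURST_IOPS):
    """Seconds a burst can last: credits divided by the net drain rate."""
    drain = burst_iops - baseline_iops(size_gib)
    if drain <= 0:
        return float("inf")  # baseline covers the burst, credits are never spent
    return credits / drain


def throughput_mib_s(size_gib, io_size_kib=256):
    """Baseline throughput in MiB/s, capped at the gp2 limit."""
    raw = baseline_iops(size_gib) * io_size_kib / 1024  # KiB/s -> MiB/s
    return min(raw, MAX_THROUGHPUT_MIB_S)


if __name__ == "__main__":
    print(baseline_iops(100))                # 100 GiB volume
    print(burst_duration_seconds(100) / 60)  # burst time in minutes
    print(throughput_mib_s(100))
```

For a 1 GiB volume the same function gives roughly 1,800 seconds, which matches the "at least 30 minutes" stated above.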

- Provisioned IOPS (io)

| | io2 Block Express | io2 | io1 |
|---|---|---|---|
| Use cases | Workloads that require sub-millisecond latency and sustained IOPS performance, or more than 64,000 IOPS or 1,000 MiB/s of throughput | High durability (like io2 Block Express); workloads that require sustained IOPS performance or more than 16,000 IOPS; I/O-intensive database workloads | Workloads that require sustained IOPS performance or more than 16,000 IOPS; I/O-intensive database workloads |
| Volume size | 4 GiB – 64 TiB | 4 GiB – 16 TiB | 4 GiB – 16 TiB |
| Max IOPS per volume | 256,000 | 64,000 | 64,000 |
| Max throughput | 4,000 MiB/s | 1,000 MiB/s | 1,000 MiB/s |
| Multi-attach | Not supported | Supported | Supported |
| Boot volume | Supported | Supported | Supported |

- HDD: Optimized for large streaming workloads where the dominant performance attribute is throughput.

| | Throughput Optimized HDD (st1) | Cold HDD (sc1) |
|---|---|---|
| Use cases | Low-cost HDD designed for frequently accessed, throughput-intensive workloads. Useful for big data, data warehouses, and log processing | The lowest-cost HDD design for less frequently accessed workloads. Useful for throughput-oriented storage for data that is infrequently accessed, and for scenarios where the lowest storage cost is important |
| Volume size | 125 GiB – 16 TiB | 125 GiB – 16 TiB |
| Max IOPS per volume | 500 | 250 |
| Max throughput | 500 MiB/s | 250 MiB/s |
| Multi-attach | Not supported | Not supported |
| Boot volume | Not supported | Not supported |

- Previous gen: can be used for workloads with small datasets where data is accessed infrequently and performance is not of primary importance. It’s recommended that you consider a current generation volume type instead.

| | Magnetic (standard) |
|---|---|
| Use cases | Workloads where data is infrequently accessed |
| Volume size | 1 GiB – 1 TiB |
| Max IOPS per volume | 40–200 |
| Max throughput | 40–90 MiB/s |
| Multi-attach | Not supported |
| Boot volume | Supported |

- An EBS volume must be in the same Availability Zone as the EC2 instance it is attached to

- It’s possible to modify the following while the volume is in use:

- The type of the volume

- The size of the volume

- The IOPS, depending on the type of volume

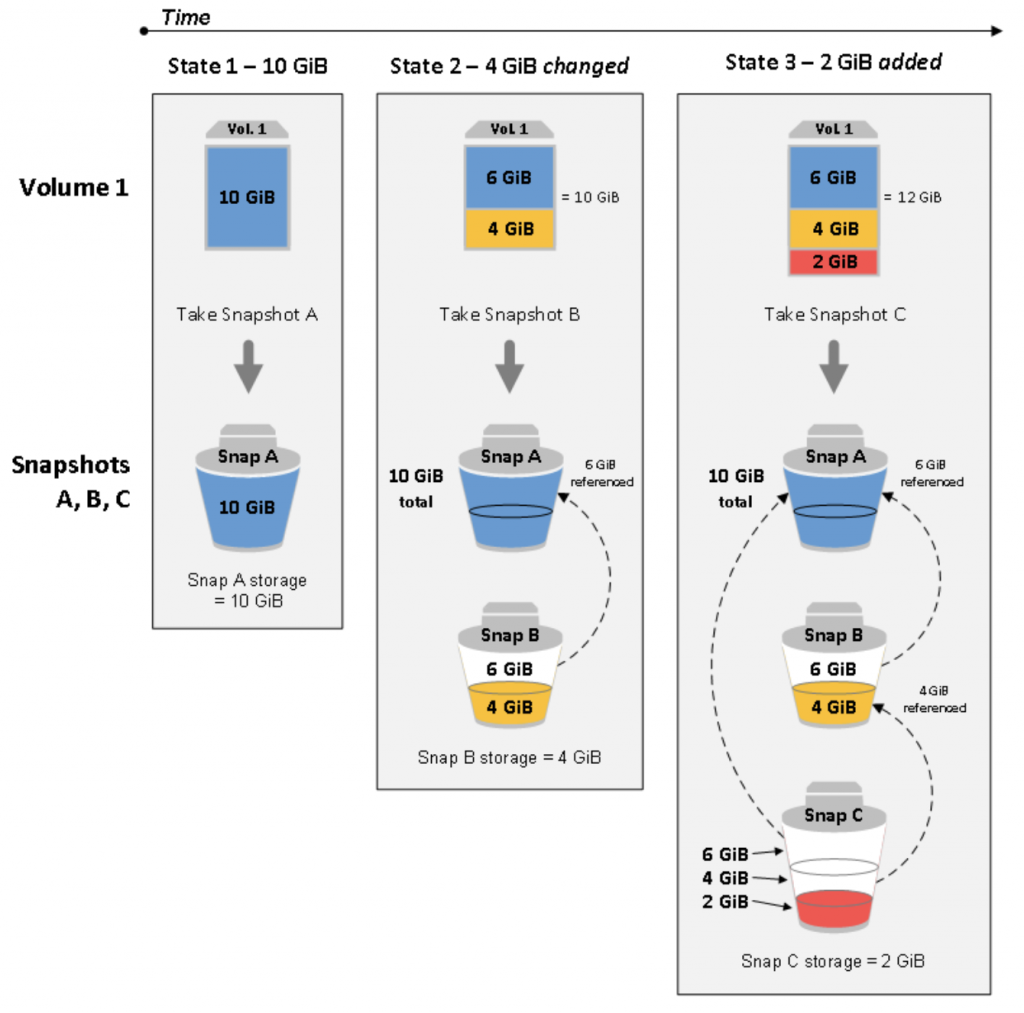

- Snapshots are incremental backups, which means that only the blocks on the device that have changed after your most recent snapshot are saved. This minimizes the time required to create the snapshot and saves on storage costs by not duplicating data. When you create an EBS volume based on a snapshot, the new volume begins as an exact replica of the original volume that was used to create the snapshot. When you delete a snapshot, only the data unique to that snapshot is removed.

- You can track the status of your EBS snapshots through CloudWatch Events

- Snapshots are stored in Amazon S3

- Multi-volume snapshots allow you to take exact point-in-time, data coordinated, and crash-consistent snapshots across multiple EBS volumes attached to an EC2 instance

- Charges for your snapshots are based on the amount of data stored

- A snapshot is taken of each of these three volume states:

- In the first state, the snapshot is a full copy and has the same size as the original volume.

- In the second state, the volume has the same size but part of its data has changed. The second snapshot contains only the blocks changed since the first snapshot and references the first snapshot for the rest.

- In the third state, the volume has grown. The third snapshot references the earlier snapshots for unchanged data and stores only the newly added data.

- You can share a snapshot across AWS accounts by modifying its access permissions.

- You can make copies of your own snapshots as well as snapshots that have been shared with you.

- A snapshot is constrained to the AWS Region where it was created. After you create a snapshot of an EBS volume, you can use it to create new volumes in the same Region. You can also copy snapshots across Regions, making it possible to use multiple Regions for geographical expansion, data center migration, and disaster recovery. You can copy any accessible snapshot that has a completed status.

- EBS snapshots fully support EBS encryption:

- Snapshots of encrypted volumes are automatically encrypted

- Volumes that you create from encrypted snapshots are automatically encrypted

- Volumes that you create from an unencrypted snapshot that you own or have access to can be encrypted on-the-fly.

- When you copy an unencrypted snapshot that you own, you can encrypt it during the copy process.

- When you copy an encrypted snapshot that you own or have access to, you can re-encrypt it with a different key during the copy process (The first snapshot you take of an encrypted volume that has been created from an unencrypted snapshot is always a full snapshot; the first snapshot you take of a re-encrypted volume, which has a different CMK compared to the source snapshot, is always a full snapshot)

- (source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSSnapshots.html)

- In order to migrate data from one Availability Zone to another, you must:

- Take a snapshot

- Create an image (AMI) from the EBS snapshot. During image creation you can change the following parameters:

- Architecture

- Root device name

- RAM Disk ID

- Description

- Virtualization type (Paravirtual or HVM)

- Kernel ID

- Launch EC2 instance from the created image.

- It’s possible to migrate data into another Region with the following steps:

- Take a snapshot

- Create an image from the EBS snapshot

- Copy the image into another Region with “Copy AMI”

- The same steps can also be used to move data into another Availability Zone

- It’s not possible to delete a snapshot while it is used by a registered AMI
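The incremental snapshot behaviour described above (a full first snapshot, then only changed or added blocks) can be illustrated with a toy model in which a volume is a dictionary of block number to content. This is purely a conceptual sketch: the function names are hypothetical, deleted blocks are not handled, and real EBS snapshots work on the service side rather than like this.

```python
def restore(snapshots):
    """Rebuild a volume by applying snapshots from oldest to newest."""
    volume = {}
    for snapshot in snapshots:
        volume.update(snapshot)
    return volume


def take_snapshot(volume, previous_snapshots):
    """Save only the blocks that differ from what earlier snapshots already hold."""
    known = restore(previous_snapshots)
    return {block: data for block, data in volume.items() if known.get(block) != data}


if __name__ == "__main__":
    volume = {0: "a", 1: "b", 2: "c"}
    first = take_snapshot(volume, [])                # full copy of the volume
    volume[1] = "B"                                  # part of the data changes
    second = take_snapshot(volume, [first])          # only the changed block
    volume[3] = "d"                                  # the volume grows
    third = take_snapshot(volume, [first, second])   # only the added block
    print(first, second, third)
    print(restore([first, second, third]) == volume)
```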

AMI

An Amazon Machine Image (AMI) provides the information required to launch an instance. You must specify an AMI when you launch an instance. You can launch multiple instances from a single AMI when you need multiple instances with the same configuration.

An AMI includes the following:

- One or more Amazon Elastic Block Store (Amazon EBS) snapshots, or, for instance-store-backed AMIs, a template for the root volume of the instance (for example, an operating system, an application server, and applications).

- Launch permissions that control which AWS accounts can use the AMI to launch instances.

- A block device mapping that specifies the volumes to attach to the instance when it’s launched.

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AMIs.html

You can select an AMI to use based on the following characteristics:

- Region (see Regions and Zones)

- Operating system

- Architecture (32-bit or 64-bit)

- Launch permissions

- public: The owner grants launch permissions to all AWS accounts.

- explicit: The owner grants launch permissions to specific AWS accounts.

- implicit: The owner has implicit launch permissions for an AMI.

- Storage for the root device

- All AMIs are categorized as either backed by Amazon EBS or backed by instance store (aka Ephemeral Storage).

| Characteristic | Amazon EBS-backed AMI | Amazon instance store-backed AMI |
|---|---|---|
| Boot time for an instance | < 1 min | < 5 min |
| Size limit for a root device | 16 TB | 10 GB |
| Root device volume | EBS volume | Instance store volume |
| Data persistence | By default, the root volume is deleted when the instance terminates | Data on any instance store volumes persists only during the life of the instance |
| Modifications | The instance type, kernel, RAM disk, and user data can be changed while the instance is stopped | Instance attributes are fixed for the life of an instance |
| Charges | You’re charged for instance usage, EBS volume usage, and storing your AMI as an EBS snapshot | You’re charged for instance usage and storing your AMI in Amazon S3 |
| AMI creation/bundling | Uses a single command/call | Requires installation and use of AMI tools |
| Stopped state | Can be in a stopped state. Even when the instance is stopped and not running, the root volume is persisted in Amazon EBS | Cannot be in stopped state; instances are running or terminated |

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ComponentsAMIs.html

EC2 Networking: ENI, ENA, EFA

Amazon VPC enables you to launch AWS resources, such as Amazon EC2 instances, into a virtual network dedicated to your AWS account, known as a virtual private cloud (VPC).

Amazon EC2 and Amazon VPC support both the IPv4 and IPv6 addressing protocols. By default, Amazon EC2 and Amazon VPC use the IPv4 addressing protocol; you can’t disable this behavior.

- A private IPv4 address is an IP address that’s not reachable over the Internet. You can use private IPv4 addresses for communication between instances in the same VPC

- When you launch an instance, we allocate a primary private IPv4 address for the instance. Each instance is also given an internal DNS hostname that resolves to the primary private IPv4 address; for example, ip-10-251-50-12.ec2.internal.

- A public IP address is an IPv4 address that’s reachable from the Internet. You can use public addresses for communication between your instances and the Internet.

- When you launch an instance in a default VPC, we assign it a public IP address by default.

- You can control whether your instance receives a public IP address as follows:

- Modifying the public IP addressing attribute of your subnet

- Enabling or disabling the public IP addressing feature during launch, which overrides the subnet’s public IP addressing attribute

- You can specify multiple private IPv4 and IPv6 addresses for your instances. The number of network interfaces and private IPv4 and IPv6 addresses that you can specify for an instance depends on the instance type

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-instance-addressing.html

Other doc about multiple IP: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/MultipleIP.html
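The internal DNS hostname shown above is derived directly from the primary private IPv4 address. The sketch below reproduces that naming pattern. The `ec2.internal` suffix comes from the example in these notes; the assumption that other Regions use a `<region>.compute.internal` suffix, and the function name itself, are not taken from the source.

```python
import ipaddress


def internal_hostname(private_ip, region="us-east-1"):
    """Build the internal DNS hostname for a primary private IPv4 address."""
    address = ipaddress.IPv4Address(private_ip)  # raises ValueError on bad input
    label = "ip-" + str(address).replace(".", "-")
    # Assumption: us-east-1 uses ec2.internal, other Regions use <region>.compute.internal
    suffix = "ec2.internal" if region == "us-east-1" else f"{region}.compute.internal"
    return f"{label}.{suffix}"


if __name__ == "__main__":
    print(internal_hostname("10.251.50.12"))
    print(internal_hostname("172.31.5.9", region="eu-west-1"))
```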

An Elastic Network Interface (ENI) is a logical networking component in a VPC that represents a virtual network card. It can include the following attributes:

- A primary private IPv4 address from the IPv4 address range of your VPC

- One or more secondary private IPv4 addresses from the IPv4 address range of your VPC

- One Elastic IP address (IPv4) per private IPv4 address

- One public IPv4 address

- One or more IPv6 addresses

- One or more security groups

- A MAC address

- A source/destination check flag

- A description

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html

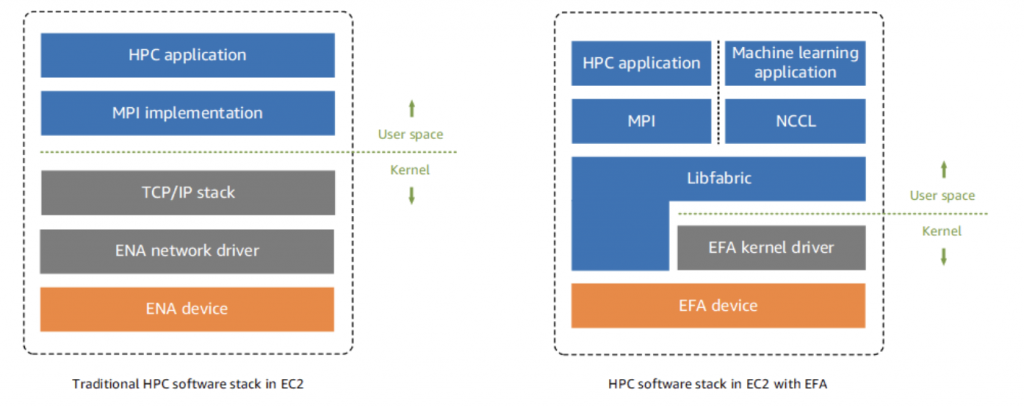

Enhanced networking uses single root I/O virtualization (SR-IOV) to provide high-performance networking capabilities on supported instance types. There is no additional charge for enhanced networking, but the instance type must support it. Depending on the instance type, enhanced networking is available through one of two mechanisms:

- ENA – Elastic Network Adapter (up to 100 Gbps)

- VF – Intel 82599 Virtual Function interface (up to 10 Gbps)

Amazon EC2 provides enhanced networking capabilities through the Elastic Network Adapter (ENA). To use enhanced networking, you must install the required ENA module and enable ENA support. To prepare for enhanced networking using the ENA, set up your instance as follows:

- Launch the instance using a current generation instance type, other than C4, D2, M4 instances smaller than m4.16xlarge, or T2

- Launch the instance using a supported version of the Linux kernel and a supported distribution, so that ENA enhanced networking is enabled for your instance automatically.

- Ensure that the instance has internet connectivity.

- Install and configure the AWS CLI or the AWS Tools for Windows PowerShell on any computer you choose, preferably your local desktop or laptop

- If you have important data on the instance that you want to preserve, you should back that data up now by creating an AMI from your instance

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/enhanced-networking-ena.html

An Elastic Fabric Adapter (EFA) is a network device that you can attach to your Amazon EC2 instance to accelerate High-Performance Computing (HPC) and machine learning applications. EFA enables you to achieve the application performance of an on-premises HPC cluster, with the scalability, flexibility, and elasticity provided by the AWS Cloud.

It provides all of the functionality of an ENA, with an additional OS-bypass functionality.

Source: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/efa.html