Shortly after announcing the availability of version 7.0.1, Nakivo has released another cool feature: QNAP integration. Packages for Synology and Western Digital NAS were already available; this third integration shows Nakivo's focus on making its backup solution more and more accessible to small and mid-sized companies too.

All the details about the QNAP package are available here: https://www.nakivo.com/backup-appliance/qnap-nas/

A note on updating to the newest release

If you have already installed a Virtual Appliance version earlier than 7.0.1, updating is simple:

- download the update file from https://www.nakivo.com/en/download/update.html

- upload the update file to the directory /opt/nakivo/updates

- in the VA console, select software update -> the updater file you just uploaded

- finally, accept the agreement and the update process will proceed.

Note: obviously, don't run the update while backup or restore activities are in progress.
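The upload step can also be scripted from a workstation. Here is a minimal sketch, assuming SSH access to the appliance as root; the function name `upload_update` and the `SCP` override are my own illustration, not part of Nakivo's tooling:

```shell
#!/bin/sh
# Sketch: copy a downloaded updater to the appliance's update directory.
# Host and file names passed to the function are up to you (placeholders).

# Directory named in the steps above as the upload target.
UPDATE_DIR="/opt/nakivo/updates"

# upload_update <local-file> <appliance-host> [user]
# Uses scp by default; set SCP to another command (e.g. "echo") for a dry run.
upload_update() {
    file="$1"
    host="$2"
    user="${3:-root}"

    if [ -z "$file" ] || [ -z "$host" ]; then
        echo "usage: upload_update <local-file> <appliance-host> [user]" >&2
        return 2
    fi
    if [ ! -f "$file" ]; then
        echo "update file not found: $file" >&2
        return 1
    fi

    ${SCP:-scp} "$file" "${user}@${host}:${UPDATE_DIR}/"
}
```

Calling it with `SCP=echo` first prints the arguments that would be passed to scp, which is a cheap way to check the target path before touching the appliance.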

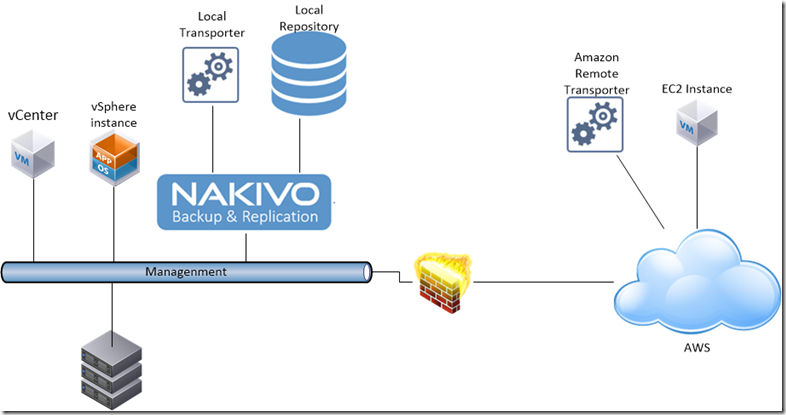

Cross cloud protection

Working in the cloud means taking care of your data, even if your cloud provider offers many protection options. More and more cloud infrastructures provide some kind of protection, but it is sometimes locked inside the provider itself (AWS, for example). Current IT best practices focus on data governance: hybrid cloud is the preferred way to keep your data under control, using the external cloud only as a supplier of resources when your own infrastructure cannot handle the classic “workload explosion”. For this reason, in every scenario it is important to find a way to bring your workload back when demand drops, both to save costs and to be sure that your data is “back home”.

In this example I’ll show how to recover production data into an on-premises workload using Nakivo File Level Restore.

Requirements:

- An AWS Account

- An on-premise infrastructure (in my case vSphere)

- The Nakivo VA OVA image

- 2 VMs (one in AWS EC2 and the other in the vSphere environment)

- A shared folder configured on the vSphere VM (it will be the destination folder for the restored data)

Keep in mind that the Nakivo VA default credentials are root/root.

Starting on-premise from scratch

Nakivo VA installation is very simple. In some cases, to speed up the deployment, it can be useful to use PowerCLI:

```powershell
# Connect to vCenter and read the OVA's configurable properties
Connect-VIServer -Server [vcenter]
$ovfconfig = Get-OvfConfiguration C:\path\top\NAKIVO_Backup_Replication_VA.ova
# Map the appliance network to the target port group
$ovfconfig.NetworkMapping.VM_Network.Value = "[pg_name]"
# Deploy the appliance, then power it on (Start-VM takes -VM, not -Name)
Import-VApp -Source C:\path\top\NAKIVO_Backup_Replication_VA.ova -OvfConfiguration $ovfconfig -Name "Nakivo" -Datastore (Get-Datastore -Name [datastore]) -Location (Get-Cluster [cluster]) -VMHost (Get-VMHost -Name [host])
Start-VM -VM "Nakivo"
```

After setting up the IP address and admin username, you’re ready to start the inventory and configure backup jobs.

Configure Nakivo

Assuming you have a couple of EC2 instances up and running, you should create AWS access for Nakivo. Simply go to IAM and create a user with the right policy, as described in my previous post here: https://blog.linoproject.net/nakivo-v-7-beta-and-ec2-replication/.

The procedure:

- Add a new inventory item for the AWS account, using the access key ID

- Add the local vCenter as a new inventory item, providing SSO credentials with enough privileges to back up and restore your vDC.

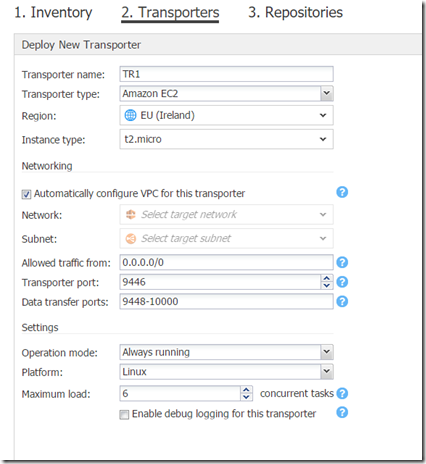

- Deploy AWS EC2 transporter

- Configure Local storage as Backup repository

To mount the shared folder on the Nakivo VA, you can use the Linux mount command, either connecting to the appliance via SSH or using the Invoke-VMScript PowerCLI cmdlet:

```powershell
Invoke-VMScript -VM "Nakivo" -GuestUser root -GuestPassword root -ScriptText "mkdir /mnt/flr_vm_test"
Invoke-VMScript -VM "Nakivo" -GuestUser root -GuestPassword root -ScriptText "mount -t cifs //[vmip]/FLR /mnt/flr_vm_test -o user=[vmuser],password=[vmpassword]"
```
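If you go the SSH route instead, a possible variation keeps the share password out of the command line by using the `credentials=` option of mount.cifs. This is only a sketch: the credentials file path and the function names are my assumptions, while the share name and mount point follow the example above.

```shell
#!/bin/sh
# Sketch: mount the share with a credentials file instead of inline options.
# The credentials path is an assumption; share and mount point follow the post.

CRED_FILE="${CRED_FILE:-/root/.flr_credentials}"
MOUNT_POINT="${MOUNT_POINT:-/mnt/flr_vm_test}"

# write_credentials <user> <password>
# Creates the file with a restrictive umask so only the owner can read it.
write_credentials() {
    ( umask 077
      printf 'username=%s\npassword=%s\n' "$1" "$2" > "$CRED_FILE" )
}

# mount_share <vm-ip>
# Mounts //<vm-ip>/FLR unless something is already mounted on the mount point.
# Set MOUNT to another command (e.g. "echo") for a dry run.
mount_share() {
    mkdir -p "$MOUNT_POINT"
    if mountpoint -q "$MOUNT_POINT"; then
        echo "already mounted: $MOUNT_POINT"
        return 0
    fi
    ${MOUNT:-mount} -t cifs "//$1/FLR" "$MOUNT_POINT" -o "credentials=$CRED_FILE"
}
```

On the appliance you would run `write_credentials` once with the VM user and password, then `mount_share` with the VM's IP address. The `mountpoint` check makes the second call safe to repeat.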

After backup, an unconventional File Level Restore

The backup configuration requires very few steps: after choosing the source VM from the inventory and configuring the schedule and repository (keep it local in this case), you’re ready to start the backup and wait for it to complete.

Now it’s time to start FLR:

- First of all, select Jobs -> Recover and choose “Individual Files”

- In the Backup Repositories view, choose Onboard repository -> the EC2 instance backup (with the correct ID)

- Select the restore point

- Click Next and, once the directory tree is displayed, take note of the path to the file or directory to restore, then move to PowerCLI

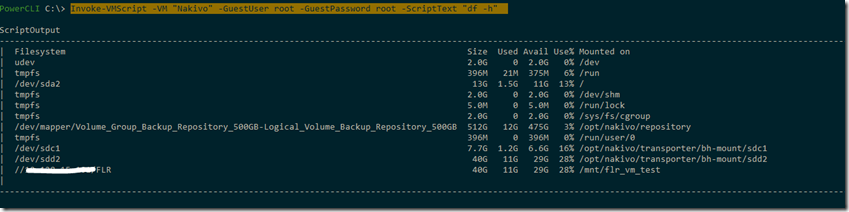

With PowerCLI, you can carry out the restore by locating the right directory, which is available under /opt/nakivo/transporter:

```powershell
Invoke-VMScript -VM "Nakivo" -GuestUser root -GuestPassword root -ScriptText "df -h"
```

After checking that the data exists under the correct mount point, copy the desired file or directory to the running on-premises VM.

```powershell
Invoke-VMScript -VM "Nakivo" -GuestUser root -GuestPassword root -ScriptText "[ -d /opt/nakivo/transporter/bh-mount/sdc1/home/ubuntu ] && cp -a /opt/nakivo/transporter/bh-mount/sdc1/home/ubuntu /mnt/flr_vm_test"
```

Now the backup data is on the on-premises machine.
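The one-liner above copies the directory but does not confirm that the copy is complete. Here is a small sketch of the same step with a content check added, meant to be run in an SSH session on the appliance; `copy_and_verify` is a hypothetical helper name, not a Nakivo command:

```shell
#!/bin/sh
# Sketch: copy a restored directory to the share and compare the result.

# copy_and_verify <source-dir> <existing-destination-dir>
# Returns 0 only if the copied tree matches the source file by file.
copy_and_verify() {
    src="$1"
    dest="$2"

    if [ ! -d "$src" ]; then
        echo "source not found: $src" >&2
        return 1
    fi
    if [ ! -d "$dest" ]; then
        echo "destination not found: $dest" >&2
        return 1
    fi

    # Because dest already exists, cp -a places the directory inside it
    # under its own name (e.g. dest/ubuntu).
    cp -a "$src" "$dest"

    # diff -r exits non-zero if any file is missing or differs.
    diff -r "$src" "$dest/$(basename "$src")" > /dev/null
}
```

With the paths from this example, the call would be `copy_and_verify /opt/nakivo/transporter/bh-mount/sdc1/home/ubuntu /mnt/flr_vm_test`. The final status comes from diff, so warnings from cp about attributes it cannot preserve on the share do not by themselves mark the restore as failed.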

Final thoughts

Nakivo can protect essentially three kinds of workload: vSphere (vCloud included), Amazon EC2 and Hyper-V. Due to the nature of the workloads themselves, native cross-cloud replication is not possible; but File Level Restore, as shown in this example, can be an alternative way to reach this goal!